What is Cache Line?

In simple terms, cache memory is divided into partitions of equal size, and these partitions are called cache lines.

A cache line is the block of memory that is moved into the cache memory as a unit. It is usually fixed in size, ranging from 16 bytes to 256 bytes. The exact size depends largely on the processor design and the type of application it is intended for.

KEY TAKEAWAYS

- A cache line is the fixed-size block of memory that is moved to the cache memory.

- The cache line can be of any size between 16 bytes and 256 bytes, depending primarily on the processor design and the type of application it is intended for.

- The idea of the cache line is based on the principle of locality of reference: the entire line is read and cached straight away instead of single bytes.

- A cache line typically holds the data, an address tag, metadata, and a few other control bits depending on its design.

Understanding Cache Line

Cache memory and cache lines are often confused with each other, but they are not the same thing.

This article covers both the technical and the non-technical aspects of the cache line, its functions and more.

In order to understand cache lines, you will first need to know about the cache memory itself.

There are a few specific attributes of cache memory which are:

- It is a Random Access Memory.

- It sits between the main memory and the processor of the computer system and

- It is responsible for bridging the mismatch in speeds between the slower main memory and the faster processor of the computer system.

Cache memory is made up of three basic components:

- Some amount of RAM or Random Access Memory to store the data for the cache

- Some extra amount of RAM to store other metadata and the tags and

- A controller that is responsible for moving the data around and also for tag lookups.

The cache line, or cache block, is the smallest unit of the cache memory: the fixed number of words held by each cache entry.

It is this unit that is moved between the main memory of the computer system and the cache memory.

The system designer can configure the cache circuits for a different line size.

In addition to that, there are algorithms that can adjust the cache line size dynamically at run time.

The Concept and Advantages

The concept of the cache line is based on the principle of locality of reference and takes full advantage of it.

Instead of reading a single byte or word from the main memory at a time, the full line is read and cached straight away.

The advantages of the cache line concept are many, but the two major ones are as follows:

- When one specific location is read, the locations close to it are very likely to be read soon afterwards and

- Reading consecutive locations is much faster because they can be accessed in sequence, taking full advantage of page-mode Dynamic Random Access Memory or DRAM.

When the cache system of a computer is designed, the size of the cache lines is considered a vital parameter because it affects many other factors in the caching system.

Effects of Changing Cache Lines

As said earlier, you can configure the cache lines or cache blocks but there are some effects of such changes that you should also be aware of.

These effects vary according to the situation in which the changes are made.

Effects of changing the cache line size on spatial locality:

In general, the larger the block size, the better the cache exploits spatial locality.

However, when the cache size is kept constant, there are two types of effects based on two different conditions as follows:

- When you decrease the block size, it will hold a smaller number of nearby addresses in it. This means that only a smaller number of these addresses will be brought into the cache. This will increase the chances of cache misses which, in turn, will reduce the utilization of the spatial locality. In short, when the block size is small, the spatial locality will be inferior.

- And, the opposite happens when you increase the block size. Meaning, it will hold a larger number of nearby addresses in it and therefore a large number of those will be brought to the cache. This will increase the chances of cache hits and, in turn, the utilization of the spatial locality. In short, when the block size is large, the spatial locality will be better.

Effects of changing the cache line size on the cache tag in a direct mapped cache:

Typically, the cache tag is not affected in any way by the block size in a direct mapped cache.

Once again, when you keep the size of the cache constant while changing the cache line size, there are two specific effects and results of it as follows:

- When you decrease the size of the block, the number of lines in the cache increases. This decreases the number of bits in the block offset, but the additional cache lines increase the number of bits in the line number. There is therefore no effect on the cache tag, because the total number of bits in the line number and the block offset remains constant.

- And, when you increase the block size, the opposite happens. This means that the number of lines in the cache decreases, which, in turn, increases the number of bits in the block offset. Since the number of cache lines is now reduced, the number of bits in the line number will be less as well. Once again, this will not have any significant effect on the cache tag because the sum of the number of bits in the line number and that in the block offset will remain constant.

Effects of changing the cache line size on cache tag in fully associative cache:

In a fully associative cache, reducing the size of the cache line increases the size of the cache tag, and vice versa.

When the size of the cache is kept constant but the block size is changed, these are the two specific effects:

- When the size of the block is reduced, the number of bits in the block offset is also reduced, which increases the number of bits in the cache tag.

- And, when you increase the size of the block, the number of bits in the block offset also increases, which reduces the number of bits in the cache tag.

Effects of changing the cache line size on cache tag in set associative cache:

Once again, in such situations, the cache tag will not be affected in any way whatsoever.

When the size of the cache is kept constant and the changes in cache lines are made, the effects it will have are as follows:

- When the size of the block is reduced, it will increase the number of lines in the cache and at the same time it will reduce the number of bits in the block offset. However, the increased number of cache lines will increase the number of sets in the cache as well which will eventually increase the number of bits in set number. This means that there will be no effect on the cache tag because the total number of bits in set number as well as in the block offset will be constant.

- And, when you increase the size of the block, the reverse happens. The number of lines in the cache decreases while the number of bits in the block offset increases. With fewer cache lines, the number of sets in the cache also decreases, which reduces the number of bits in the set number. Once again, this has no effect on the cache tag because the total number of bits in the set number and the block offset remains constant.
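The three tag cases above can be checked with a little arithmetic. The following is a minimal sketch, assuming 32-bit addresses, a 64 KB cache and power-of-two sizes; the function name `address_fields` and its parameters are made up for this illustration.

```python
def address_fields(cache_bytes, line_bytes, ways=1, addr_bits=32):
    """Split an address into (tag_bits, index_bits, offset_bits).

    ways=1 models a direct mapped cache and ways=None a fully
    associative one. All sizes are assumed to be powers of two.
    """
    lines = cache_bytes // line_bytes
    if ways is None:  # fully associative: a single set holds every line
        ways = lines
    sets = lines // ways
    offset_bits = line_bytes.bit_length() - 1  # log2(line_bytes)
    index_bits = sets.bit_length() - 1         # log2(sets)
    tag_bits = addr_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


CACHE = 64 * 1024  # cache size kept constant while the line size changes
for name, ways in [("direct mapped", 1),
                   ("4-way set associative", 4),
                   ("fully associative", None)]:
    for line in (32, 64, 128):
        print(name, line, address_fields(CACHE, line, ways))
```

Under these assumptions the tag stays at 16 bits for the direct mapped cache and at 18 bits for the 4-way cache whatever the line size, while the fully associative tag grows from 25 to 27 bits as the line shrinks from 128 to 32 bytes.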

Effects of changing the cache line size on cache miss penalty:

A smaller cache block results in a lower cache miss penalty.

This is because when a cache miss happens, it is required to bring the block holding the necessary word from the main memory of the system.

Whether this miss penalty will be more or less will depend on the following conditions:

- If the block is small, it takes less time to bring it from the main memory to the cache. This results in a lower miss penalty.

- On the other hand, if the block is large, it takes longer to bring it from the main memory to the cache, which incurs a higher miss penalty.
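A rough way to see this trade-off is a toy cost model. This is only a sketch: the 8-byte bus width, the 100-cycle initial latency and the 4 cycles per transfer are made-up illustrative numbers, not measurements of any real system.

```python
def miss_penalty_cycles(line_bytes, bus_bytes=8,
                        first_access_cycles=100, cycles_per_transfer=4):
    """Toy model: fixed initial latency plus one bus transfer per chunk.

    All default values are hypothetical and chosen only for illustration.
    """
    transfers = line_bytes // bus_bytes
    return first_access_cycles + transfers * cycles_per_transfer


for line_bytes in (32, 64, 128):
    print(line_bytes, miss_penalty_cycles(line_bytes))
```

In this model the penalty grows with the line size because more bus transfers are needed to fill the line.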

Effects of changing cache tag on cache hit time:

Cache hit time refers to the time needed to find out whether or not the required block is in the cache.

This involves comparing two tags: the tag of the generated address and the tag stored with the cache line.

A smaller cache tag results in a shorter cache hit time.

There are two specific effects, depending on the size of the cache tag:

- When the cache tag is smaller, the time taken to make the comparison is less. This means that the cache hit time is lower.

- On the other hand, if the cache tag is larger, the time taken to compare the tags is greater. This means that the cache hit time is higher.

So, considering all these effects of changing cache lines, it can be concluded that a smaller block size results in a lower cache miss penalty. The results can be further summarized as:

- A larger block size means better spatial locality

- A smaller block size does not mean a smaller cache tag and

- A larger cache tag means a longer cache hit time.

Technical Aspects

Technically, the tag RAM, in particular, holds the information of the addresses of the data that is stored in the cache.

It is necessary to keep the size of the address information reasonable and so the cache is usually split into different blocks of uniform size.

These are called cache lines.

Though there is no golden rule, typically, the most common sizes of the cache lines are:

- 32 bytes

- 64 bytes and

- 128 bytes.

If you have a cache of 64 KB and the cache line size is 64 bytes, then the cache will have 1024 lines.

This means that the cache needs to track only 1024 different addresses in order to define the contents available in that cache.
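The line count follows directly from dividing the cache size by the line size. A minimal sketch, where the helper name `num_lines` is made up for this illustration:

```python
def num_lines(cache_bytes, line_bytes):
    """Number of cache lines in a cache of the given size."""
    return cache_bytes // line_bytes


# The same 64 KB cache with the three common line sizes listed above.
for line_bytes in (32, 64, 128):
    print(line_bytes, num_lines(64 * 1024, line_bytes))
```

For the 64 KB cache this gives 2048, 1024 and 512 lines for line sizes of 32, 64 and 128 bytes.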

Strictly speaking, the cache line may signify the data only, but colloquially it typically refers to the data together with the metadata related to that data, which includes the status, the address and more.

Usually, data is brought into the CPU one cache line at a time, irrespective of the size of the data that is actually being read.

For example, if a processor uses 64-byte cache lines and the desired byte is not present in the cache, the processor will request the 64 bytes that start at the cache line boundary.

This boundary is the largest multiple of 64 that is less than or equal to the required address.

Typically, modern computer memory modules transfer 64 bits, or 8 bytes, of data at a time.

This is usually done in a burst of 8 transfers, so a single command triggers a read or write of an entire 64-byte cache line from memory.

In general, SDRAM (Synchronous Dynamic RAM), whether DDR1, DDR2, DDR3, or DDR4, allows the burst transfer size to be programmed up to 64 bytes.

CPUs usually select the burst size to match the size of the cache line.
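The burst arithmetic can be written out as follows, using the 64-bit width and burst of 8 quoted above; the variable names are made up for this illustration.

```python
BUS_BITS = 64      # data width of the memory module, as quoted above
BURST_LENGTH = 8   # transfers per burst, as quoted above

bytes_per_transfer = BUS_BITS // 8
bytes_per_burst = bytes_per_transfer * BURST_LENGTH
print(bytes_per_transfer, bytes_per_burst)
```

One burst therefore moves 8 × 8 = 64 bytes, which is exactly one 64-byte cache line.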

If the processor cannot predict a memory access and prefetch it, the retrieval process, from knowing the address to the CPU receiving the data, takes about 90 nanoseconds or about 250 clock cycles.

On the other hand, on an L1 cache hit on a modern x86 CPU, the load-use latency is about 3 or 4 cycles and the store-reload or store-forwarding latency is about 4 or 5 cycles.

The picture is similar on other architectures.

For example, with 64-byte cache lines, memory is divided into distinct, non-overlapping 64-byte blocks, and the start address of every block has its lowest 6 address bits equal to zero.

Since these six bits are always zero, they do not need to be sent each time, which effectively increases the addressable space 64 times for any given address bus width.

Apart from reading in advance and saving 6 bits on the address bus, cache lines also solve another problem, which is the organization of the cache memory itself.

For example, if a cache were divided into 64-bit, or 8-byte, cells, then each cache cell would also need to store the address of the memory cell whose value it holds.

However, if the address itself is 64 bits, then half the size of the cache will be consumed by addresses, which results in 100% overhead.

The cache line solves this problem: since a line is 64 bytes in size, the CPU only needs to store 64 bits - 6 bits = 58 bits of address.

This means that it does not need to store the zero bits, and 64 bytes, or 512 bits, can be cached with an overhead of 58 bits, or about 11%.
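The two overhead figures can be reproduced with a short calculation. A minimal sketch, where the helper name `tag_overhead` is made up for this illustration:

```python
def tag_overhead(data_bits, address_bits):
    """Stored address bits as a fraction of the cached data bits."""
    return address_bits / data_bits


per_cell = tag_overhead(64, 64)        # one 64-bit address per 8-byte cell
per_line = tag_overhead(64 * 8, 58)    # one 58-bit address per 64-byte line
print(f"{per_cell:.0%} {per_line:.1%}")
```

The per-cell layout costs 100%, while the per-line layout costs 58 / 512, or roughly 11.3%.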

In reality, the stored addresses are even smaller than this.

However, each line also carries a few bits of status information, such as whether the cache line is valid and whether it has been modified and needs to be written back to RAM.

And then, in a set associative cache, a given cache cell cannot hold just any address but only a subset of them.

This makes the stored address bits fewer still and allows the cache to be accessed in parallel.

That is, each subset can be accessed independently of the other subsets.

It is very important to synchronize cache or memory access among the various virtual cores or the independent multiple processing units in each core and the several processors on one main board.

For that it is good to have cache lines that are 64 bytes wide because these will then correspond to memory blocks that start on the addresses that are typically divisible by 64.

The offsets into a cache line are usually the least significant 6 bits of an address.

Therefore, for any particular byte, the cache line that needs to be fetched can be found easily by clearing these 6 bits of the address, which amounts to rounding down to the nearest address that is divisible by 64.

However, all these are done by the relevant hardware.
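Although the hardware does this on its own, the same bit manipulation is easy to reproduce in software. A minimal sketch for 64-byte lines; the function names and the sample address are made up for this illustration.

```python
LINE_BYTES = 64
OFFSET_MASK = LINE_BYTES - 1  # 0b111111, the lowest 6 bits


def line_base(addr):
    """Start address of the cache line containing addr (low 6 bits cleared)."""
    return addr & ~OFFSET_MASK


def line_offset(addr):
    """Position of addr inside its cache line (the low 6 bits)."""
    return addr & OFFSET_MASK


addr = 0x12345  # arbitrary example address
print(hex(line_base(addr)), line_offset(addr))
```

For this sample address the line starts at 0x12340 and the byte sits at offset 5 within the line.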

Cache Line Functions

Now, knowing about the technicalities related to the cache lines, it will be easier for you to understand the functions of these memory blocks.

But first, it is good to know what the cache lines typically hold. Ideally, the cache lines hold:

- The data itself

- The address tag which enables it to differentiate between different physical addresses mapping to the cache line and

- The metadata which is ideally a valid bit along with a few other control bits which depend on the particular design.

This means that modern caches do not cache just one memory address at a time. Instead, a single cache line contains several bytes of data.

All of these bytes can be accessed in a single operation, which also allows the cache to exploit spatial locality in memory.

The term cache block is often used interchangeably with cache line, although strictly it signifies the data section only.

Now, take a look at the functions of the cache lines.

The primary function of the cache lines is to act as the unit of data transfer between the memory of the system and the cache.

Normally, the cache line has 64 bytes and the CPU will read or write the whole cache line when any location within this 64 byte area is being written or read.

Sometimes, as said earlier, the processor will also need to prefetch cache lines by evaluating the pattern of memory access of a thread.

It is very important to organize the access around the cache lines. This is because it will have notable consequences for the performance of the applications.

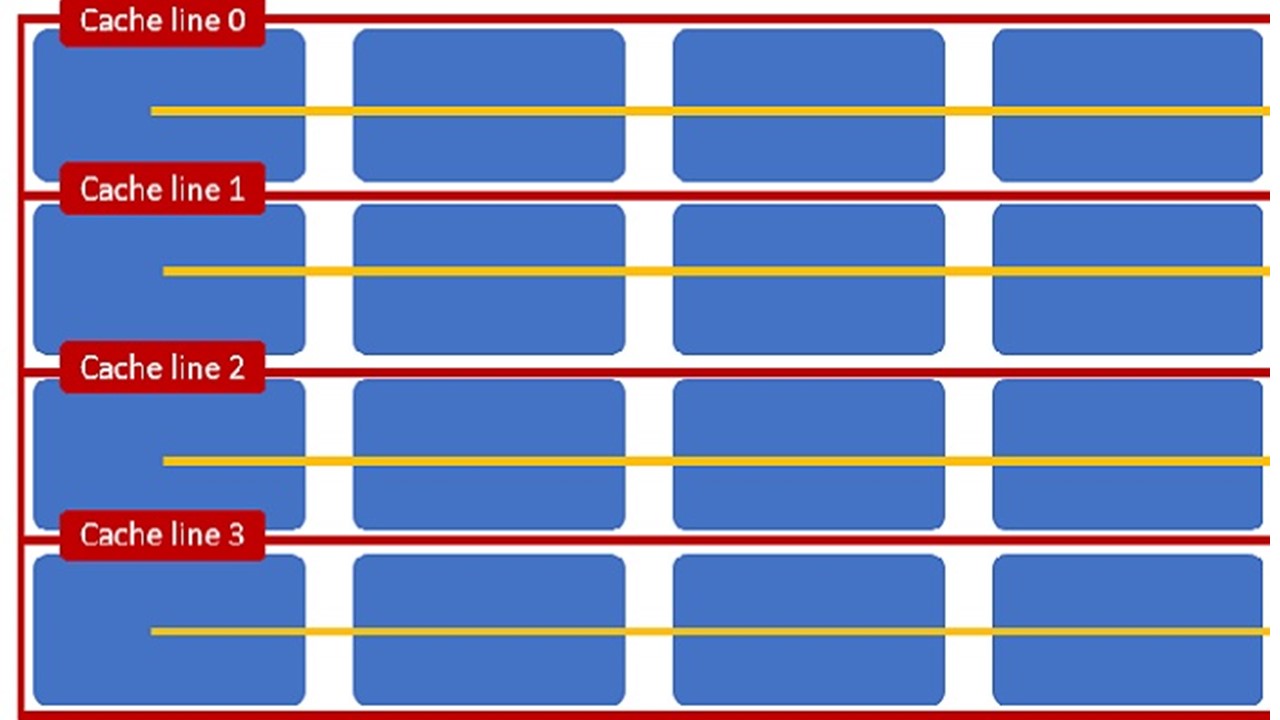

For example, when an application accesses a two dimensional array that spans several cache lines, row-wise access results in the following:

- The processor fetches the first cache line, say cache line 0, on the first access to the array. Once cache line 0 is in the cache, it can read successive items directly from the cache.

- The processor may also prefetch cache line 1 even before the application attempts to access the memory area corresponding to that cache line.

If the data structure is accessed column-wise instead, things do not go as well.

Here the process still starts with cache line 0, but it soon needs to read in cache line 1 and the other cache lines as well.

In such situations, every access can incur the overhead of a main memory access.

Moreover, if the cache is full, successive iterations over the columns may result in repeated fetching of the same cache lines.
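The difference between the two access orders can be illustrated with a small simulation. This is only a sketch of a tiny fully associative cache with least-recently-used replacement and no prefetching; the 8-byte element size, the 64 × 64 array and the function name `count_line_fetches` are assumptions made for this illustration.

```python
from collections import OrderedDict

LINE_BYTES = 64
ELEM_BYTES = 8  # hypothetical element size


def count_line_fetches(rows, cols, column_wise, cache_lines):
    """Count line fetches while walking a row-major rows x cols array."""
    cache = OrderedDict()  # line number -> None, oldest entry first
    fetches = 0
    outer, inner = (cols, rows) if column_wise else (rows, cols)
    for i in range(outer):
        for j in range(inner):
            r, c = (j, i) if column_wise else (i, j)
            line = (r * cols + c) * ELEM_BYTES // LINE_BYTES
            if line in cache:
                cache.move_to_end(line)        # hit: mark as recently used
            else:
                fetches += 1                   # miss: fetch from main memory
                cache[line] = None
                if len(cache) > cache_lines:
                    cache.popitem(last=False)  # evict least recently used
    return fetches


print(count_line_fetches(64, 64, column_wise=False, cache_lines=16))
print(count_line_fetches(64, 64, column_wise=True, cache_lines=16))
```

With a cache of only 16 lines, the row-wise walk fetches each of the 512 lines once, while the column-wise walk misses on all 4096 accesses because every line is evicted before it is reused.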

Organizing data around cache lines also favors data structures that store their elements by value.

This is why vectors and arrays stored by value are often preferred.

For example, with a vector of pointers, vector<Entity*>, the Entity objects may be located far apart in memory if each of them is allocated from the heap.

This means that access to each entity may cause a complete cache line to be loaded.

On the other hand, if the vector stores the entities by value, vector<Entity>, the Entity objects are laid out in adjoining memory.

This reduces the number of cache line loads significantly.
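The effect of the two layouts can be modeled by counting how many distinct cache lines a set of object addresses touches. This is only a sketch: Python does not expose object layout, so the addresses below are made-up numbers standing in for a contiguous vector<Entity> and for heap-allocated Entity objects, and the 16-byte entity size is an assumption.

```python
LINE_BYTES = 64
ENTITY_BYTES = 16  # hypothetical size of one Entity


def lines_touched(addresses, size):
    """Number of distinct cache lines covered by objects of `size` bytes."""
    lines = set()
    for addr in addresses:
        first = addr // LINE_BYTES
        last = (addr + size - 1) // LINE_BYTES
        lines.update(range(first, last + 1))
    return len(lines)


# By value: 100 entities packed back to back.
by_value = [i * ENTITY_BYTES for i in range(100)]
# By pointer: 100 entities scattered 4096 bytes apart (hypothetical heap layout).
by_pointer = [i * 4096 for i in range(100)]

print(lines_touched(by_value, ENTITY_BYTES))
print(lines_touched(by_pointer, ENTITY_BYTES))
```

In this model the packed layout touches 25 cache lines and the scattered layout touches 100, one per entity, before even counting the lines that hold the pointers themselves.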

Another useful technique is to keep the validity flags of array entries in a separate descriptor array.

This matters when a loop accesses array elements conditionally.

Typically, the validity of an array element is decided by a flag within the element itself.

A ‘for’ loop that checks this flag then causes cache line loads even for the entries that are to be skipped.

When the validity flags are kept in a separate descriptor array, cache performance improves considerably.

In such situations, the validity descriptors are loaded from a single cache line, or from a few, which means that the application loads fewer cache lines.

This is because the array content and the descriptors are separated, so the cache lines for the invalid array entries are never loaded.
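A simple count shows why separating the flags helps. This is only a sketch under stated assumptions: each array entry is taken to occupy exactly one 64-byte cache line, each validity flag is taken to be one byte, and both function names are made up for this illustration.

```python
LINE_BYTES = 64  # each entry is assumed to fill exactly one cache line


def lines_with_embedded_flags(valid):
    """Flag stored inside each entry: every entry's line is loaded."""
    return len(valid)


def lines_with_descriptor_array(valid):
    """Flags in a separate 1-byte-per-entry array: only valid entries load."""
    flag_lines = -(-len(valid) // LINE_BYTES)  # ceiling division
    return flag_lines + sum(valid)


# 100 entries of which every tenth one is valid.
valid = [i % 10 == 0 for i in range(100)]
print(lines_with_embedded_flags(valid))
print(lines_with_descriptor_array(valid))
```

With 10 valid entries out of 100, the embedded layout loads 100 cache lines while the separate descriptor array needs 2 lines for the flags plus 10 for the valid entries.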

False sharing can be eliminated by avoiding the sharing of cache lines between threads.

As you may know, the CPU die comes with Level 1 and Level 2 caches that are dedicated to each of the cores in it, and the Level 3 cache is typically shared.

This division of the cache architecture in different tiers often results in coherency issues among them.

For example, when the core on the far left reads a memory location, which loads cache line 0 into its Level 1 cache, and the core on the far right then tries to read a memory location that is also on cache line 0, the CPU will need to set off the cache coherency process.

This process ensures a coherent representation of cache line 0 across all available caches.

However, this is a costly process and also results in a lot of waste of memory bandwidth.

This eventually annuls the benefits offered by caching memory.

Even an application that is otherwise optimized for caching will suffer from the lag induced by cache coherency.

Consider two cores that operate on alternate entries of an array, with each core updating its own entry in a shared descriptor array. The cores access separate memory addresses, yet they end up sharing a cache line. This is called false sharing.

Without it, two cores could deliver double the throughput of a single core, but the shared descriptors prevent that.

This happens when the descriptor array is small enough to fit into a single cache line.

The problem can be easily resolved by placing the descriptors for the different cores on different cache lines.
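Whether two descriptors share a cache line depends only on their addresses. The following is a minimal sketch of that check; the 8-byte descriptor size, the base address of 0 and the function names are assumptions made for this illustration.

```python
LINE_BYTES = 64


def same_line(addr_a, addr_b):
    """True if both addresses fall inside the same cache line."""
    return addr_a // LINE_BYTES == addr_b // LINE_BYTES


def descriptor_address(base, index, stride):
    """Address of descriptor `index` when descriptors are `stride` bytes apart."""
    return base + index * stride


# Packed: hypothetical 8-byte descriptors for core 0 and core 1 sit side by side.
packed = same_line(descriptor_address(0, 0, 8), descriptor_address(0, 1, 8))
# Padded: each descriptor is given a full cache line of its own.
padded = same_line(descriptor_address(0, 0, LINE_BYTES),
                   descriptor_address(0, 1, LINE_BYTES))
print(packed, padded)
```

The packed descriptors land on the same line, so the two cores would falsely share it, while padding each descriptor to the line size keeps them apart at the cost of some unused memory.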

Therefore, the functions of the cache lines are quite varied, and using them well is important to make sure that all the cores of a multi-core processor perform as well as desired.

Conclusion

After reading this article, you should now know what a cache line, or cache block, is, what it contains, where it fetches its data from, and many of the technical aspects behind it.

All of this should be of interest if you are tech savvy.