What is Cache Only Memory Architecture (COMA)?

Cache Only Memory Architecture (COMA) is a computer memory organization used in multiprocessors, in which the local memory of each node, typically Dynamic Random Access Memory (DRAM), is used as a cache.

This is what distinguishes COMA from Non-Uniform Memory Access (NUMA) organizations, in which the local memory of each node serves as main memory.

COMA is a complex architecture, and it takes some background to understand it properly.

KEY TAKEAWAYS

- Cache Only Memory Architecture is a memory organization found in multiprocessors.

- COMA has no fixed home nodes, which reduces the number of redundant copies and makes better use of memory.

- The design of COMA is similar to NUMA, but the shared memory consists of cache memory, and data migrates to the processor that requests it.

- There is no separate main memory in COMA: the address space consists of all the caches, and a cache directory supports remote access.

Understanding Cache Only Memory Architecture (COMA)

Understanding COMA fully means working through a number of technical details, so this section starts with the basics.

COMA can be seen as a variant of Cache Coherent Non-Uniform Memory Access (CC-NUMA) architecture.

The difference between COMA and CC-NUMA lies in the shared memory module, which in COMA is actually a cache.

Here, every memory line comes with a tag which includes:

- The state and

- The address of the line.

When a CPU references a line, the line is transparently brought into both its private caches and its nearby portion of the NUMA shared memory (its local memory), possibly displacing a valid line already held in that memory.

In effect, each shared memory module behaves like a large cache, which is where the name COMA comes from.

COMA increases the chance that data is available locally.

This is because the COMA hardware automatically replicates data and migrates it to the memory module of the node that is currently accessing it.

As a result, the likelihood of repeated long-latency memory accesses is significantly reduced.

COMA achieves this by adapting dynamically to how the shared data is actually used.

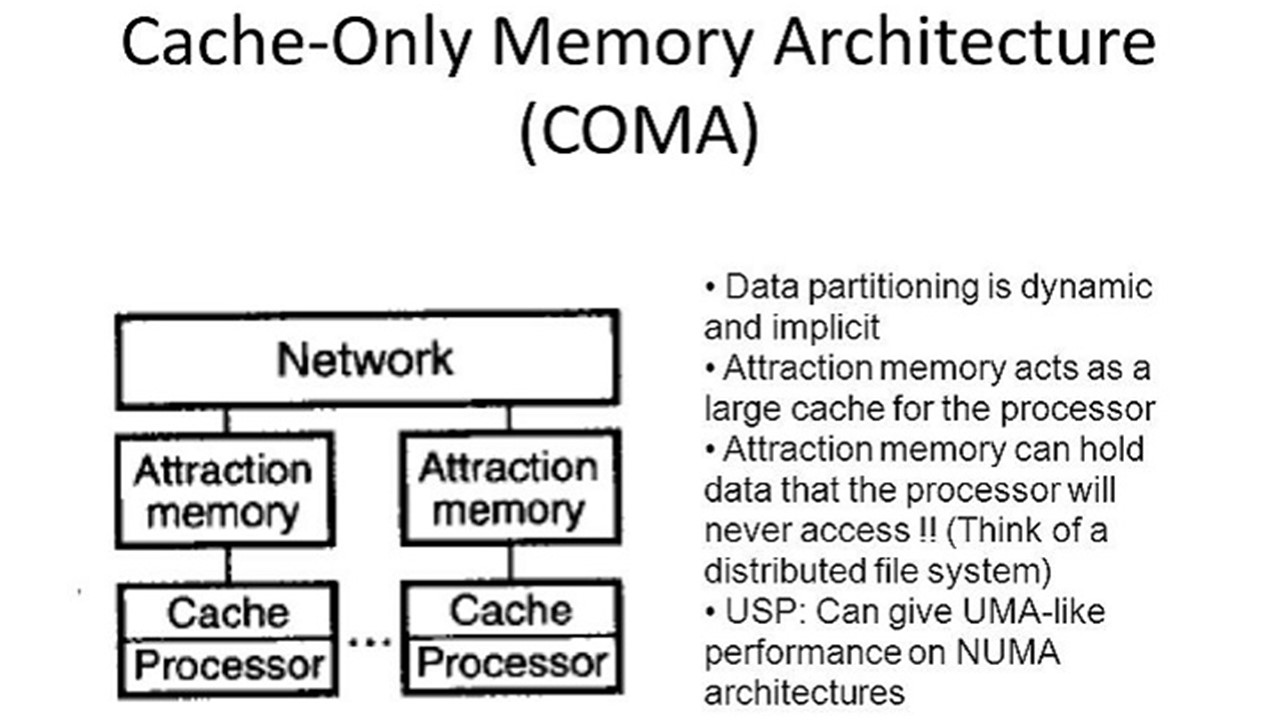

A COMA machine consists of a number of processing nodes connected through an interconnection network.

Each processing node contains:

- A cache

- A high performance processor and

- A portion of the global shared memory.

COMA machines remove main memory blocks from the local memory of the nodes.

Instead, they use only large caches as the node memories.

Together, these two features eliminate the static memory allocation problems of NUMA and CC-NUMA machines.

In a COMA machine there are only cache memories. There is no main memory, neither a distributed main memory as in NUMA and CC-NUMA machines nor a central shared memory as in UMA computers.

Use of Memory Resources

The Cache Only Memory Architecture uses memory resources more efficiently than NUMA.

In a NUMA organization, every address in the global address space is assigned a fixed home node.

When a processor accesses data, a copy is made in its local cache, but the space for that data remains allocated at the home node.

COMA, by contrast, has no home nodes.

An access from a remote node may cause the data to migrate to that node.

Compared with NUMA, this reduces the number of redundant copies and allows more productive use of the memory resources.
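
The difference in memory use can be illustrated with a toy model. The sketch below is a deliberate simplification and not a description of any real machine: `numa_access`, `coma_access` and `copies` are hypothetical helpers, each node's storage is a Python set, and a COMA access is modeled as a pure migration (real COMA hardware may also replicate data that is shared for reading).

```python
# Toy model: which nodes hold storage for block 'x' after a remote access.
# All names are illustrative and not taken from a real system.

def numa_access(home_node, requester, block, memory, caches):
    """NUMA: the block stays allocated at its home node; the requester caches a copy."""
    memory[home_node].add(block)      # space remains reserved at the home node
    if requester != home_node:
        caches[requester].add(block)  # extra copy in the requester's cache

def coma_access(requester, block, attraction_memory):
    """COMA (migration only): the block moves to the requester's attraction memory."""
    for blocks in attraction_memory.values():
        blocks.discard(block)         # no home node, so the old location is freed
    attraction_memory[requester].add(block)

def copies(*stores):
    """Count how many places currently hold a copy of block 'x'."""
    return sum('x' in blocks for store in stores for blocks in store.values())

nodes = [0, 1, 2]

numa_mem = {n: set() for n in nodes}
numa_cache = {n: set() for n in nodes}
numa_access(home_node=0, requester=2, block='x', memory=numa_mem, caches=numa_cache)

coma_am = {n: set() for n in nodes}
coma_am[0].add('x')                   # block starts on node 0
coma_access(requester=2, block='x', attraction_memory=coma_am)

numa_copies = copies(numa_mem, numa_cache)  # home copy plus cached copy
coma_copies = copies(coma_am)               # single migrated copy
print(numa_copies, coma_copies)
```

In this model the NUMA access leaves two copies of the block in the system, while the COMA access leaves one.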

Drawbacks

Like any architecture, COMA has drawbacks.

One of the most significant is finding a specific piece of data, since there is no home node to ask.

Data migration is usually implemented by the hardware memory coherence mechanism.

A second major problem arises when a local memory is full and data needs to migrate into it.

Bringing data into the local memory then requires evicting other data that already resides there.

But the evicted data has no ‘home’ to go back to.

Beyond these, there are three specific issues that COMA designs need to address:

- Block replacement

- Block localization and

- Memory overhead.

A large body of research has looked for ways to overcome these issues.

The approaches developed over time include:

- New migration policies

- Different forms of directories

- Policies for read-only copies and

- Policies for keeping free space in the local memories.

Hybrid NUMA-COMA organizations have also been proposed, such as Reactive NUMA.

In this scheme, pages start in NUMA mode and switch to COMA mode if and when that is appropriate.

A software-based hybrid NUMA-COMA system has also been proposed, which allows a shared-memory multiprocessor to be built out of a cluster of commodity nodes.

Representatives

Two machines are commonly cited as representatives of the Cache Only Memory Architecture:

Data Diffusion Machine

Commonly referred to as DDM, this is a tree-like, hierarchical multiprocessor.

The basic DDM architecture is a single-bus system containing a number of processor/attraction memory pairs.

All of these are connected to the DDM bus.

Each Attraction Memory consists of three main units:

- The controller

- The state and data memory unit and

- The output buffer.

The Data Diffusion Machine uses an asynchronous split-transaction bus.

This means that the bus is released between the request and the response of a transaction.

It also uses a write-invalidate snoopy-cache protocol, which limits broadcast requirements to smaller subsystems and adds support for block replacement.

KSR1

The KSR1, built by Kendall Square Research, is considered the first commercially available COMA machine.

It has a logically single address space, realized by a group of local caches and the ALLCACHE Engine.

The engine implements a sequentially consistent cache coherence algorithm.

This algorithm is based on a distributed directory scheme.

Conceptually, the directory is a matrix with a row for each subpage and a column for each local cache.

If the local cache corresponding to a matrix element does not hold a copy of the subpage, the element is empty.

In practice the matrix is very sparse, so it is stored in condensed form by omitting the empty elements.

Each non-empty element represents one of four states:

- Exclusive Owner or EO, which is the only valid copy in the entire system

- Non-exclusive Owner or NO, which marks the owning copy when several copies of the subpage exist in the system

- Copy or C, which means that at least two valid copies of the subpage exist in the system and

- Invalid or I, which marks a copy that exists in the local cache but is invalid and will not be used.
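
The sparse directory and its four states can be sketched as a dictionary keyed by (subpage, cache) pairs. This is only an illustration of the idea of storing a sparse matrix without its empty elements. The class name `SparseDirectory`, its methods, and the example entries are assumptions made for this sketch and do not reflect the actual ALLCACHE implementation.

```python
from enum import Enum

class State(Enum):
    EO = "exclusive owner"      # the only valid copy in the system
    NO = "non-exclusive owner"  # owner among several copies
    C = "copy"                  # one of at least two valid copies
    I = "invalid"               # present locally but must not be used

class SparseDirectory:
    """Conceptual (subpage x cache) matrix stored without its empty elements."""

    def __init__(self):
        self._entries = {}  # (subpage, cache_id) -> State

    def set(self, subpage, cache_id, state):
        self._entries[(subpage, cache_id)] = state

    def get(self, subpage, cache_id):
        # A missing key plays the role of an empty matrix element.
        return self._entries.get((subpage, cache_id))

    def holders(self, subpage):
        """Caches that hold a usable (non-invalid) copy of the subpage."""
        return sorted(cache for (sp, cache), state in self._entries.items()
                      if sp == subpage and state is not State.I)

directory = SparseDirectory()
directory.set(subpage=7, cache_id=0, state=State.NO)
directory.set(subpage=7, cache_id=3, state=State.C)
directory.set(subpage=7, cache_id=5, state=State.I)
directory.set(subpage=9, cache_id=1, state=State.EO)

print(directory.holders(7))   # caches 0 and 3 hold usable copies
print(directory.get(9, 2))    # None: empty matrix element
```

Only four entries are stored here, regardless of how many subpages and caches the conceptual matrix spans.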

The ALLCACHE Engine holds data in pages and subpages.

The page is the unit of memory allocation in the local caches and contains 16 Kbytes. The subpage is the unit of data transfer between the local caches and consists of 128 bytes.
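
The relationship between the two units is simple arithmetic, shown below. The sizes come from the text. The flat byte address and the helper `split_address` are assumptions for illustration and say nothing about how the KSR1 actually encodes addresses.

```python
PAGE_SIZE = 16 * 1024   # 16 Kbytes, unit of allocation (from the text)
SUBPAGE_SIZE = 128      # 128 bytes, unit of transfer (from the text)

SUBPAGES_PER_PAGE = PAGE_SIZE // SUBPAGE_SIZE

def split_address(addr):
    """Return (page number, subpage index within the page, byte offset within the subpage)."""
    page, in_page = divmod(addr, PAGE_SIZE)
    subpage, offset = divmod(in_page, SUBPAGE_SIZE)
    return page, subpage, offset

print(SUBPAGES_PER_PAGE)
print(split_address(16 * 1024 + 130))
```

Each page therefore holds 128 subpages, so a page can be allocated locally while only a few of its subpages have actually been transferred.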

Design and Working Process

The structure of the Cache Only Memory Architecture is similar to that of NUMA machines, where each processor holds a portion of the shared memory.

However, in COMA the shared memory consists of cache memory, as noted earlier, and the system migrates data to the processor that requests it.

The address space consists of all the caches, and there is no memory hierarchy.

There is, however, a cache directory, D, which allows remote access to the caches.

In COMA, the hardware transparently eliminates a class of remote memory accesses.

It does this by turning the memory modules into large DRAM caches.

These caches are called Attraction Memory, usually abbreviated as AM.

When a processor requests a block from a remote memory, the block is placed in both the AM of the node and the cache of the processor.

When a block needs space, another block can be evicted from the AM.

With this support, a processor dynamically attracts its working set into its local memory module.

Data that the processor is not currently accessing overflows and is sent to other memories.

Because an AM is much larger than a cache, it is better able to hold the current working set of the node, so a large share of cache misses are satisfied locally within the node.
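
The attract-and-overflow behavior can be sketched with a small cache model. The text does not say which replacement policy an AM uses, so the least-recently-used policy below is an assumption, as are the class name `AttractionMemory` and its `access` method. The evicted block is returned to the caller so that it can be sent to another memory.

```python
from collections import OrderedDict

class AttractionMemory:
    """A node-local AM modeled as a small LRU cache of block IDs."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()

    def access(self, block):
        """Return (hit, evicted_block); evicted_block is None if nothing overflowed."""
        if block in self.blocks:
            self.blocks.move_to_end(block)   # mark as most recently used
            return True, None
        evicted = None
        if len(self.blocks) >= self.capacity:
            evicted, _ = self.blocks.popitem(last=False)  # least recently used
        self.blocks[block] = True            # attract the block into the AM
        return False, evicted

am = AttractionMemory(capacity=2)
trace = [am.access(b) for b in ["a", "b", "a", "c"]]
print(trace)
print(list(am.blocks))
```

In this trace the second access to `a` hits locally, and bringing in `c` pushes out `b`, the block the processor has not used most recently.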

Alternative COMA Designs

The earlier Hierarchical Cache Only Memory Architecture designs suffered from significant latency.

A memory request often had to travel up the hierarchy and then back down in order to locate the necessary block.

This offset the potential benefits of COMA over regular NUMA.

It was therefore necessary to create alternative COMA designs that do not rely on the hierarchy to find a block. These alternative designs are:

- Flat COMA

- Simple COMA and

- Multiplexed Simple COMA.

Flat COMA

Flat COMA is a more recent design that uses a fixed-location directory to make locating a block easy. In this design, every memory block has a directory entry that resides in a particular node.

The memory blocks can migrate freely, but the directory entries cannot.

To find a memory block, the processor therefore interrogates the directory in the node that holds the entry for that block.

The directory always knows the location and the state of the block, so it can forward the request to the appropriate node.

Because Flat COMA does not rely on a hierarchy to find a block, it can use any high-speed network.

Since the directory is distributed and fixed across the nodes, a miss on a block in an AM sends a request to the node that holds the directory information for that block.

The directory then redirects the request to another node if its own node does not hold a copy of the block.
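
The lookup path described above can be sketched as follows. This is a toy model under stated assumptions: the directory node for a block is chosen by a simple modulo on the block number, which the text does not specify, and the names `FlatComaDirectory`, `directory_node`, `place` and `miss` are hypothetical.

```python
class FlatComaDirectory:
    """Directory entries live at a fixed node; the blocks themselves can move."""

    def __init__(self, num_nodes):
        self.num_nodes = num_nodes
        self.location = {}  # block -> node whose AM currently holds the block

    def directory_node(self, block):
        # Assumption: the entry's node is picked by a modulo on the block number.
        return block % self.num_nodes

    def place(self, block, node):
        self.location[block] = node

    def miss(self, block, requester):
        """Resolve an AM miss and return the list of nodes the request visits."""
        path = [requester]
        dir_node = self.directory_node(block)
        if dir_node != requester:
            path.append(dir_node)         # ask the fixed directory entry
        holder = self.location[block]
        if holder != path[-1]:
            path.append(holder)           # directory forwards to the holder
        self.location[block] = requester  # block migrates; the entry stays put
        return path

directory = FlatComaDirectory(num_nodes=4)
directory.place(block=6, node=3)

first_path = directory.miss(block=6, requester=0)
second_path = directory.miss(block=6, requester=1)
print(first_path, second_path)
```

Both requests pass through node 2, the fixed directory node for block 6 in this model, even though the block itself has moved from node 3 to node 0 in between.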

Simple COMA

Hierarchical COMA and Flat COMA designs implement the Attraction Memory block relocation and replacement mechanisms in hardware.

This makes the system complex.

To address this, a new variety of COMA design called Simple COMA was developed.

These machines transfer some of the complexity to software.

The common coherence actions, however, are still handled in hardware for performance reasons.

In this design, the operating system sets aside space in the Attraction Memory.

This space is reserved for incoming memory blocks at page granularity.

The local Memory Management Unit (MMU) contains mappings only for the pages in the local node, not for remote pages.

When a node accesses for the first time a shared page that already resides in a remote node, the processor suffers a page fault.

The operating system then allocates a page frame locally for the requested data.

After that, the hardware continues with the request: it locates a valid copy of the block and inserts it, in the correct state, into the newly allocated page in the Attraction Memory.

The rest of the page remains unused until future requests to other lines of the page start to fill it up.

Subsequent accesses to the block get their mapping directly from the MMU.

There are no AM address tags to check whether an access is made to the right block.

The physical addresses that identify a block in the Attraction Memory are set up independently by the MMU in each node.

As a result, two copies of the same block in two different nodes are likely to have different physical addresses.

Shared data therefore needs a global identity so that the different nodes can communicate about it.

For this purpose, each node has a translation table that converts local addresses into global identifiers and vice versa.
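
A per-node translation table of this kind can be sketched as a pair of dictionaries. Everything specific here is an assumption for illustration: the 4096-byte page size, the frame numbers, the global ID 42, and the names `TranslationTable`, `to_global` and `to_local`.

```python
PAGE_SIZE = 4096  # assumed page size for this sketch

class TranslationTable:
    """Per-node two-way map between local page frames and global page IDs."""

    def __init__(self):
        self.local_to_global = {}
        self.global_to_local = {}

    def map(self, local_frame, global_id):
        self.local_to_global[local_frame] = global_id
        self.global_to_local[global_id] = local_frame

def to_global(table, local_addr):
    """Convert a node-local physical address into (global page ID, offset)."""
    frame, offset = divmod(local_addr, PAGE_SIZE)
    return table.local_to_global[frame], offset

def to_local(table, global_id, offset):
    """Convert (global page ID, offset) into this node's physical address."""
    return table.global_to_local[global_id] * PAGE_SIZE + offset

# The same shared page sits in different frames on two nodes.
node_a, node_b = TranslationTable(), TranslationTable()
node_a.map(local_frame=5, global_id=42)
node_b.map(local_frame=9, global_id=42)

gid, off = to_global(node_a, 5 * PAGE_SIZE + 64)
addr_on_b = to_local(node_b, gid, off)
print(gid, off, addr_on_b)
```

Node A and node B use different physical addresses for the same data, and only the global identifier travels between them.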

Multiplexed Simple COMA

Simple COMA machines, however, have a significant problem: memory fragmentation.

They set aside memory space in page-sized pieces even if only a single block of each page is present.

This inflates the working sets of the programs in the Attraction Memory.

That, in turn, causes frequent page replacements, which result in high operating system overhead and lower system performance.

The Multiplexed Simple COMA, often written MS-COMA, alleviates this issue.

It is a variation of Simple COMA that allows several virtual pages in a node to be mapped to the same physical page at the same time.

This mapping works because not all of the blocks of a virtual page are in use at the same time.

A physical page can then contain blocks belonging to different virtual pages, provided each block carries a virtual page ID.

However, if two blocks belong to different pages and have the same page offset, one displaces the other from the Attraction Memory.

The overall result is a compression of the working set of the application.
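
The multiplexing idea can be sketched as a physical page whose slots are each tagged with a virtual page ID. The four-block page, the class name `MultiplexedPage`, and its methods are assumptions chosen to keep the example small.

```python
BLOCKS_PER_PAGE = 4  # assumed, kept tiny for readability

class MultiplexedPage:
    """One physical page frame shared by several virtual pages."""

    def __init__(self):
        # Each slot holds (virtual_page_id, data) or None.
        self.slots = [None] * BLOCKS_PER_PAGE

    def insert(self, vpage_id, offset, data):
        """Store a block; return the virtual page ID it displaced, if any."""
        old = self.slots[offset]
        self.slots[offset] = (vpage_id, data)
        if old is not None and old[0] != vpage_id:
            return old[0]
        return None

    def lookup(self, vpage_id, offset):
        """Return the data only if the slot's tag matches the virtual page ID."""
        entry = self.slots[offset]
        if entry is not None and entry[0] == vpage_id:
            return entry[1]
        return None

frame = MultiplexedPage()
frame.insert(vpage_id=1, offset=0, data="A0")
frame.insert(vpage_id=2, offset=3, data="B3")              # different offset: coexists
displaced = frame.insert(vpage_id=2, offset=0, data="B0")  # same offset: conflict

print(displaced, frame.lookup(1, 0), frame.lookup(2, 0), frame.lookup(2, 3))
```

Blocks from virtual pages 1 and 2 share the frame as long as their offsets differ. When both need offset 0, the block from page 1 is displaced.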

Block Replacement

As noted earlier, blocks migrate in COMA, but a block has no predetermined backup location to which it can be written when it is replaced from the Attraction Memory.

Even an unmodified AM block can be the only copy of that memory block in the system.

It must therefore not be overwritten during an AM replacement.

For this reason, the system has to keep track of the last copy of each memory block.

Consequently, when a modified or otherwise unique block is displaced from an Attraction Memory, it must be relocated to another AM.
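
The last-copy rule can be sketched as a small eviction routine. The bookkeeping structures (`holders`, `free_slots`) and the choice of the lowest-numbered node with free space as the relocation target are assumptions for illustration. Real designs use more elaborate policies.

```python
def evict(block, node, holders, free_slots):
    """Evict `block` from `node`.

    holders:    dict mapping block -> set of nodes whose AM holds a copy
    free_slots: dict mapping node -> number of free AM slots
    Returns the node that received the block, or None if it was simply dropped.
    """
    holders[block].discard(node)
    free_slots[node] += 1
    if holders[block]:
        return None                  # other copies exist; safe to drop this one
    # Last copy in the system: it must be relocated, never overwritten.
    for target, free in sorted(free_slots.items()):
        if target != node and free > 0:
            holders[block].add(target)
            free_slots[target] -= 1
            return target
    raise RuntimeError("no attraction memory has room for the last copy")

holders = {"x": {0, 1}, "y": {0}}
free_slots = {0: 0, 1: 0, 2: 1}

dropped = evict("x", 0, holders, free_slots)    # node 1 still has a copy of x
relocated = evict("y", 0, holders, free_slots)  # y was the last copy
print(dropped, relocated, holders)
```

Block `x` is dropped because node 1 still holds it, while block `y` is moved to node 2 because node 0 held the only copy.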

Memory Overhead

In a NUMA machine, all memory is typically allocated to operating system or application pages.

In COMA machines, however, part of the memory is left unallocated.

This free space lets data migrate to the AMs of the referencing nodes with much less block relocation traffic.

It also makes room for replicating shared blocks across the Attraction Memories.

Without any free space, every block moved into an Attraction Memory would force another block to be relocated.

How much space is left free is captured by the memory pressure.

Memory pressure is the ratio between the application size and the total size of the Attraction Memories.

If the memory pressure is 60%, then 40% of the AM space is available for data replication.

As memory pressure increases, both the relocation traffic and the number of AM misses increase.

Choosing the memory pressure for a given memory size is therefore a trade-off between relocation traffic, the effect on page faults, and Attraction Memory misses.
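
The 60%/40% example can be worked through in a few lines. The sketch takes memory pressure to be the application size divided by the total Attraction Memory size, which is the reading consistent with that example. The sizes in megabytes are made up for illustration.

```python
def memory_pressure(application_size, total_am_size):
    """Fraction of the total attraction memory occupied by application data."""
    return application_size / total_am_size

def replication_space(application_size, total_am_size):
    """Fraction of the attraction memory left over for replication."""
    return 1.0 - memory_pressure(application_size, total_am_size)

# Hypothetical sizes: a 600 MB application on 1000 MB of total AM.
pressure = memory_pressure(600, 1000)
free_fraction = replication_space(600, 1000)
print(f"pressure {pressure:.0%}, free for replication {free_fraction:.0%}")
```

Raising the application size to 900 MB on the same machine would push the pressure to 90% and leave only 10% of the AM space for replicas.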

Performance Issues

Even with all the developments in the design and features of COMA machines, some performance issues remain.

To understand them, it is necessary to compare the different COMA machines with other scalable shared-memory systems.

Issues with Flat COMA

Comparing Flat COMA directly with a conventional NUMA system is unfair because of the memory overhead in Flat COMA.

A fairer comparison is with NUMA-RC, a NUMA system that includes a large DRAM Level 3 cache in each of its nodes.

This Level 3 cache is called the RC or Remote Cache because it holds remote data. It is also referred to as a network or cluster cache.

A large RC increases the fraction of accesses that are satisfied locally.

This is because it intercepts requests that would otherwise have gone to a remote node.

For the comparison, NUMA-RC and Flat COMA are given the same amount of memory overhead per node: in NUMA-RC it is the remote cache, and in Flat COMA it is the additional memory beyond what the application data needs.

One difference is that Flat COMA requires block tags for the whole Attraction Memory, whereas NUMA-RC needs block tags only for the remote cache.

Assuming the memory access time is the same for both machines, the performance difference comes from the misses that the local memory hierarchy of a node cannot satisfy.

These misses fall into three categories:

- Cold misses, which are accesses by the node to blocks that it has never accessed before

- Coherence misses, which are accesses to blocks that the coherence mechanism has invalidated and

- Conflict misses, which are accesses to blocks that were displaced from the local memory hierarchy due to overflow.
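
The three categories can be told apart with two pieces of bookkeeping per node, as the sketch below shows. The function `classify_miss` and the two sets it takes are hypothetical. A real simulator would track this state per block inside the memory hierarchy model.

```python
def classify_miss(block, seen_before, invalidated):
    """Classify a miss in a node's local memory hierarchy.

    seen_before: set of blocks this node has accessed at some point
    invalidated: set of blocks removed from this node by the coherence mechanism
    """
    if block not in seen_before:
        return "cold"
    if block in invalidated:
        return "coherence"
    return "conflict"   # previously held, not invalidated, so displaced by overflow

seen = {"a", "b", "c"}
invalid = {"b"}
kinds = [classify_miss(block, seen, invalid) for block in ["z", "b", "c"]]
print(kinds)
```

Block `z` was never touched before, `b` was invalidated by another node, and `c` was simply pushed out for lack of space.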

Of these, the cold and coherence misses are more or less the same for Flat COMA, NUMA and NUMA-RC machines for a given application.

The number of conflict misses, however, varies across the architectures, depending on how many of them the local memory hierarchy can absorb.

If the remote working set overflows the secondary cache but fits into the memory overhead, the number of conflict misses is likely to be high in NUMA machines and low in Flat COMA and NUMA-RC machines.

If the remote working set also overflows the memory overhead, NUMA machines still have the highest number of conflict misses.

In that case, depending on the data access pattern, NUMA-RC machines may have fewer or more conflict misses than Flat COMA machines.

The behavior also depends on whether data structures are replicated or migratory.

Replicated data structures occupy space in the Attraction Memories of a Flat COMA machine and in the remote caches of a NUMA-RC machine.

A migratory data structure, by contrast, needs to exist in only one AM at a time.

This means that the share of migratory data in a working set influences the number of conflict misses in a Flat COMA machine.

Only the replicated data has to fit into the memory overhead, since migratory data does not consume any additional memory.

The space it takes up in one Attraction Memory is freed in another.

The processor stall time per miss also affects the performance of a Flat COMA machine, because the average cost of a miss is higher.

There are several reasons for this:

- Remote cold and conflict misses cost more in these machines

- Satisfying these accesses can take additional hops, since the Attraction Memory in a node relocates blocks in order to free up space

- Remote coherence accesses also cost more, because dirty data is displaced less frequently from the AMs, which are quite large and

- The average cost of a memory access is higher as a result.

Flat COMA makes less effective use of the memory overhead space for replicated data and therefore experiences more conflict misses on such data.

For migratory data, the migration behavior determines how many misses Flat COMA saves.

The savings are comparatively high when a processor accesses the data structure several times before giving it up to another processor.

This pattern is called data reuse migration.

If the data changes hands frequently, the savings shrink, since Flat COMA cannot eliminate the misses in the transitory phase.

Issues with Simple COMA

As noted earlier, a Simple COMA machine sets aside a whole page of Attraction Memory space even when a miss brings in only a single block, whereas a Flat COMA machine allocates space only for the missed block.

This can result in memory fragmentation and degrade overall performance.

The degradation comes mainly from the page management overhead of the operating system.

The Multiplexed Simple COMA machine resolves this issue by multiplexing several virtual pages into a single physical page at the same time.

This compresses the working set.

Another issue concerns spatial locality at the page level.

This is the fraction of each page that is actually being used. There are two possible scenarios. In the first, spatial locality is so low that the page working set is larger than the local memory.

The second scenario covers all other situations.

Simple COMA machines are less vulnerable to block conflicts than Flat COMA or NUMA-RC machines, even though such conflicts are already few in those machines.

Block conflicts do not affect the performance of Simple COMA machines because the same page can be allocated in different nodes at different physical addresses.

Simple COMA machines perform poorly only when spatial locality at the page level is so low that the page working set overflows the local memory.

The cost in that case is processor time rather than memory.

The operating system has to allocate and deallocate pages frequently.

Taken together, the Cache Only Memory Architecture still has open issues in several areas.

Its benefit is transparent, fine-grain migration and replication of data, since it adapts dynamically to the reference patterns of each application.

However, given future technology, application, and architectural trends, its potential as a viable alternative may be significantly limited.

One major factor is the anticipated increase in the relative cost of remote memory accesses.

As that cost grows, reducing the fraction of remote memory accesses becomes more important.

In addition, data migration and replication need to be supported in a way that is transparent to the programmer.

At the same time, the cache coherence protocol should be kept as simple as possible to keep the design manageable.

Larger and more sophisticated remote caches can capture a larger remote working set, possibly without any COMA support at all.

This suggests that hybrid machines, which combine Cache Only Memory Architecture with the relative simplicity of NUMA-RC, are the most likely to become the favored design in the future.

Conclusion

Cache Only Memory Architecture is complex, but the aspects covered in this article should make its design and its intricacies easier to follow.

In particular, the difference between COMA and NUMA should now be clear: in COMA the local memory of each node acts as a cache, and data has no fixed home node.