In This Article

What is Direct Mapped Cache?

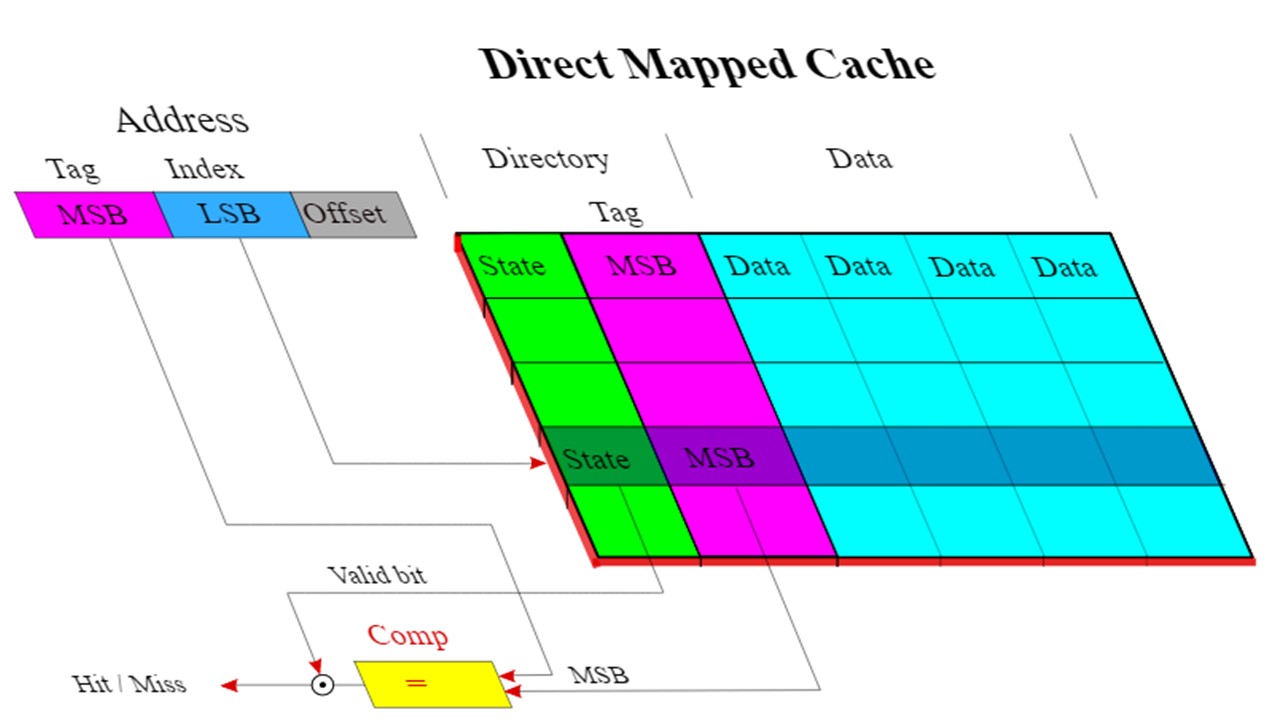

Direct mapped cache refers to the simplest form of cache memory. Here, the physical address is divided into tag and line number and block or line offset where the first two form the block number.

In this type of cache every memory block maps one specific line and only the tag field is matched when a word is searched.

KEY TAKEAWAYS

- There are three particular bits of address in a direct mapped cache namely the tag bit, line bit and word bit.

- In a direct mapped cache the hit latency is the same as the total comparator latency and multiplexer latency.

- The significant advantages of direct mapped cache include its simple operation, ease in execution, fast speed, power efficiency, simple placement, and cheap hardware requirement.

- One significant downside of direct mapped cache is that it may result in cache thrashing or two memory blocks may swap continually.

Understanding Direct Mapped Cache

Direct mapped cache is the most straightforward way to implement cache.

Actually, in a direct mapped cache, the address is split into three basic parts such as:

- Tag bits, denoted by the letter t

- Line bits, denoted by the letter l and

- Word bits, denoted by the letter w.

All these particular divisions have specific significance depending on the functions they perform, as mentioned below.

Out of these, the word bit indicates the particular word in a block memory and is considered to have minimum significance.

The line bits are up next in the order of significance and it represents the particular line in the cache where the block is stored.

And, finally, all other bits are stored as the tag along with the block which actually finds out the position of the block in the main memory.

In the process of direct cache mapping, each memory block of the main memory of the system is assigned to a specific line in the cache.

When this line is filled with a memory block, or if it is already filled, it needs a new block to be loaded.

The old block, in such situations, is relinquished from the cache.

Bits are usually taken from the address of the main memory just as it is done while locating a word inside a block in order to describe the particular line where the block is to be stored in the cache.

This means that in a direct mapped cache, every individual location in the main memory typically maps to one particular location within the cache.

This also means that there will be quite a large number of addresses that will map to that particular location in the cache.

This is mainly because the size of the main memory of the system is much bigger in comparison to the size of the cache.

The job of the cache controller in a direct mapped cache is very important and it usually plays a dual role such as:

- It chooses a word in the cache line by using two bits of the address as the offset and

- It also chooses one among all of the available lines once again by using two bits of the address as the index.

The rest of the bits of the address are stored as tag values.

Implementation

Direct mapping method is used in the direct mapped cache and it follows different steps to implement it. Here they are:

- In the first step, each of the multiplexers read the specific line number that is generated from the physical address with the help of the select lines in parallel. This means that, if the multiplexer needs to read a line number that has a total of L bits, for example, then every multiplexer also should have an equal amount of total number of select lines.

- In the second step, when the multiplexer is done with reading the line number, all of the multiplexers now must go to the line that is corresponding in the cache memory by using the parallel input lines of it. In that case, every multiplexer should have a total number of input lines that are equal to the total number of lines that are available in the memory of that particular cache.

- In the third step, the multiplexer produces a tag bit that it has selected from the cache to the comparator. This is however done by using the output line. Now, in this case, the total number of output lines available to each of the multiplexers should be equal to 1.

- In the fourth and last step, the comparator compares the tag determined and sent by the multiplexers together with the tag of the address that has been produced. However, for the sake of comparison, only one of the comparators is required wherein the size of the comparator is equal to the total number of bits in the tag. If the two tags match, there will be a cache hit or else a cache miss will occur if they do not match.

At this point, there are a few specific things that you should surely take note of.

For example, a multiplexer is able to output only a single bit on the line of output, and therefore, in order to yield an entire tag to the comparator, the total number of required multiplexers should be equal to the total number of bits that are available in that particular tag.

It is also required to keep in mind that the configuration of each of the multiplexers should enable it to read the available tag bit at a particular location.

This means that when a specific configuration of the first multiplexer is intended to yield the first bit of the tag, the configuration of the second multiplexer should be such that it produces the second bit of the tag, and so on.

As a consequence, every single multiplexer will then be configured to select the tag bit of the desired line as it is intended to and produce the output on the line.

It will then be possible for the multiplexer to send the whole tag to the comparator so that it can perform the evaluation in parallel.

Vital Results

Here are a few significant results for a direct mapped cache.

Hit latency indicates the total time taken to find out whether or not the desired word exists in the cache memory.

Typically, for a direct mapped cache, this hit latency is equal to the sum of the comparator latency and the multiplexer latency.

And, a particular block of the main memory can map to mod lines in the cache.

Based on these parameters some other results of a direct mapped cache are:

- The total number of desired multiplexers is equal to the total number of lines existing in the preferred cache.

- The total number of necessary comparators is equal to 1.

- The size of the comparator is equal to the total number of bits available in the tag.

And, depending on the address of the memory block, it can occupy only one single cache line. This cache can be outlined as an n*1 column matrix.

Placing a Block

In order to place a block within the cache, a particular set has to be determined based on the index bits that are obtained specifically from the address of the memory block.

This memory block is then placed usually in the identified set.

On the other hand, the tag is stored in the specific tag field that is related to the set.

However, it may so happen that the tag line is already occupied beforehand. In that case that specific memory block is replaced by the new data, as said earlier.

In order to search a particular word within the direct mapped cache, it is the set that needs to be recognized in the first place.

This can be done by using the index bits of the memory block address.

The tag bits obtained for this memory block address are then weighed against the specific tag bits that are related to the set.

If there is a match between the two tags, there will be a cache hit and in that case the cache block will be returned to the CPU.

On the other hand, if there is a cache miss, the memory block will be obtained from the lower memory.

Design of Direct Mapped Cache

Typically, the structure of a typical direct mapped cache is arranged in several sets and there is one single cache line in each of these sets.

This structure helps in direct mapping, a process that involves mapping a specific block of main memory of the system with only one specific line in the cache.

This line number of the cache that is mapped with the specific block is given by:

Cache line number = (Main Memory Block Address) Modulo (Number of lines in Cache).

In a direct mapped cache, the physical address is separated as follows:

- Tag line + number, which forms the block number and

- Block or line offset.

The structure of a direct mapped cache will be very clear to you if you consider this following example.

Assume that the main memory of the system is 16 Kilobytes (KB) that is arranged in four blocks of 4 bytes each and there is a direct mapped cache that is of 256 bytes and also comes with a block size of 4 bytes.

This will mean that it will need 14 bits at the least to signify the memory address exclusively because the size of the main memory is 16 KB.

Also, the total number of sets in the cache will be 256/4 or 16 sets because every cache block measures 4 bytes.

Now, considering the incoming address to the cache, it is divided into bits to obtain the Offset, Index and Tag, where each of them has different uses as follows:

- Offset – This refers to the bits that are used to find out the byte that needs to be accessed from the cache line. Typically, in the above example, there will be two offset bits since the cache lines are 4 bytes in size.

- Index – This refers to the bits that are used to find out the set of the cache. As it is in the above examples, there are a total 64 sets in the cache and since 2^6 = 64, there will be 6 index bits.

- Tag – This refers to the bits that remain which are 14 – (6+2) = 6 tag bits. These tag bits are stored in the tag field so that it matches with the address during a cache request.

Depending on these parameters, it will be determined which memory addresses will map to which particular line in the cache.

Direct Mapped Cache Formula

As said earlier, direct mapped cache is based on the simplest technique called direct mapping in which each block of the main memory of the system is mapped to only one single cache line.

Typically, the performance of the direct mapped cache will depend on the hit ratio, which it is directly proportional to.

This is given by the formula:

i = j modulo m.

Here, ‘i’ indicates the cache line number, ‘j’ indicates the main memory block number and ‘m’ represents the number of lines in the particular cache.

When a cache access is required to be made, each main memory address will consist of three fields.

Here the least significant is w bits that signify a unique byte or word in the block of the main memory of the system.

In most modern machines, you will find the address typically at the byte level.

As for the remaining s bits, these identify one of the 2 s blocks of the main memory and these s bits are interpreted by the cache logic as a tag of s-r bits. This is however considered to be the most significant part.

There will also be a line field of r bits which represents one of the m=2r lines in the cache.

Issues with Direct Mapped Cache

One of the most significant issues with the direct mapped cache is that every block of the main memory of the system is able to map only to a preset location within the cache.

This means that there will be cache thrashing, which refers to the process of two memory blocks swapping continually, if these two blocks map to the similar location in the cache and are referenced continually.

Another significant demerit of the direct mapped cache is that it is pretty more expensive in comparison to a set associative or a fully associative cache mapping.

Also, it needs greater access time in comparison to any other mapping methods.

Direct mapped cache is also not very flexible. Assuming that a specific program uses addresses like 10, 110…, then there will be a cache miss with every access and it will create a load into the cache block.

If a cache comes with 4 blocks, then direct mapping will not allow using all of those blocks which will result in much more cache miss than you would expect.

Therefore, direct mapped caches usually have a much lower hit rate which is primary due to the fact that in the set there is only one single cache line available.

This means that this set is replaced each time a new memory is referenced to it. This results in a lot of conflict misses.

Mapping the main memory to a fixed location may also result in repeated eviction in the cache line even if there are several empty blocks available in it that are either not being used or are not used very frequently.

Once again, this is due to the fact that several memory addresses will collide against the same memory block.

How Does Direct Mapped Cache Work?

The direct mapped cache is more like a table with rows and columns.

There are at least two columns in it.

One of the columns contains the data and the other one is dedicated for the tags. And, the rows signify the cache line.

The working process of the direct mapped cache involves a read admittance to the cache.

This takes the central portion of the address and is called the index. It is usually used as the row number.

At the same time, both the tag and the data are also looked up.

In the next step, the tag and the upper part of the address are compared in order to find out whether or not the line is valid and whether or not it comes from the same address range in the memory.

As for the lower part of the address, it may be used at the same time to select the data requested from the cache line, assuming that the cache line is capable of holding data for quite a few words.

During the entire working process of the direct mapped cache, data and tag access as well as the comparison is the key.

This is because it reduces the latency which is the whole idea of having a cache in the first place.

Now, the design and working process of the direct mapped cache ensures that there is only one single place in the cache where each read address can be and the data can be cached.

This leads to a significant disadvantage because there will be other addresses as well that may be mapped to that particular place.

This will eventually result in a competition with each trying to win the cache line.

It is for this reason direct mapped cache uses different types of algorithms in order to find out whether or not the data is present in the cache.

Ideally, the procedure of direct mapping ensures that only a specific block in the main memory of the system is able to map to only a specific line in the cache.

This means that the specific line number in the cache needs to be determined in the first place so that any given block can map to it.

This is what actually happens in a direct mapped cache after the Central Processing Unit or the CPU makes a memory request:

- The line number field of the address is used to access a specific line of the given cache

- The tag field of the address of the CPU is compared with the tag of the line and then

- It is stored in the cache.

Here, two things can happen. One, there can be a cache hit, which means that the two tags match with each other.

In that case, the preferred word will be found in that particular cache right away.

On the other hand, if the two tags do not match with each other, it will result in a cache miss.

Now, in that case, the desired word will have to be brought in from the main memory of the computer system.

It is only then that it will be stored in the cache together with the new tag that has replaced the old one.

What Are the Advantages of Direct Mapped Cache?

One of the major advantages of direct mapped cache is the simplicity of its operation as well as the ease in its implementation.

This means that the offsets and indices can be calculated with simple arithmetic or bit operators.

This is due to the fact that every memory address belongs to exactly one specific block memory address.

It is also the fastest, once again due to the fact that it needs only the tag field to be matched while searching a particular word.

Other significant advantages of a direct mapped cache are:

- It offers a more power efficient yet simple placement policy

- It does not needs search all of the cache lines available and

- It needs cheap hardware because only one tag is needed to be checked at a time.

And, direct mapped cache is also less expensive as compared to fully associative or set associative caches since it needs only one multiplexer and comparator.

Is Direct Mapped Cache Faster?

Yes, as said earlier, direct mapped cache is faster due to its simple design and working process that needs only one multiplexer and one comparator.

As for any given address, it is pretty quick to identify only one entry in the cache rather than several ones to find where that address could be.

However, it also causes some issues, as discussed earlier.

How Many Sets Does a Direct Mapped Cache Have?

It is the working process that usually determines the number of sets a direct mapped cache may have.

Since every address can map only to one particular location in the cache, the number of sets in it therefore corresponds to the size of the cache itself.

For example, if word addressing is used and there are 9 or 10 bits meant for the index + tag, it can be figured as:

- 9 bits -> 2^9 sets and

- 10 bits -> 2^10 sets.

In the case of direct mapped cache, the amount of blocks will be equivalent to 1.

The number of sets in this case can be determined by the formula: (Size of cache) / (Number of blocks)

Why are Tag Bits Needed in Direct Mapped Cache?

The primary need to have tag bits in the direct mapped cache is that it will supply the remaining address bits to it that will help in distinguishing between the different locations of the memory that map to the matching cache block.

How Many Tag Bits are Needed in Direct Mapped Cache?

Well, the number may vary according to the size of the main memory and therefore it is good to know how it is calculated.

For example, if you have a cache line of 4 words, an address as well as a word size of 32, and the bytes are addressable, this will make 16 bytes of cache line.

This means that the last part of the address is not needed to be carried in the cache tag.

This is because it can be constructed from the location of the byte. Therefore, this will use only 28 bits as the tag and save the 4 bits.

On the other hand, the number of bits required for storing the addresses of the blocks or individual lines in a tiny cache that can hold about 16 kilobits of data would be 2 kilobytes or 512 words since 32 bit word size is equal to 4 bytes.

And, if the size of the cache line is 4 words, this will indicate 512/4 or 128 lines in the cache.

These many lines in a direct mapped cache will use only 7 low order bits of the 32 bit address.

This means that the rest of the other 25 high order bits of the block address are to be stored in each line.

It will need 128*25 or 640 bits of address storage data over and above the 16 KiB of data. This will add up to a total of 17024 bits in the cache.

And, for a cache size of 16 Kilobytes, things will change dramatically and as such with higher values.

How Many Total Bits are Needed for a Direct Mapped Cache?

Once again, things will vary depending on the size and other parameters of the direct mapped cache. Still, here is how it should be calculated.

Assuming that it is a cache with 16 KB of data stored in 4-word blocks and it has a 32-bit address, the total bits will be the same as the address bits, i.e. 32 bits.

As you may know already that in the direct mapped cache the address generated by the Central processing Unit is divided into tag field, line field and word offset, here is the calculation for the total number of bits required for this type of direct mapped cache.

Assuming that the data memory size of cache is 16 KB and it is byte addressable where 1 word size is equal to 1 B, the number of cache lines can be calculated as:

Number of cache lines = Data memory size of cache / Data size of 1 cache line.

This is equal to 16 KB / 4 B or 212 lines.

So, the number of bits required to correspond to the cache line will be equal to log2 (212) or 12 bits.

In the same way, the number of bits required to correspond to a word in a line will be equal to log2 4 or 2 bits.

And, the number of bits required to correspond to the tag will be equal to (32 – 12 – 2) which is 18 bits.

Therefore, for a given line, 1 tag is related to one line in the case of direct mapping. So, the size of the tag memory will be the product of the number of tag bits and the number of lines which is in this case 18 * 212 bits or 72 K bits.

On the other hand, the size of the data memory, as said earlier, is 16 KB or 128 K bits.

With these figures the total memory needed for the cache will be 128 K bits + 72 K bits which is equal to 200 K bits.

The results will be different for, say a memory size of 2048 bytes with a total cache size of 64 bytes and a total of 8 blocks and so on and so forth.

Conclusion

Therefore, as you can see from the article, though direct mapped cache is considered to be the simplest form of mapping a cache, there are lots of technicalities involved in it.

It is mainly due to the direct mapping tactic but there are also other reasons to it, which are now known to you.