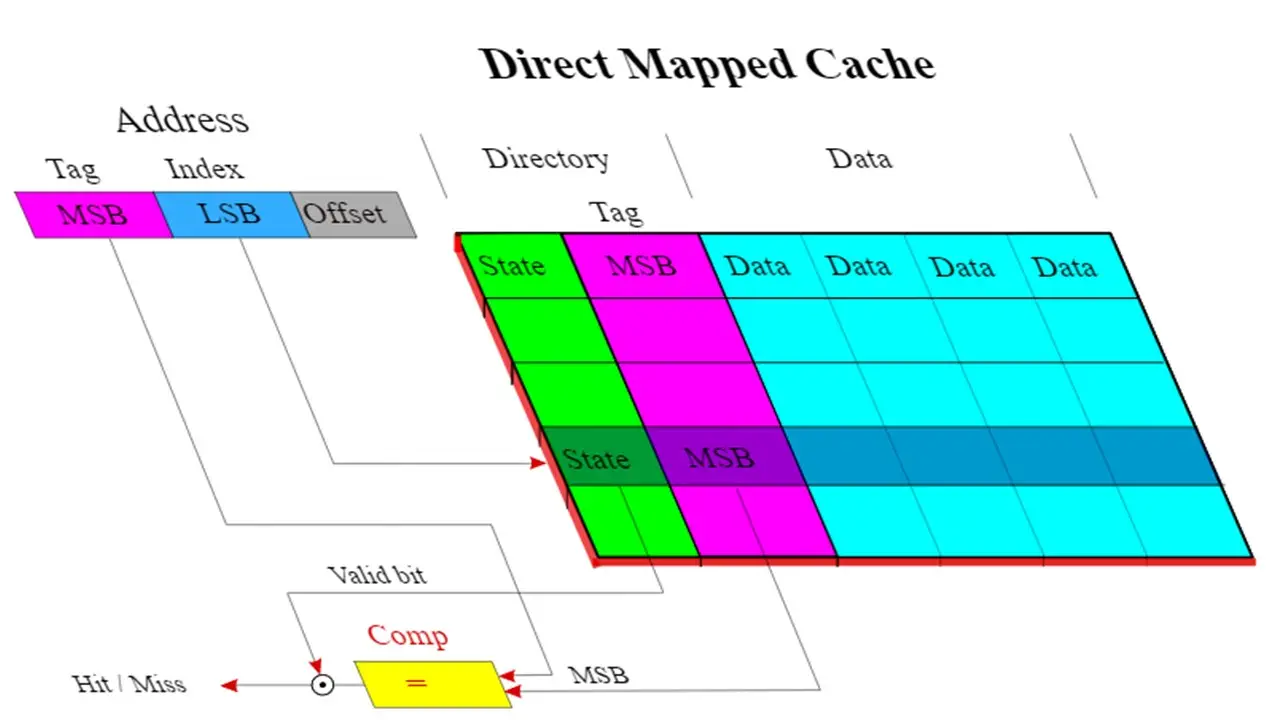

Direct mapped cache is the simplest form of cache memory. It divides the physical address into three parts: tag, line number, and block offset. The tag and line number together form the block number. In this cache type, each memory block maps to one specific line, and only the tag field is matched when searching for a word.

Understanding Direct Mapped Cache

Direct mapped cache is straightforward to implement. The address is split into three key parts:

- Tag bits (t)

- Line bits (l)

- Word bits (w)

Each part plays a specific role:

- Word bits: Indicate the particular word in a memory block (least significant)

- Line bits: Represent the cache line where the block is stored

- Tag bits: Stored with the block to identify its position in main memory (most significant)

In direct mapping, each main memory block is assigned to one specific cache line. If that line is already occupied when a new block arrives, the old block is evicted from the cache.

The cache controller in a direct mapped cache performs two crucial functions:

- Selects a word in the cache line using the low-order address bits as the offset (two bits for a four-word line)

- Chooses one of the available lines using the next address bits as the index (two bits for a four-line cache)

The remaining address bits are stored as tag values.
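The field split described above can be sketched with a few bit operations. This is a minimal illustration, not a hardware description: the function name `split_address` and the 8-bit sample address are assumptions made for the example, and the 2-bit offset and 2-bit index are example widths only.

```python
def split_address(address: int, line_bits: int, word_bits: int) -> tuple[int, int, int]:
    """Split an address into its (tag, line, word) fields."""
    # Word bits are the least significant bits of the address.
    word = address & ((1 << word_bits) - 1)
    # Line bits sit directly above the word bits.
    line = (address >> word_bits) & ((1 << line_bits) - 1)
    # Everything above the line bits is the tag.
    tag = address >> (word_bits + line_bits)
    return tag, line, word


# Hypothetical 8-bit address: tag = 1011, line = 01, word = 10
tag, line, word = split_address(0b1011_01_10, line_bits=2, word_bits=2)
print(tag, line, word)  # 11 1 2
```

Widening `line_bits` or `word_bits` shrinks the tag by the same amount, since the three fields always add up to the full address width.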

Implementation

Direct mapped cache implementation follows these steps:

- The line number from the physical address is applied to the select lines of all multiplexers in parallel

- Each multiplexer receives one tag bit from every cache line on its parallel input lines

- Each multiplexer sends the tag bit of the selected line to the comparator

- The comparator compares the multiplexer-selected tag with the tag taken from the address

Key points to remember:

- The number of multiplexers equals the number of tag bits

- Each multiplexer is configured to select a specific tag bit

- The entire tag is sent to the comparator for parallel evaluation

Vital Results

For a direct mapped cache:

- Hit latency = Comparator latency + Multiplexer latency

- Multiplexer size = Number of cache lines x 1 (one multiplexer per tag bit)

- Number of comparators = 1

- Comparator size = Number of tag bits

A memory block can occupy only one cache line based on its address. The cache can be represented as an n*1 column matrix.

Placing a Block

To place a block in the cache:

- Determine the set using index bits from the memory block address

- Place the memory block in the identified set

- Store the tag in the related tag field

If the cache line is already occupied, the existing memory block is replaced with the new one.

To search for a word:

- Identify the set using index bits

- Compare tag bits from the memory block address with the set's tag bits

- If tags match, return the cache block to the CPU (cache hit)

- If tags don't match, retrieve the memory block from lower memory (cache miss)
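The placement and search steps can be combined into a small software model. This is a sketch under simplifying assumptions: the class name `DirectMappedCache` and its `access` method are hypothetical, only tags are stored (no data), and an empty line is marked with `None` instead of a valid bit.

```python
class DirectMappedCache:
    """Toy direct mapped cache that tracks tags only (no data)."""

    def __init__(self, num_lines: int, block_size: int):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines  # None marks an empty line

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, place the block and return False."""
        block = address // self.block_size   # memory block number
        index = block % self.num_lines       # the one line this block may use
        tag = block // self.num_lines        # remaining high-order bits
        if self.tags[index] == tag:
            return True                      # tags match: cache hit
        self.tags[index] = tag               # miss: replace whatever was there
        return False


cache = DirectMappedCache(num_lines=64, block_size=4)
results = [cache.access(a) for a in (0, 1, 256, 0)]
print(results)  # [False, True, False, False]
```

Addresses 0 and 1 share a block, so the second access hits. Address 256 maps to the same line with a different tag, so it evicts that block, and the final access to address 0 misses again.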

Design of Direct Mapped Cache

A typical direct mapped cache is arranged in sets, with one cache line per set. The cache line number is determined by:

Cache line number = (Main Memory Block Address) Modulo (Number of lines in Cache)

The physical address is separated into:

- Tag + line number (block number)

- Block or line offset

Example:

- Main memory: 16 KB (4K blocks of 4 bytes each)

- Direct mapped cache: 256 bytes (block size of 4 bytes)

- Address bits: 14 (minimum)

- Cache sets: 256/4 = 64 sets

Address bits are divided into:

- Offset: 2 bits (4-byte cache lines)

- Index: 6 bits (64 sets, 2^6 = 64)

- Tag: 6 bits (14 - (6+2) = 6)
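The same arithmetic can be checked in a few lines. This is a minimal sketch that assumes all sizes are powers of two; the helper names `log2_exact` and `address_fields` are made up for this illustration.

```python
def log2_exact(n: int) -> int:
    """Return log2(n) for a power of two; reject anything else."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


def address_fields(memory_bytes: int, cache_bytes: int, block_bytes: int) -> dict[str, int]:
    """Compute the address, offset, index and tag widths in bits."""
    address_bits = log2_exact(memory_bytes)
    offset_bits = log2_exact(block_bytes)
    index_bits = log2_exact(cache_bytes // block_bytes)  # one line per set
    tag_bits = address_bits - index_bits - offset_bits
    return {"address": address_bits, "offset": offset_bits,
            "index": index_bits, "tag": tag_bits}


# 16 KB main memory, 256-byte cache, 4-byte blocks
fields = address_fields(16 * 1024, 256, 4)
print(fields)  # {'address': 14, 'offset': 2, 'index': 6, 'tag': 6}
```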

Direct Mapped Cache Formula

The cache line that a main memory block maps to is given by:

i = j modulo m

Where:

- i = cache line number

- j = main memory block number

- m = number of cache lines
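To see the formula in action, the short sketch below groups the first twelve memory blocks by the line they map to. The cache size of m = 4 lines and the function name `cache_line` are assumptions chosen only to keep the output small.

```python
from collections import defaultdict


def cache_line(j: int, m: int) -> int:
    """Direct mapping: block j goes to line i = j mod m."""
    return j % m


m = 4  # hypothetical cache with 4 lines
lines = defaultdict(list)
for j in range(12):
    lines[cache_line(j, m)].append(j)

for i in sorted(lines):
    print(f"line {i}: blocks {lines[i]}")
# line 0: blocks [0, 4, 8]
# line 1: blocks [1, 5, 9]
# line 2: blocks [2, 6, 10]
# line 3: blocks [3, 7, 11]
```

Blocks that are m apart always land on the same line, which is why the tag is needed to tell them apart.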

Issues with Direct Mapped Cache

- Cache thrashing: Two memory blocks mapping to the same location can swap continually

- More conflict misses than set associative or fully associative cache mapping

- Longer average access time when conflict misses are frequent, despite the low hit latency

- Inflexibility: May not utilize all cache blocks effectively

- Lower hit rate because each set holds only one cache line

- Repeated evictions even with empty blocks available
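Thrashing is easy to reproduce in a small model. The sketch below counts misses for two access patterns on a 64-line cache. The function name `count_misses` is hypothetical, and each line stores the whole block number rather than a tag, which is equivalent for this purpose.

```python
def count_misses(block_numbers, num_lines):
    """Count misses for a sequence of block accesses in a direct mapped cache."""
    lines = [None] * num_lines  # block currently held by each line
    misses = 0
    for block in block_numbers:
        index = block % num_lines
        if lines[index] != block:
            lines[index] = block  # evict and replace
            misses += 1
    return misses


conflicting = [0, 64] * 4  # both blocks map to line 0
friendly = [0, 1] * 4      # blocks map to lines 0 and 1

print(count_misses(conflicting, 64))  # 8
print(count_misses(friendly, 64))     # 2
```

In the conflicting pattern every access misses even though 63 of the 64 lines stay empty, while the friendly pattern misses only on the first touch of each block.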

How Does Direct Mapped Cache Work?

- Read access uses the index (central portion of the address) as the row number

- Tag and data are looked up simultaneously

- Tag is compared with the upper part of the address

- Lower part of the address selects the requested data from the cache line

The design ensures only one place in the cache for each read address, which can lead to competition for cache lines.

Advantages of Direct Mapped Cache

- Simple operation and easy implementation

- Fastest search process (only tag field matching required)

- Power-efficient placement policy

- No need to search all cache lines

- Cheaper hardware (only one tag checked at a time)

- Less expensive than fully associative or set associative caches

Is Direct Mapped Cache Faster?

Yes, a direct mapped cache has the lowest hit latency because each lookup needs only a single tag comparison. However, this speed comes with some trade-offs, as discussed earlier.

How Many Sets Does a Direct Mapped Cache Have?

The number of sets in a direct mapped cache equals the number of cache lines, because each set holds exactly one line. The width of the index fixes the count. For example:

- 9-bit index: 2^9 sets

- 10-bit index: 2^10 sets

The number of sets can be calculated using: (Size of cache) / (Size of one block)

Why are Tag Bits Needed in Direct Mapped Cache?

Tag bits supply the remaining address bits to distinguish between different memory locations that map to the same cache block.

How Many Tag Bits are Needed in Direct Mapped Cache?

The number of tag bits varies based on the main memory size and cache configuration. For example:

- 32-bit address, 4-word cache line, 16 KB cache: 18 tag bits

- 32-bit address, 4-word cache line, 2 KB cache: 21 tag bits
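The tag width is what remains of the address after the index and offset bits are removed. The sketch below assumes byte addressing and 4-byte words, so a 4-word line is 16 bytes; the function name `tag_bits` is made up for this illustration.

```python
def tag_bits(address_bits: int, cache_bytes: int, block_bytes: int) -> int:
    """Tag width = address width - index bits - offset bits (sizes are powers of two)."""
    num_lines = cache_bytes // block_bytes
    index_bits = num_lines.bit_length() - 1
    offset_bits = block_bytes.bit_length() - 1
    return address_bits - index_bits - offset_bits


# Assumption: 4-word line with 4-byte words = 16-byte line
print(tag_bits(32, 16 * 1024, 16))  # 18
print(tag_bits(32, 2 * 1024, 16))   # 21
```

For a direct mapped cache the index and offset bits together always equal log2 of the cache size, so the tag width depends only on the address width and the cache size, not on the line size.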

How Many Total Bits are Needed for a Direct Mapped Cache?

The total bits needed depend on the cache size and configuration. For a 16 KB cache with 4-word blocks and a 32-bit address, taking one word as one byte so that each block holds 4 bytes:

- Calculate the number of cache lines: 16 KB / 4 B = 2^12 lines

- Determine bits for cache line: log2(2^12) = 12 bits

- Calculate bits for word in a line: log2(4) = 2 bits

- Determine tag bits: 32 - 12 - 2 = 18 bits

- Calculate tag memory size: 18 * 2^12 = 72 K bits

- Add data memory size: 128 K bits

- Total memory needed: 72 K + 128 K = 200 K bits
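The steps above can be reproduced with a short calculation. This sketch follows the same assumptions as the worked example (4-byte blocks, no valid bits counted); the function name `storage_bits` is hypothetical.

```python
def storage_bits(address_bits: int, cache_bytes: int, block_bytes: int) -> tuple[int, int, int]:
    """Return (tag memory, data memory, total) in bits for a direct mapped cache."""
    num_lines = cache_bytes // block_bytes
    index_bits = num_lines.bit_length() - 1      # log2 of a power of two
    offset_bits = block_bytes.bit_length() - 1
    tag = address_bits - index_bits - offset_bits
    tag_memory = tag * num_lines                 # one tag per line
    data_memory = cache_bytes * 8                # cached data itself
    return tag_memory, data_memory, tag_memory + data_memory


tag_mem, data_mem, total = storage_bits(32, 16 * 1024, 4)
print(tag_mem // 1024, data_mem // 1024, total // 1024)  # 72 128 200
```

A real cache also stores at least one valid bit per line, which would add one more bit for each of the 2^12 lines.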

Conclusion

Direct mapped cache, while simple in concept, involves complex calculations and trade-offs. Its straightforward mapping strategy offers speed advantages but can lead to issues like cache thrashing and lower hit rates. Understanding these intricacies is crucial for optimizing cache performance in computer systems.