Shared GPU memory has two related meanings. In the first, it is a system design in which the graphics processor uses a portion of the computer's main RAM instead of having its own dedicated video memory. In the second, it is the system RAM that a graphics card may borrow once its dedicated memory is exhausted; this pool is typically capped at half of the system's RAM.

Understanding Shared GPU Memory

Integrated graphics chips that share system memory usually employ a mechanism involving BIOS settings or jumpers to determine how much RAM the graphics chip can use. This allows customization of graphics memory allocation, leaving the rest available for other components and applications.

However, this arrangement has a notable drawback - when the graphics chip uses a portion of system memory, that amount becomes unavailable for other purposes. For example, if a computer has 512 MB of RAM and the integrated graphics card uses 64 MB, only 448 MB remains for the operating system and other applications, potentially slowing down the system.
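The arithmetic in this example can be sketched in a few lines of Python. The function name `usable_ram_mb` is hypothetical, and the model is deliberately simplified: it assumes a single fixed reservation and ignores any other memory the firmware or hardware may hold back.

```python
def usable_ram_mb(total_mb: int, graphics_reserved_mb: int) -> int:
    """Return the RAM left for the OS and applications after a fixed
    graphics reservation (simplified model, all values in MB)."""
    if graphics_reserved_mb < 0 or graphics_reserved_mb > total_mb:
        raise ValueError("reservation must be between 0 and total_mb")
    return total_mb - graphics_reserved_mb


# The example from the text: 512 MB installed, 64 MB reserved for graphics.
print(usable_ram_mb(512, 64))  # 448
```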

This performance hit occurs because:

- System RAM typically runs slower than dedicated graphics memory

- The memory bus must be shared with other system components

One way to mitigate these issues is by using a faster memory pool, as done in some Silicon Graphics computers, particularly the O2/O2+ variants.

This type of shared memory arrangement is called Unified Memory Architecture (UMA). Many early personal computers used shared memory for both graphics and CPU operations.

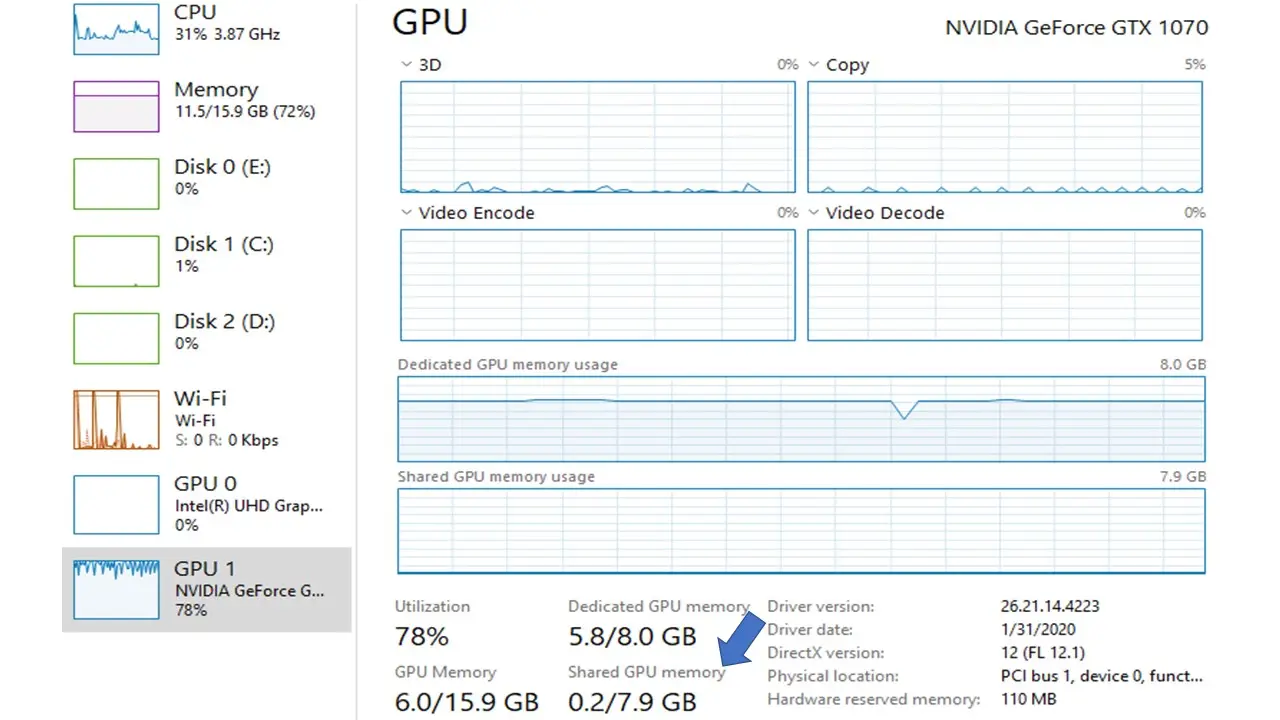

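Even systems with dedicated GPUs often make up to half of the main system memory available as shared GPU memory. This is an upper limit rather than a permanent reservation: the RAM is consumed only when the GPU actually spills into it. Spilling into RAM is preferred over paging to disk because RAM is much faster than SSDs or HDDs.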
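As a rough illustration of the "up to half" figure, the sketch below estimates how much memory a GPU could address in total. It assumes the limit is exactly half of installed RAM, which is a common default rather than a guarantee, and both function names are hypothetical.

```python
def shared_gpu_limit_gb(system_ram_gb: float, fraction: float = 0.5) -> float:
    """Upper bound on shared GPU memory, assuming a fixed fraction of RAM."""
    return system_ram_gb * fraction


def total_gpu_memory_gb(dedicated_gb: float, system_ram_gb: float) -> float:
    """Dedicated VRAM plus the shared limit (an upper bound, not a reservation)."""
    return dedicated_gb + shared_gpu_limit_gb(system_ram_gb)


# Hypothetical machine: 8 GB of VRAM and 32 GB of system RAM.
print(shared_gpu_limit_gb(32))     # 16.0
print(total_gpu_memory_gb(8, 32))  # 24.0
```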

Key Aspects of Shared GPU Memory

Definition: Shared GPU memory is system RAM made available to the GPU. Integrated graphics rely on it as their main memory, while dedicated GPUs fall back on it when they run out of their own memory.

Dedicated vs. Shared GPU Memory:

- Dedicated GPU memory is physical memory on the graphics card, offering faster access.

- Shared GPU memory is allocated from system RAM, slower but more flexible.

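GPU Memory Hierarchy (fastest to slowest, using NVIDIA terminology):

- Registers (private to each thread, inside a Streaming Multiprocessor, or SM)

- L1 cache/SMEM (on-chip, one per SM)

- L2 cache (shared by all SMs)

- VRAM

- System memory (RAM)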

Adjusting Shared GPU Memory: Generally, it's best to let the operating system manage memory allocation. Tweaking these settings rarely improves performance significantly.

Using Shared GPU Memory

In most cases, integrated graphics with shared memory suffice for casual gaming and basic tasks. Modern processors can even handle 4K video playback with integrated graphics.

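To change the amount of memory reserved for integrated graphics on a Windows PC, you need to access the BIOS/UEFI settings rather than Windows itself. However, this is not recommended as it rarely improves performance. A utility such as Process Lasso can adjust process and I/O priorities instead, although it does not change how much memory the GPU receives. TensorFlow, by contrast, is a machine learning framework rather than a tuning utility; it can only control how much GPU memory its own processes allocate.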

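For developers, OpenCL and CUDA expose a similarly named but different feature: fast on-chip memory shared by the threads of one work-group or thread block. This corresponds to the L1 cache/SMEM level of the hierarchy, not to system RAM:

- OpenCL: Use __local memory

- CUDA: Use __shared__ memory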

Performance Impact

The impact of shared GPU memory on performance depends on the amount and speed of system RAM. Generally, for gaming, dedicated graphics cards are preferred over integrated solutions.

If you're considering adding more RAM to improve gaming performance, check the Task Manager during gameplay. If RAM usage exceeds 80%, additional memory might help.
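The 80% rule of thumb can be written as a small check. This is a minimal sketch: the function name is hypothetical, and the usage figures are assumed to be read manually from Task Manager during gameplay.

```python
def ram_upgrade_might_help(used_gb: float, total_gb: float,
                           threshold: float = 0.80) -> bool:
    """Return True when RAM usage exceeds the threshold (80% by default)."""
    if total_gb <= 0:
        raise ValueError("total_gb must be positive")
    return used_gb / total_gb > threshold


# Hypothetical readings on a 16 GB machine.
print(ram_upgrade_might_help(14.2, 16))  # True (about 89% in use)
print(ram_upgrade_might_help(9.5, 16))   # False (about 59% in use)
```

A True result only suggests that more RAM might help; it does not measure how much performance would improve.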

Gaming with Shared GPU Memory

While not ideal, shared GPU memory can be used for gaming, especially when dedicated VRAM is exhausted. It helps prevent game crashes when VRAM is full by allowing overflow into system RAM.
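The overflow behaviour described above can be pictured with a toy model. This is a simplified sketch, not how a real driver manages memory: actual drivers move resources at a finer granularity, and the function name and figures are hypothetical.

```python
def place_allocation(request_mb: int, vram_free_mb: int,
                     shared_free_mb: int) -> tuple[int, int]:
    """Split a request between VRAM and shared system RAM.

    Returns (in_vram_mb, in_shared_mb). Raises MemoryError when the
    request fits in neither pool combined.
    """
    in_vram = min(request_mb, vram_free_mb)   # fill dedicated VRAM first
    overflow = request_mb - in_vram           # remainder spills to system RAM
    if overflow > shared_free_mb:
        raise MemoryError("request exceeds VRAM plus shared GPU memory")
    return in_vram, overflow


# 3000 MB requested with 2048 MB of VRAM free: 952 MB spills into system RAM.
print(place_allocation(3000, 2048, 8192))  # (2048, 952)
```

The spilled portion lives in slower system RAM, which is why a game in this state keeps running but usually at a lower frame rate.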

Conclusion

Shared GPU memory is a crucial component in modern computer systems, allowing for flexible allocation of resources between the CPU and GPU. While it may not match the performance of dedicated GPU memory, it provides a cost-effective solution for many users and helps prevent system crashes when dedicated memory is exhausted. For most users, the default settings managed by the operating system will suffice, and manual tweaking is rarely necessary or beneficial.