Computers as you know them today have existed in some form since very early times, albeit in very different designs.

Primitive computer designs existed early in the 19th century and, over time, they changed in form and functionality to take the world by storm in the 20th century.

The computers you find today are quite unrecognizable from those of the 19th century, and it all started with the Analytical Engine designed by Charles Babbage, often called the ‘father of the computer.’

KEY TAKEAWAYS

- Primitive people used stones, bones, and sticks to count things for trade.

- Before modern computing machines, there were calculating machines such as the abacus, Napier’s bones, the Pascaline, and the Leibniz wheel.

- The difference engine, analytical engine, tabulating machine, differential analyzer, and Mark I computer were developed subsequently.

- After that came the different generations of computers. Today the world of computers is said to be in its fifth generation, which started back in the 1980s.

Understanding History of Computers

Before computers existed, people used stones, sticks, and even bones to count.

With the improvement of the human mind and advancement of technology over time, other computing devices evolved.

These devices were not very complex but performed mathematical computations pretty well.

Some of the most popular computing devices of the old days that are recorded in the history of computers are:

- Abacus – The Chinese are said to have invented it more than 4000 years ago. The abacus is often regarded as the first computing device in the world. It is essentially a wooden rack with metal rods on which beads are mounted to perform arithmetic calculations.

- Napier’s bones – This is another manually operated calculating tool, invented by John Napier. It consisted of nine bones, or ivory strips, marked with numbers, which helped in multiplying and dividing. It is said to be the first device that used the decimal point method for calculation.

- Pascaline – Also called the Adding Machine or Arithmetic Machine, this machine was invented by Blaise Pascal, the French mathematician-philosopher, between 1642 and 1644. It is considered to be the first automatic mechanical calculator. It could add and subtract numbers quickly and helped Pascal’s father, who worked with taxes, in his calculations. It consisted of a wooden box with a set of wheels and gears.

- Leibniz wheel – Also called the Stepped Reckoner, this machine was invented by the German mathematician-philosopher Gottfried Wilhelm Leibniz in 1673. This digital mechanical calculator was an improvement on Pascal’s machine, replacing the gears in Pascal’s model with fluted drums.

- Difference engine – It was designed by Charles Babbage in the early 1820s. This mechanical computer was meant to perform simple calculations and compute tables of numbers, such as logarithmic tables. The machine was designed to be driven by steam.

- Analytical Engine – This was also designed by Charles Babbage, in the 1830s. This mechanical computer used punch cards for input and was conceived as a general-purpose machine able to solve a wide range of mathematical problems. It could also store information in its own memory.

- Tabulating machine – It was invented by Herman Hollerith, an American statistician, in 1890. This mechanical tabulator operated using punch cards. It could tabulate and record statistics and also sort data. The machine was used for the 1890 US Census. It was manufactured by Hollerith’s Tabulating Machine Company, whose successor was renamed International Business Machines, or IBM, in 1924.

- Differential analyzer – Built by Vannevar Bush in the United States around 1930, this was a large-scale analog computer. It is sometimes described as the first electronic computer, although the original machine was largely mechanical and later versions added vacuum tubes to switch electrical signals. The machine could perform up to 25 calculations in a couple of minutes.

- Mark I – This machine was built in 1944 by IBM and Harvard in partnership and is often considered to be the first programmable digital computer. With it, a new era dawned in the computer world, and the different generations of computers started to emerge.

There are typically five generations of computers which cover inventions from the 1940s to today.

- The first generation computers are those from the period between 1940 and 1956. These computers were large, slow, and expensive. They used vacuum tubes, and their operation relied on punch cards and batch operating systems. Paper tape and magnetic tape were used for input and output. A few examples of first generation computers are ENIAC, UNIVAC I, and EDVAC.

- The second generation computers are those from the period between 1957 and 1963. These computers used low-cost transistors that were compact and needed less power to operate, and they were faster. Magnetic cores served as primary memory, while magnetic tapes and discs served as secondary memory. Assembly language and high-level languages such as COBOL and FORTRAN were used on these computers, along with batch processing and multiprogramming operating systems. A few examples of second generation computers are the IBM 1620, IBM 7094, CDC 1604, and CDC 3600.

- The third generation computers came to the market later, in the gap between the second and fourth generations (roughly 1964 to 1971), and used Integrated Circuits or ICs in place of individual transistors. A single IC contained several transistors. This reduced the cost and increased the power of the computers. These machines were more efficient, more reliable, and smaller in size. Time sharing, remote processing, and multiprogramming operating systems were used on these computers, and high-level languages such as COBOL, Pascal, PL/1, and FORTRAN II to IV were used for programming. A few examples of third generation computers are the IBM-360 series, IBM-370/168, and Honeywell-6000 series.

- The fourth generation computers are those machines from the years 1971 to 1980. These computers used VLSI or Very Large Scale Integrated circuits, chips that packed a very large number of transistors together with other circuit components. This made these computers more powerful, more compact, faster, and cheaper. They used time sharing, real-time, and distributed operating systems along with the C and C++ programming languages. A few examples of fourth generation computers are the STAR 1000, CRAY-1, CRAY-X-MP, and PDP 11.

- The fifth generation computers are those that have come to the market from 1980 to date. These computers use ULSI or Ultra Large Scale Integration technology in place of VLSI circuits, with microprocessor chips that comprise around ten million electronic parts. These modern computers use Artificial Intelligence or AI software and parallel processing hardware, and the main programming languages include Java, C, C++, and .Net.

Now, take a look at the timeline of computers and related developments to get a better understanding of the history of computers.

19th Century:

1801 – A loom was invented by the French merchant Joseph Marie Jacquard. It used wooden punch cards to weave fabric designs automatically. Early computers would later use similar punch cards.

1821 – Charles Babbage, an English mathematician, conceived a steam-driven calculating machine that could compute tables of numbers. According to the University of Minnesota, this project was called the ‘Difference Engine’ and was funded by the British government, but it failed because of the limits of the technology available at that time. Later, in the 1830s, he designed the Victorian-era computer called the Analytical Engine.

1843 – The first computer program in the world was written by Ada Lovelace, an English mathematician and the daughter of the poet Lord Byron, while she was translating a paper on Charles Babbage’s Analytical Engine from French to English. Her translation included her own comments and annotations, along with a step-by-step description of how to compute Bernoulli numbers using Babbage’s machine.

1853 – The first printing calculator in the world was designed by the Swedish inventor Per Georg Scheutz and his son Edvard. It could calculate tabular differences and print the results.

1890 – Herman Hollerith designed a machine that used punch cards. According to reports, it was used to tabulate the 1890 US Census.

Early 20th Century:

1931 – Vannevar Bush built a differential analyzer at the Massachusetts Institute of Technology. According to Stanford University, this was the first automatic, large-scale, general-purpose, mechanical analog computer.

1936 – Alan Turing, a British scientist and mathematician, published a paper describing a universal machine, which was later called the Turing machine. This theoretical machine could compute anything that was computable, and it is regarded as the key concept behind modern computers.

According to the UK’s National Museum of Computing, Turing was also involved in developing the Turing-Welchman Bombe, an electro-mechanical device that could decipher Nazi codes.

1937 – John Vincent Atanasoff, a professor of physics and mathematics at Iowa State University, submitted a proposal to build the first electric-only computer in the world, one without any cams, gears, shafts, or belts.

1939 – According to MIT, the Hewlett Packard Company was formed by David Packard and Bill Hewlett in Packard’s garage in Palo Alto, California.

1941 – The Z3 machine was created by the German inventor and engineer Konrad Zuse. It is considered to be the first digital computer in the world.

However, this machine was destroyed in a bombing raid on Berlin during World War II. Zuse fled the German capital after the defeat of Nazi Germany and later, in 1950, released the Z4, which is considered to be the first commercial digital computer in the world.

1941 – The first digital electronic computer in the US was designed by Atanasoff and his graduate student, Clifford Berry. It was called the ABC, or the Atanasoff-Berry Computer.

This was the first machine in the world that could store information in its main memory. It could perform one operation every 15 seconds.

1945 – John Mauchly and J. Presper Eckert, two professors at the University of Pennsylvania, designed and built the ENIAC, or Electronic Numerical Integrator and Computer. This device is considered to be the first general-purpose, automatic, decimal, electronic, digital computer.

1946 – Mauchly and Eckert left the University of Pennsylvania and received funding from the Census Bureau to build the UNIVAC, the first commercial computer for business and government applications.

1947 – The transistor was invented by William Shockley, Walter Brattain, and John Bardeen of Bell Laboratories. They designed an electric switch that needed no vacuum and was made entirely of solid materials.

1949 – The EDSAC or the Electronic Delay Storage Automatic Calculator was developed by a team at the University of Cambridge.

This is considered to be the first practical stored-program computer. EDSAC ran its first program in May 1949, when it computed a list of prime numbers and a table of squares.

Later, in November 1949, scientists at the CSIR, or Council for Scientific and Industrial Research, which is now known as CSIRO, built the first digital computer in Australia.

It was named the Council for Scientific and Industrial Research Automatic Computer, or CSIRAC. It is considered to be the first digital computer in the world that could play music.

Late 20th Century:

1953 – According to the National Museum of American History, Grace Hopper developed the first computer language, which eventually became known as COBOL.

COBOL stands for COmmon Business-Oriented Language. Hopper was called the ‘First Lady of Software’ in her posthumous Presidential Medal of Freedom citation.

1954 – According to MIT, a team of programmers led by John Backus at IBM published a paper describing their newly created FORTRAN programming language. The name is short for Formula Translation.

1958 – The Integrated Circuit, or IC, was unveiled by Jack Kilby and Robert Noyce. Jack Kilby was later awarded the Nobel Prize in Physics for his part in inventing this computer chip.

1968 – Douglas Engelbart revealed a model of the modern computer at the Fall Joint Computer Conference in San Francisco. His presentation was called ‘A Research Center for Augmenting Human Intellect.’

According to the Doug Engelbart Institute, it included a live demonstration of a computer with a Graphical User Interface, or GUI, and a mouse.

This is considered to mark the shift of the computer from a specialized machine for academics to a more accessible technology for the general public.

1969 – The UNIX operating system was produced by Dennis Ritchie, Ken Thompson, and a team of other developers at Bell Labs.

This operating system made networking of different computing systems on a large scale practical. According to Bell Labs, the team continued to develop this operating system to optimize it by using the C programming language.

1970 – The newly formed Intel unveiled the Intel 1103, a DRAM or Dynamic Random Access Memory chip.

1971 – Alan Shugart and his team of engineers at IBM invented the floppy disk. This allowed sharing data among different computers.

1972 – Ralph Baer, a German-American engineer, released the Magnavox Odyssey in September. According to the Computer Museum of America, this is considered to be the first home game console in the world.

A few months later, entrepreneur Nolan Bushnell and engineer Al Alcorn of Atari released Pong, which is considered to be the first commercially successful video game in the world.

1973 – Ethernet was developed by Robert Metcalfe, a member of the research staff at Xerox. It allowed different computers and other hardware to be connected.

1975 – After seeing the Altair 8800 on the cover of the January issue of Popular Electronics, Paul Allen and Bill Gates offered to write software for it using the BASIC language. Later, on April 4, they formed their own company, Microsoft.

1976 – Apple was co-founded by Steve Jobs and Steve Wozniak on April 1. According to MIT, they unveiled their first computer, the Apple I, which had a single circuit board and Read Only Memory. It was designed by Steve Jobs, Steve Wozniak, and Ron Wayne. Users could add keyboards and display units to the basic circuit board.

1977 – The Commodore PET, or Personal Electronic Transactor, was launched in the home computer market. It contained an 8-bit 6502 microprocessor with MOS (Metal Oxide Semiconductor) technology, which controlled the keyboard, screen, and cassette player. It was widely used in education.

According to the National Museum of American History, the first production run of Radio Shack’s TRS-80 Model 1 computers also started this year.

This year also saw the launch of the Apple II computer by Steve Jobs and Steve Wozniak at the first West Coast Computer Faire, held in San Francisco. This computer included color graphics and featured an audio cassette drive for storage.

1978 – The first computerized spreadsheet program VisiCalc was introduced.

1979 – WordStar was released by MicroPro International, a company founded by the software engineer Seymour Rubenstein. It is considered to be the first commercially successful word processor in the world. It was programmed by Rob Barnaby and included 137,000 lines of code.

1981 – IBM released its first personal computer, code-named ‘Acorn’, which used the MS-DOS operating system from Microsoft. This computer came with optional features such as a display, additional memory, a printer, two diskette drives, and a game adapter.

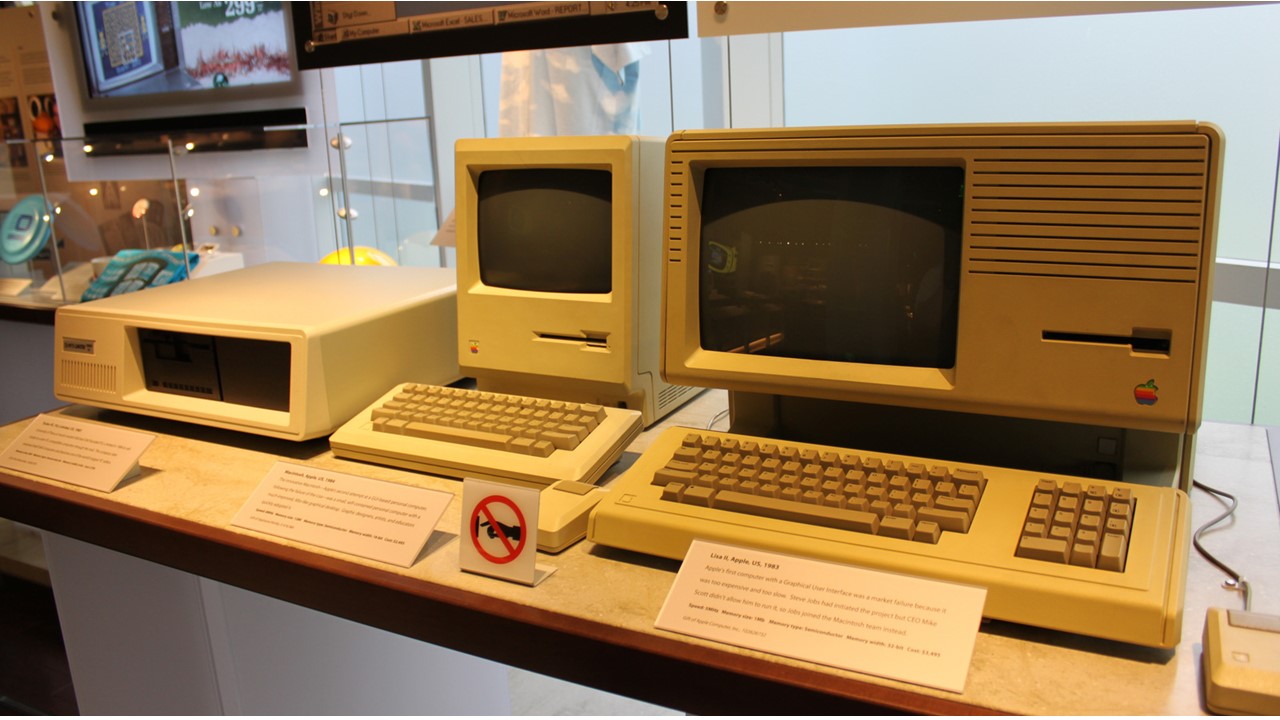

1983 – The Apple Lisa, the first personal computer to feature a Graphical User Interface, was launched.

Lisa was the acronym for Local Integrated Software Architecture, but it was also the name of Steve Jobs’s daughter. According to NMAH, or the National Museum of American History, this personal computer also featured icons and a drop-down menu.

This year also saw the release of the Gavilan SC, the first portable computer in the world with a flip-form design and the first to be sold as a ‘laptop.’

1984 – According to the NMAH, the Apple Macintosh was announced to the world this year in a Super Bowl ad.

1985 – According to the Guardian, Microsoft released Windows in November as a response to the GUI of the Apple Lisa. Commodore also announced the Amiga 1000 this year.

1989 – Tim Berners-Lee, a British researcher at the European Organization for Nuclear Research, or CERN, submitted a proposal for the World Wide Web. It included his ideas on HTML, or Hyper Text Markup Language, which forms the building blocks of the Web.

1993 – The Pentium microprocessor advanced the use of music and graphics on personal computers.

1996 – The Google search engine was developed by Sergey Brin and Larry Page at Stanford University.

1997 – Microsoft invested $150 million in Apple, ending an ongoing court case in which Apple had accused Microsoft of copying its operating system.

1999 – Wireless Fidelity or Wi-Fi was developed. According to a report of Wired, it then covered a distance of up to 300 feet or 91 meters.

21st Century:

2001 – Mac OS X was released by Apple. It was later renamed OS X and then simply macOS. This operating system is considered to be the successor to the standard Mac Operating System. There are 16 different versions of it, each with ‘10’ in its title. According to TechRadar, the first nine versions were nicknamed after big cats, and the first one was called ‘Cheetah.’

2003 – The first 64-bit processor for personal computers, the AMD Athlon 64, was released by AMD, or Advanced Micro Devices Inc.

2004 – Mozilla Firefox 1.0 was launched by the Mozilla Corporation. It was a serious competitor to Internet Explorer, Microsoft’s web browser and the most commonly used one at the time.

2005 – Google bought Android, a mobile phone operating system based on Linux.

2006 – Apple launched the MacBook Pro, which is considered to be its first Intel-based, dual-core mobile computer.

2009 – On July 22, Microsoft introduced Windows 7. According to TechRadar, this new operating system came with features such as the ability to pin apps to the taskbar, easily accessible jump lists, shaking a window to scatter other windows, and easier previews in the form of tiles.

2010 – Apple unveiled the iPad, its flagship handheld tablet.

2011 – Google launched the Chromebook, which runs Google Chrome OS.

2015 – The Apple Watch is released by Apple. Also, Windows 10 was released by Microsoft this year.

2016 – The first reprogrammable quantum computer in the world was developed, allowing fresh algorithms to be programmed into the system.

2017 – DARPA, or the Defense Advanced Research Projects Agency, developed a new ‘Molecular Informatics’ program. It uses molecules as computers, exploiting their unique 3D atomic structure to process and store information rapidly and at scale.

Anne Fischer, the program manager, said that the variables of molecules, such as their size, shape, and even color, provide a massive design space.

This offers ways to explore new, multi-value techniques for encoding and processing data beyond the current digital logic architecture based on 0s and 1s.

2018 – Apple announced macOS 10.14, codenamed Mojave, on June 4, 2018 at the WWDC, or Apple Worldwide Developers Conference.

Apple also introduced the iPhone XS on September 21, 2018, and in October 2018 it announced that it would use a USB-C connection in place of the Lightning connection in future models.

In addition, the University of Michigan announced on June 21, 2018 that it had created the smallest computer in the world, built around an ARM Cortex-M0 microcontroller and measuring only 0.3 mm on each side.

2019 – Samsung introduced its first folding smartphone, the Galaxy Fold, in February. Apple, on the other hand, introduced the iPhone 11, iPhone 11 Pro, and iPhone 11 Pro Max in September.

Version 1.0 of the Nim programming language was released on September 23, and UC Berkeley and Lawrence Livermore National Laboratory developed a 3D printing technology called computed axial lithography.

2020 – This year saw the use of Artificial Intelligence as a service and of 5G data networks. It also saw Computer Vision technology used in smartphone cameras to capture better images, along with more sophisticated cloud computing, Extended Reality, and blockchain technology.

2021 – This year saw the rise of Edge Computing, which stores data close to users rather than in the cloud, and of Quantum Computing, which helped in solving problems at both atomic and subatomic levels.

Robotics and bioinformatics technology were also used extensively this year. In addition, Elon Musk announced the Tesla Bot, Facebook changed its name to Meta, Mark Zuckerberg announced the Metaverse, and IBM designed a cutting-edge quantum computer chip called the ‘Eagle.’

Conclusion

So, as this article points out, computers are not an invention of this century. They existed in different forms and shapes even in much earlier ages.

Over time, technological advancements and growing computing needs simply made the devices better and better.