What is L1 (Level 1) Cache?

A Level 1 cache, or L1 cache, is the area of memory within the microprocessor that stores the data and instructions the processor has accessed most recently. Also referred to as primary cache, system cache or internal cache, it is the fastest cache memory, but it is also the most expensive per byte and the smallest in size.

The L1 cache is also the first cache that the processor checks. It is divided into two parts, namely the data cache and the instruction cache.

KEY TAKEAWAYS

- There are two parts of L1 cache. One stores the data and is known as the data cache, denoted as L1d, and the other stores instructions and is known as the instruction cache, denoted as L1i. The L1i is often equal to, and in some designs double, the size of the L1d.

- The L1 cache is normally made of Static Random Access Memory which is faster than Dynamic RAM.

- The Level 1 cache differs from other CPU caches both in size and in the fact that it is private to a single CPU core rather than shared between cores.

- The L1 cache has its own control logic and is located closest to the CPU cores, which reduces the access latency.

Understanding L1 (Level 1) Cache

Among all the different types of cache memory, the L1 cache has a zero wait-state interface, which makes it the fastest of them all.

Among all CPU caches, the Level 1 cache is also the most expensive one.

Though the L1 cache is the closest and fastest cache, it is also the smallest.

This cache stores not only the data that the CPU has accessed recently but also the data and instructions that it is likely to need immediately.

When the CPU executes an instruction, the Level 1 cache is the first one it accesses for the data or instructions it needs.

In modern microprocessors, the Level 1 cache is usually divided into two parts:

- One cache that typically stores all the program data, which is often called the data cache and is denoted as L1d and

- One cache that stores all the instructions to be given to the microprocessor, which is often called the instruction cache and is denoted as L1i.

However, some older microprocessors use an undivided (unified) Level 1 cache that stores both the instructions for the microprocessor and the program data.

In general, all Level 1 caches follow the same design principle: control logic keeps the most frequently used data in the cache.

As for the hit rate of the Level 1 cache, in the real world, it typically ranges between 95% and 97%.

However, at this point it is very important to keep in mind that the cost of the remaining misses depends on where the missed data sits, and it is small when that data is in the Level 2 cache.

If it were in the main memory instead of the Level 2 cache, the performance would be much different.

In fact, the difference between a 95% and a 97% hit rate could then add substantially to the total time required to run the code, given that main memory access latency ranges between 80 ns and 120 ns.
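The effect of the hit rate can be checked with simple arithmetic. The sketch below is only an illustration: the 1 ns L1 latency and 10 ns L2 latency are assumed values, and 100 ns is picked from the middle of the 80 ns to 120 ns main memory range mentioned above.

```python
def total_access_time_ns(accesses, hit_percent, hit_ns, miss_ns):
    """Total time for `accesses` reads when `hit_percent` of them hit in L1."""
    hits = accesses * hit_percent // 100
    misses = accesses - hits
    return hits * hit_ns + misses * miss_ns

L1_NS = 1     # assumed L1 hit latency
L2_NS = 10    # assumed latency when a miss is served by L2
RAM_NS = 100  # assumed latency when a miss is served by main memory

for hit_percent in (97, 95):
    via_l2 = total_access_time_ns(100, hit_percent, L1_NS, L2_NS)
    via_ram = total_access_time_ns(100, hit_percent, L1_NS, RAM_NS)
    print(f"{hit_percent}% hits: misses in L2 -> {via_l2} ns, "
          f"misses in RAM -> {via_ram} ns")
```

With these assumed numbers, 100 reads take 127 ns at a 97% hit rate and 145 ns at 95% when the misses are served by L2, but 397 ns and 595 ns when they are served by main memory.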

In some designs, the Level 1 instruction cache also stores decoded instructions, and the small size of the L1 cache makes it faster than the other caches.

History

The split L1 cache was first used in the IBM 801 CPU in 1976. However, it was only in the late 1980s that the split L1 cache became mainstream.

It took a long time to reach the embedded CPU market, where it first appeared in 1997 with the ARMv5TE.

By 2015, even sub-dollar Systems on a Chip (SoCs) split the Level 1 cache. These also have Level 2 caches and, in the larger processors, a Level 3 cache as well.

Build

The Level 1 cache is usually made of SRAM or Static Random Access Memory.

SRAM is faster, but also more expensive, than DRAM or Dynamic Random Access Memory.

Every core of a multi-core CPU has its own dedicated Level 1 cache, and these are typically not shared between the cores, unlike the higher-level caches.

Within any given cache level, all blocks are the same size and have the same associativity.

Compared with the higher-level caches, the lower-level caches such as the Level 1 cache have:

- Fewer blocks

- A smaller block size

- Fewer blocks in a set and

- Shorter access times.

The Level 1 caches are designed and built to have the lowest latency possible for their capacity while still offering a satisfactory hit rate.

Therefore, the Level 1 caches are built with much wider metal tracks and larger transistors, trading off power and space for speed.

On the other hand, the higher-level caches can be comparatively slower because they need to have higher capacities.

Therefore, they are built with smaller transistors that are packed more tightly.

Features

The features of the Level 1 cache are quite different from the other CPU caches such as the Level 2 and Level 3 caches. These are:

- The Level 1 cache is private to a single CPU core and is not shared between cores

- The Level 1 cache is typically 4 to 8 times smaller than the Level 2 cache and

- The Level 1 cache is much smaller than the Level 3 cache, which can be 64 to 256 times larger.

Most importantly, the Level 1 cache is located inside the CPU core. The Level 2 cache is inside the processor chip but farther from the core's execution logic, and the Level 3 cache, which sat outside the processor chip in older systems, is on the chip in modern processors but shared between the cores.

And, as said earlier, the Level 1 cache has separate instruction and data parts, whereas the Level 2 and Level 3 caches are typically unified, storing both instructions and data.

How Does It Work?

As said earlier, all Level 1 caches follow the same basic design.

The cache is built into the processor core and has control logic that keeps the data used frequently by the processor.

It updates the external memory only when the processor hands over control to other bus masters, especially when peripheral devices access the memory directly.

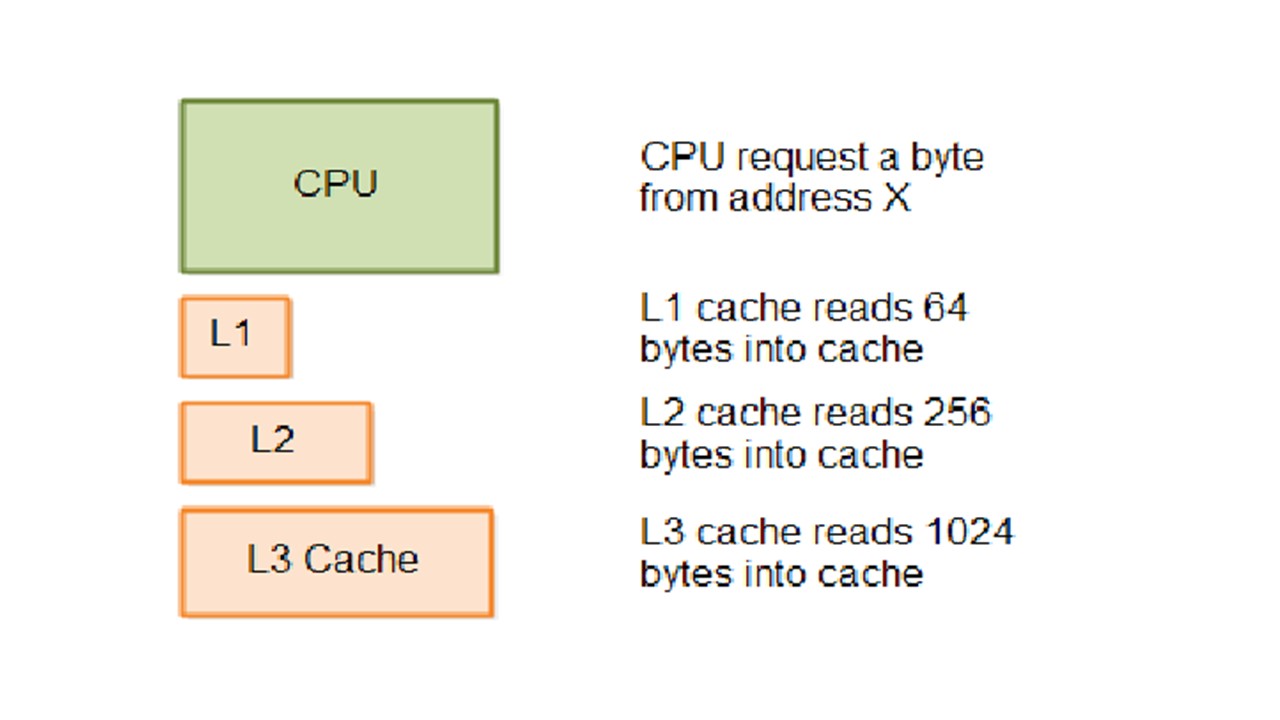

To explain its working process in the simplest terms, data flows from the RAM or Random Access Memory to the Level 3 cache, then to the Level 2 cache and finally to the Level 1 cache.

However, the processor searches in the reverse order when it looks for the data it needs to execute an operation.

That is, it first accesses the Level 1 cache for the necessary data. If the CPU finds the data there then this is called a cache hit.

However, if the processor cannot find the relevant data in the Level 1 cache in the first attempt, the condition is called a cache miss and the processor then looks for it in the Level 2 cache and subsequently in the Level 3 cache if the data is not available in the L2 cache.

If the data is not available in any of the three caches, it is fetched from the RAM and, if it is not there either, the operating system loads it from storage such as the SSD as the last resort.

Each step further away from the core adds wait time, which is where the lag comes from. That is why the Level 1 cache is so important.
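The search order described above can be sketched in a few lines. This is a toy model rather than how real hardware is implemented: the latencies are assumed, illustrative values, and the `contents` dictionary is a hypothetical stand-in for what each level currently holds.

```python
# Assumed, illustrative latencies in nanoseconds; real values vary by CPU.
LEVELS = [("L1", 1), ("L2", 4), ("L3", 20), ("RAM", 100)]

def lookup(address, contents):
    """Search each level in order; return (level that hit, time spent in ns)."""
    elapsed = 0
    for name, latency in LEVELS:
        elapsed += latency
        if address in contents[name]:
            return name, elapsed  # cache hit at this level
        # otherwise this is a miss, so fall through to the next level
    raise KeyError(f"address {address:#x} is not in memory at all")

# Hypothetical contents: each level holds everything the level above it holds.
contents = {
    "L1": {0x10},
    "L2": {0x10, 0x20},
    "L3": {0x10, 0x20, 0x30},
    "RAM": set(range(0x100)),
}

for address in (0x10, 0x20, 0x30, 0x40):
    level, elapsed = lookup(address, contents)
    print(f"{address:#x}: found in {level} after {elapsed} ns")
```

In this model an address found in L1 costs 1 ns, while one that has to come from RAM costs the sum of all four lookups, which is the wait time the text refers to.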

Since the entire Level 1 cache is split into distinct data and instruction caches, the code fetching process of the CPU is simplified.

The separate L1i cache is tied to a separate iTLB or instruction Translation Lookaside Buffer and the data cache is tied to the dTLB.

The instruction cache holds the instructions that describe the operation to be performed by the processor.

On the other hand, the data cache stores the data on which the CPU needs to perform that specific operation.

This lowers the latency and keeps instruction fetches and data accesses from competing for the same cache, making the working process smoother and faster.

L1 Cache Size

There is no golden rule or universal standard for the size of the Level 1 cache.

This means that you will need to check the specifications of the processor to find out the exact size of the L1 cache before you make your purchase.

However, the instruction cache part of the Level 1 cache is usually equal to or double the size of the data cache part.

This means that you can calculate the size of the Level 1 cache by the following formula:

Total size of L1 Cache = Size of L1i + Size of L1d.

In general, a larger Level 1 cache improves the hit rate, although, as explained later in this article, making it larger also makes it slower.

Measured in kilobytes (KB), the L1 cache size per core is typically anywhere between 8 KB and 64 KB, depending on the specific model.

There are lots of processors that come with an equal amount of instruction cache and data cache, such as Intel Cascade Lake, Intel Skylake, Intel Cooper Lake, AMD Ryzen 5 3600XT, and AMD Ryzen 9 5980HX, just to name a few.

All these processors come with 32 KB of instruction cache and 32 KB of data cache per core.

It is common practice for manufacturers to keep the Level 1 data cache and the Level 1 instruction cache the same size, but there are quite a few specific models of CPUs that have larger instruction caches than data caches or vice versa.

Here is a list of some of the most popular CPUs along with their Level 1 cache sizes, broken into two parts, instruction cache and data cache, to give you a basic idea:

- Intel Ice Lake – 32 KB and 48 KB instruction cache and data cache respectively

- Intel Gracemont – 64 KB and 32 KB instruction cache and data cache respectively and

- AMD Ryzen Threadripper 2990WX – 64 KB and 32 KB instruction cache and data cache respectively.

Ultimately, it is the specific CPU model that determines the size of the Level 1 cache.

If you consider some of the top-end consumer processors, such as the Intel Core i9-9980XE, the total L1 cache summed across all of its cores can be as large as about 1 MB, even though each individual core still has only tens of kilobytes.

However, these high-end processors are not only few and far between but will also cost you quite an exorbitant amount of money.

On the other hand, there are a few specific types of server processors, such as those belonging to the Xeon range of Intel, that also come with a large total Level 1 cache, which can be anywhere between 1 MB and 2 MB across all cores.
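Megabyte-sized L1 figures like these are easier to understand as a sum over all cores. Here is a minimal sketch of that sum, applying the formula above once per core. The core count and per-core sizes used for the Core i9-9980XE (18 cores, 32 KB L1i and 32 KB L1d each) are not stated in this article and should be checked against the manufacturer's specifications.

```python
def total_l1_kb(cores, l1i_kb, l1d_kb):
    """Aggregate L1 size: (L1i + L1d) for one core, times the number of cores."""
    return cores * (l1i_kb + l1d_kb)

# Assumed figures for illustration only: 18 cores, 32 KB L1i + 32 KB L1d each.
per_core_kb = total_l1_kb(1, 32, 32)
whole_chip_kb = total_l1_kb(18, 32, 32)

print(f"per core:   {per_core_kb} KB")
print(f"whole chip: {whole_chip_kb} KB ({whole_chip_kb / 1024:.3f} MB)")
```

Under these assumptions each core has 64 KB of L1 cache and the whole chip has 1152 KB, or a little over 1 MB.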

So, what is the size of the Level 1 cache in your computer? If you do not know, on Linux you can use the lscpu command in the terminal.

It will provide you with a lot of information about the processor installed in your system, including the size of the Level 1 cache.
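On Linux the same information can also be read from the kernel's sysfs tree. The following is a minimal sketch, assuming the usual layout in which each directory under `/sys/devices/system/cpu/cpu0/cache/` contains `level`, `type` and `size` files. The function name `read_l1_caches` is made up for this example.

```python
from pathlib import Path

def read_l1_caches(cpu_dir="/sys/devices/system/cpu/cpu0"):
    """Return a dict such as {'Data': '32K', 'Instruction': '32K'} for one CPU."""
    sizes = {}
    for index in sorted(Path(cpu_dir, "cache").glob("index*")):
        level = (index / "level").read_text().strip()
        if level == "1":  # keep only the Level 1 entries
            cache_type = (index / "type").read_text().strip()
            sizes[cache_type] = (index / "size").read_text().strip()
    return sizes

# Prints an empty dict on systems that do not expose this sysfs layout.
print(read_l1_caches())
```

The values are per-core sizes for the chosen CPU, so they may differ from a system-wide total.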

L1 Cache Memory Location

As mentioned already, the L1 cache is located within the microprocessor chip, inside the CPU core.

The L2 cache is also located within the CPU chip, but unlike the L1 cache it is not located within the core of the CPU.

The two parts of the Level 1 cache are placed separately: the L1i, or instruction cache, is placed near the code-fetch logic, and the L1d cache is located close to the load and store units.

To find out where the Level 1 cache sits in your system and how it is organized relative to the other caches, you can use either of the following commands (provided by the hwloc package on Linux):

- lstopo or

- lstopo-no-graphics.

The output of either command displays the complete topology of the caches in the computer system.

For example, here is a portion of typical output:

L3 L#1 (4MB)

L2 L#12 (64KB) + L1d L#12 (16KB) + L1i L#12 (16KB) + Core L#12

PU L#12 (P#12)

L2 L#13 (64KB) + L1d L#13 (16KB) + L1i L#13 (16KB) + Core L#13

PU L#12 (P#13)

L2 L#14 (64KB) + L1d L#14 (16KB) + L1i L#14 (16KB) + Core L#14

PU L#12 (P#14)

L2 L#15 (64KB) + L1d L#15 (16KB) + L1i L#15 (16KB) + Core L#15

PU L#12 (P#15)

If you are lost looking at it, here it is explained in simple words.

The above output indicates that each core of this particular CPU has its own dedicated Level 1 cache, made up of a 16 KB data cache (L1d) and a 16 KB instruction cache (L1i), along with its own 64 KB Level 2 cache.

There is simple math behind it, and once you know it, it will be much easier to work out the size of the L1 cache in your computer.

First, understand that the Level 1 cache size may be reported in two different ways depending on the command used.

For example, the lscpu command may report the total Level 1 data cache size of the whole system, such as 128 KB. Whether it reports the total or the per-core size depends on the version of lscpu.

On the other hand, the lstopo-no-graphics command reports the L1 cache of each core in the CPU, such as 16 KB in the above example.

When you divide the larger figure by the smaller, the quotient is the total number of Level 1 data caches in the CPU.

With these example figures, the total number of L1 data caches is 128/16, or 8.

Since there is one dedicated L1 cache for each core of the processor, this number also gives the number of cores, which would be 8 for a system reporting these figures.
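The division above can be reproduced directly from a line of lstopo output. This is a small sketch rather than a full parser: the regular expression only handles sizes printed in KB, and the 128 KB total is the example figure from the text, not something read from a real system.

```python
import re

def l1d_kb_per_core(lstopo_line):
    """Extract the per-core L1d size in KB from one line of lstopo output."""
    match = re.search(r"L1d L#\d+ \((\d+)KB\)", lstopo_line)
    if match is None:
        raise ValueError("no L1d entry found in this line")
    return int(match.group(1))

line = "L2 L#12 (64KB) + L1d L#12 (16KB) + L1i L#12 (16KB) + Core L#12"
per_core_kb = l1d_kb_per_core(line)

total_kb = 128  # example total, as a system-wide lscpu figure might report
l1d_caches = total_kb // per_core_kb

print(f"{total_kb} KB total / {per_core_kb} KB per core = {l1d_caches} L1d caches")
```

For these figures the script reports 8 L1d caches, matching the calculation in the text.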

L1 Cache Speed

The access latency of the L1 cache is roughly 1 nanosecond. As a simple illustration, suppose the CPU reads from the L1 cache 100 times in a row and the hit rate is 100%.

In that case, the CPU will take about 100 nanoseconds to carry out those reads.

Why is Level 1 Cache the Fastest?

As mentioned already, this speed is due to the fact that the data the CPU needs while completing a task is typically in the Level 1 cache, which is accessed first by the CPU.

Also, since the Level 1 cache is in the core of the CPU, it is closest to the execution units and is therefore the fastest cache within the computer system.

Add to that, the L1 cache is designed to have the lowest latency of all the caches in the CPU.

Lower latency does not by itself reduce cache misses, but it does mean that every hit is served sooner.

As long as the required data is in the Level 1 cache, the CPU can access it and carry out an operation with almost no waiting.

It is true that computers today are designed to be much faster and more efficient, and other components such as DDR4 memory and super-fast SSDs also lower the latency significantly.

However, it is the Level 1 cache, rather than the SSD or the RAM, that is the primary source of operational data and instructions for the CPU.

In that perspective, the fast speed of the L1 cache is extremely important.

The smaller size of the Level 1 cache also promotes its speed. It keeps only the data that is most likely to be needed and evicts the rest to the other caches and to memory.

Therefore, the Level 1 cache combines a high hit rate with faster access times due to its close proximity to the cores of the CPU.

This closeness to the core also means that the Level 1 cache does not have to rely on the links between the cores, no matter how many of them there are in the CPU and how fast they are.

The Level 2 cache is farther from the cores, and the shared Level 3 cache is farther still, which makes each of them progressively slower than the L1 cache.

The larger transistors in the Level 1 cache, as well as the broader metal tracks in it, also add to the speed, though this comes at the cost of power and space, as mentioned earlier.

Another factor in the speed of the L1 caches is the separation of clock domains.

You may know that CPU cores run from their own clock generators at multi-GHz frequencies, which are also the fastest clocks within the system.

The Level 1 cache is typically synchronized with the clock of its core, since the cache is exclusive to that core.

On the other hand, the other caches, the L2 cache for example, may need to serve several CPU cores and may run in a different or slower clock domain.

This makes the L2 clock slower, and at the same time signals have to cross a larger tile that is farther from the core.

Crossing a clock domain boundary adds further delay. Add to that, there are also the fan-out issues of course.

The smaller size of the Level 1 cache also means that it is coupled very tightly to the CPU core.

Therefore, every time it is accessed, it returns the data very fast.

It is due to all these features and efficiencies that experts often refer to Level 1 caches as latency filters.

They point out that, in older designs, the Level 1 cache was on the processor while the Level 2 cache was external to it, which accounted for data propagation delays.

Experts also say that the distance of the cache from the core affects the setup times as well.

This is because every centimeter that an external cache is moved away from the CPU lengthens the signal path, and the timing has to allow for it. This causes lags.

Why Is the L1 Cache So Small?

As a rule of thumb in hardware design, the speed of a memory structure falls as its size grows.

This means that the larger the cache, the slower it will be.

You may ask why size should matter for a cache, which does not move anywhere in the first place.

Well, the data does. If the components are larger, the data will have to travel a longer distance through the wires and transistors to reach its destination.

This increases the time taken and eventually creates lags.

Remember, even moving data across the components of a computer depends on the physical properties of the conductors, because signal propagation is limited to a fraction of the speed of light.

In simple terms, the time taken for an operation to complete typically depends on the time taken by an electric signal to traverse the longest distance to and fro inside a memory tile.

Therefore, the smaller the distance travelled, the faster the performance, which is the whole intention, and this is why the L1 caches are small.

Latency and Bandwidth:

Now, considering access latency and bandwidth or throughput, both of these are crucial for the performance of the Level 1 cache.

If high access bandwidth is required, the cache needs many read and write ports to support it.

It is very difficult to build a large cache with all these properties. That is why the designers keep the size of the Level 1 cache comparatively small.

A larger L1 cache would also increase the L1 access latency.

This would reduce performance drastically, mainly because it would slow down every dependent load.

The extra latency would therefore be much harder for out-of-order execution to hide.

Design-Cost Perspective:

Also, from the design-cost perspective, the L1 cache has to be smaller and faster than the Level 2 cache.

Usually, L1 caches are more expensive to build per byte, and so each one has to be used efficiently, since it is bound exclusively to a single core of the CPU.

This means that when the size of the Level 1 cache is increased by any fixed amount, the added cost is multiplied by the number of cores, and by twice that number if both the instruction and data caches are enlarged.

Hit rate:

Also, the Level 1 cache has to be small, fast and simple, even if that limits its hit rate, because its speed is very important for the performance of the CPU.

Higher throughput, most of the time, means handling many reads and writes in a cycle, that is, multiple ports.

This needs more power and area for the same capacity than a cache with lower throughput.

This is another specific reason that the Level 1 cache size is kept small.

Speed Tricks:

The speed tricks that the L1 cache is known to use would also not work if the cache were bigger.

Most designs use a VIPT or Virtually Indexed Physically Tagged L1 cache in which all the index bits come from below the page offset.

This means that it behaves like a PIPT or Physically Indexed Physically Tagged L1 cache.

The main reason is that the low bits of a virtual address are identical to those of the physical address.

This avoids homonyms and synonyms, that is, false hits and the same data being stored in the cache twice.

At the same time, it still allows part of the hit or miss check to occur in parallel with the TLB lookup.

A VIVT or Virtually Indexed Virtually Tagged cache, on the other hand, does not need to wait for the TLB.

However, it needs to be invalidated after every change that is made to the page tables.

Wait Time:

It is also not feasible to make a larger Level 1 cache because it would either have to wait for the TLB result before it could start fetching the tags and feeding them into the corresponding comparators, or it would need more associativity.

Technically, the associativity would have to increase in order to keep the sum of log2(sets) and log2(line_size) less than or equal to 12.

Increasing the associativity leads to more ways in each set, and hence fewer total sets and fewer index bits.

Therefore, a 64 KB cache would have to be 16-way associative. It would still have 64 sets, but each of these sets would have twice the number of ways.

This makes it prohibitively expensive to increase the Level 1 cache size when you consider the power consumed and the latency.

That is why today it is common to have 8-way associative 32 KB L1 caches on x86, which typically uses 4 KB virtual memory pages.

The set of 8 tags can be fetched using only the low 12 bits of the virtual address because these are the same as those of the physical address, as said before.

Once again, this type of speed hack works only when the Level 1 cache is small enough and associative enough that the index does not have to rely on the TLB result.
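The constraint log2(sets) + log2(line_size) <= 12 can be checked for the configurations mentioned above. The sketch below assumes 64-byte cache lines and 4 KB pages, with sets = size / (line_size x ways). The function names are made up for this illustration.

```python
def log2_exact(n):
    """Return log2(n) for a positive power of two."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1

def vipt_geometry(size_bytes, ways, line_bytes=64, page_bytes=4096):
    """Return (sets, index bits + line-offset bits, fits within the page offset)."""
    sets = size_bytes // (line_bytes * ways)
    bits_needed = log2_exact(sets) + log2_exact(line_bytes)
    return sets, bits_needed, bits_needed <= log2_exact(page_bytes)

for size_kb, ways in ((32, 8), (64, 8), (64, 16)):
    sets, bits, fits = vipt_geometry(size_kb * 1024, ways)
    print(f"{size_kb} KB, {ways}-way: {sets} sets, {bits} address bits, "
          f"index fits in page offset: {fits}")
```

An 8-way 32 KB cache needs exactly 12 bits, an 8-way 64 KB cache would need 13, and only at 16 ways does a 64 KB cache return to 64 sets and 12 bits.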

Technical Aspects:

More technically, the lowest 6 bits of the address select the byte within the line while the next 6 bits index a set of 8 tags.

This set of 8 tags is fetched in parallel with the TLB lookup, so that the tags can then be checked against the physical page number bits of the TLB result.

This determines which of the 8 ways of the cache, if any, holds the data.
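The bit fields described above can be pulled out of an address with shifts and masks. This is a minimal sketch for the 32 KB, 8-way, 64-byte-line layout discussed here, with an arbitrary example address. In real hardware the tag comes from the physical address after translation, which this sketch does not model.

```python
LINE_BITS = 6  # lowest 6 bits: byte within the 64-byte line
SET_BITS = 6   # next 6 bits: which of the 64 sets to look in

def split_address(addr):
    """Split an address into (tag, set index, byte offset within the line)."""
    offset = addr & ((1 << LINE_BITS) - 1)
    set_index = (addr >> LINE_BITS) & ((1 << SET_BITS) - 1)
    tag = addr >> (LINE_BITS + SET_BITS)  # everything above the low 12 bits
    return tag, set_index, offset

tag, set_index, offset = split_address(0x12345)
print(f"tag={tag:#x} set={set_index} offset={offset}")
```

For the example address 0x12345 this gives tag 0x12, set 13 and byte offset 5. The tag is then compared against the 8 tags stored in that set.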

Therefore, the answer to the question of why Level 1 caches are small is speed.

A smaller cache takes less time to decode the control signals and the index, and to search the cache tags to find out whether or not there is a cache hit.

It also takes less time to drive the result out of the selected cells to the ALU or other unit that needs to use it.

Capacitance:

A smaller cache also reduces capacitance within the memory tile, which is a primary reason why delay grows so rapidly as the number of nodes driven by a single circuit increases.

It takes a much longer time to drive the address and tag inputs to a larger cache than to a smaller cache.

The same applies to driving the data buses over a larger area rather than a comparatively smaller one.

Therefore, with shorter wires to drive, a smaller Level 1 cache can itself sit close to the load-store data path, which makes it much easier for instructions to access it as they travel through the pipeline.

Therefore, logically speaking, if the Level 1 cache were larger than the Level 2 cache, then the L2 cache would not be needed in the first place.

It would be like storing your data and files on a tape drive when you could store all of them on a Hard Disk Drive.

In short, the case for a small Level 1 cache is hardly debatable.

If it were the same size as or larger than the Level 2 cache, the L2 cache would not be able to hold more cache lines than the Level 1 cache.

This means that it would not be able to catch the Level 1 cache misses.

Conclusion

So, with this article, you should now have an in-depth understanding of the Level 1 cache.

You now know how crucial it is among the different caches, such as the Level 2 and Level 3 caches, and how it affects the performance of the CPU and the computer system as a whole.