What is Non Uniform Memory Access (NUMA)?

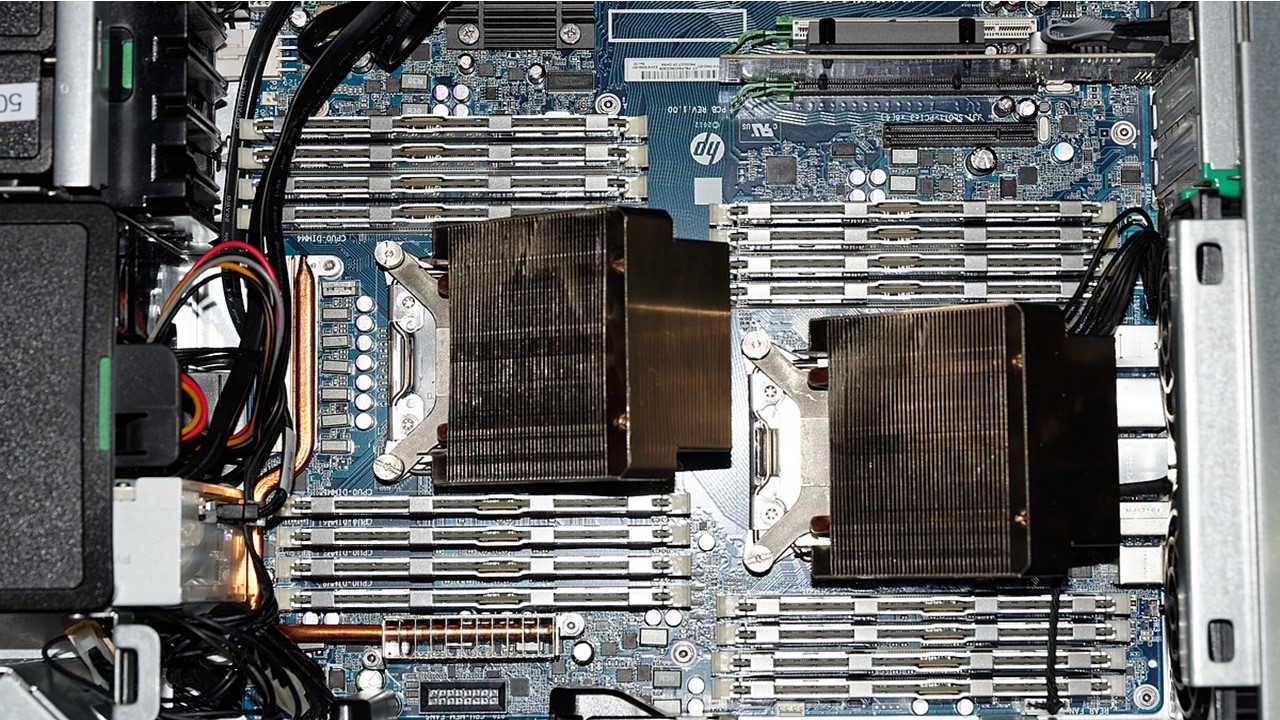

Non Uniform Memory Access, or NUMA, is a memory design used in multiprocessor computers. It describes how the processors and the memory of such a system are arranged relative to one another.

In simple words, NUMA is a multiprocessor configuration in which each processor is linked to its own dedicated memory.

KEY TAKEAWAYS

- Non Uniform Memory Access architecture divides the memory into portions and attaches each portion as local memory to the CPUs of a specific NUMA node for faster access.

- The NUMA architecture resolves bandwidth and latency issues and keeps the performance level of the system high by increasing the number of channels available to the CPU to access the separate local memory banks.

- NUMA-aware software can schedule threads or processes close to their data. Support exists in Microsoft Windows 7 and Windows Server 2008 R2, Java 7, FreeBSD 9.0, and the Linux kernel from version 2.5, with further improvements in versions 3.8 and 3.13.

- NUMA improves the performance of the CPU in several ways: it brings the memory much nearer to the processor to reduce latency, helps in scaling the VM, allows maximum optimization of I/O, and offers a linear address space.

Understanding Non Uniform Memory Access (NUMA)

In simple words, Non Uniform Memory Access architecture divides the whole memory to make it available to each processor.

If the processor needs more memory, it can surely access the local memory of other CPUs in the system as well, but, in that case, the latency will be a bit higher.

The NUMA architecture attaches separate memory to the CPUs for direct and faster access as compared to accessing remote memory.

However, the local memory attached to each processor is limited, so a system contains several such clusters of CPUs and memory.

These are known as NUMA Nodes.

More formally, Non Uniform Memory Access refers to a physical memory design used in multiprocessor architectures where the memory access time depends on the location of the memory relative to the CPU.

In Non Uniform Memory Access architecture the CPU can access the local memory of its own much faster than any other non-local memory which may be local to another processor or shared between other processors.

Typically, in a NUMA system, the memory, the processors and the I/O are all clustered together in cells.

In such an arrangement, communication within a cell has high bandwidth and low latency, while communication between cells is slower.

Applications designed to use this feature perform far better on NUMA systems because the memory in these systems is distributed physically but is shared logically.

Performance usually remains good even for applications that are not optimized to use this feature because the default behavior is intended to be benign if not beneficial.

The design still allows access to much bigger shared resources of the CPUs, the memory, and the disk space.

Non Uniform Memory Access is an architecture that connects multiple CPUs, or Central Processing Units, with the memory available in the system so that each processor has some memory of its own.

Every single NUMA node is linked to the others over a scalable network or input/output bus.

This allows a CPU to access, in an orderly way, the memory that belongs to other NUMA nodes.

With respect to the NUMA architecture, there are two types of memory that a CPU can access:

- Local memory – This is the memory that belongs to the same NUMA node as the CPU that is using it and

- Foreign memory – Also referred to as remote memory, this is memory that the CPU is using but which belongs to another NUMA node.

The term NUMA ratio signifies the cost of accessing remote memory relative to the cost of accessing the local memory of the CPU.

If this ratio is greater, the cost will be higher as well and it will therefore take a much longer time to access this particular memory.

The major advantages of accessing local memory are therefore higher bandwidth and lower latency.
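As a rough illustration of the NUMA ratio, the sketch below derives it from one row of a node distance table, such as the one Linux exposes in /sys/devices/system/node/node0/distance. The function names and the sample row are assumptions made for this example, and the distances are relative figures reported by the firmware rather than measured latencies.

```python
def parse_distance_row(text):
    """Turn a whitespace-separated distance row such as '10 21' into integers."""
    return [int(value) for value in text.split()]


def numa_ratio(distances, local_node, remote_node):
    """Relative cost of reaching remote_node's memory from local_node.

    `distances` is the distance row that belongs to local_node.
    """
    return distances[remote_node] / distances[local_node]


# Hypothetical row for node 0 on a two-node machine.
row = parse_distance_row("10 21")
print(numa_ratio(row, local_node=0, remote_node=1))  # 2.1
```

With these made-up values, an access to node 1 from node 0 is rated about twice as expensive as a local access.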

Why is NUMA Used?

Non Uniform Memory Access or NUMA architecture is used because it resolves the problem of latency and bandwidth with regards to memory access by dividing the main memory of the system and providing a portion of it to each processor.

This helps in averting the chances of the performance level dropping when several processors try to access the same memory.

The Non Uniform Memory Access architecture resolves this issue by:

- Increasing the number of separate local memory banks dedicated to each processor and

- Increasing the number of memory accessing channels which thereby enhances memory access concurrency in a linear fashion.

NUMA, just like SMP, is typically used for specific applications such as decision support systems and data mining.

This is because processing can be done by a number of CPUs that typically work on the common database in a group.

Non Uniform Memory Access is also used as an alternative approach to connect many small and cost-effective nodes through a high-performing connection.

Each of these nodes acts like an individual and small SMP system having a dedicated processor and memory.

The good thing is that the advanced memory controller helps the nodes to use the memory available on the other nodes as well.

This not only enhances the performance but also creates a single system image.

Evolution

According to Frank Denneman, modern system architectures do not provide truly Uniform Memory Access.

This is in spite of the fact that the systems are designed specifically with that intention.

What was needed was a form of parallel computing in which a group of processors cooperate with each other to work out a given task.

This speeds up what would otherwise be a traditional sequential computation.

This is how Non Uniform Memory Access architecture evolved over time as a logical next step in scaling from the SMP architectures.

NUMA was developed commercially during the 1990s by a group of companies that included:

- Unisys

- Convex Computer, which later became part of Hewlett-Packard

- Honeywell Information Systems Italy, which later became part of Groupe Bull

- Silicon Graphics, later Silicon Graphics International

- Sequent Computer Systems, which later became part of IBM

- Data General, which later became part of EMC and is now part of Dell Technologies and

- Digital, which later became part of Compaq, then HP and now HPE.

Techniques developed by these companies later featured in a number of Unix-like operating systems as well as in Windows NT to some extent.

The first commercial implementation of a Non Uniform Memory Access based UNIX system was the Symmetrical Multi Processing XPS-100 series of servers, designed for Honeywell Information Systems Italy by Dan Gielan of VAST Corporation.

Software and Operating System Support

The performance of a system built on Non Uniform Memory Access architecture depends on two specific software optimizations. These are:

- Data placement and

- Processor affinity.

Processor affinity allows binding and unbinding of the thread or a process to one particular processor or a set of processors.

This ensures that the thread or process runs only on the designated CPU or CPUs rather than on any CPU that happens to be available.
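To make binding and unbinding concrete, here is a minimal sketch that uses Python's os.sched_setaffinity and os.sched_getaffinity, which are available on Linux only. The helper names pin_to_cpus and unpin are made up for this example.

```python
import os


def pin_to_cpus(cpus, pid=0):
    """Bind a process (0 means the calling process) to the given CPU ids.

    Returns the affinity set reported by the kernel afterwards.
    Linux only: os.sched_setaffinity is not available on every platform.
    """
    os.sched_setaffinity(pid, cpus)
    return os.sched_getaffinity(pid)


def unpin(original_cpus, pid=0):
    """Restore a previously saved affinity set (the 'unbinding' step)."""
    os.sched_setaffinity(pid, original_cpus)
    return os.sched_getaffinity(pid)
```

A typical use would be to save the result of os.sched_getaffinity(0) first, call pin_to_cpus with a smaller set, and pass the saved set to unpin when the binding is no longer needed.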

Data placement, on the other hand, indicates the software modifications that allow keeping the data and code as close in the memory as possible.

Because Non Uniform Memory Access primarily affects memory access performance, software needs specific optimizations to benefit from it. Notable examples of NUMA support are:

- Microsoft Windows 7 and Windows Server 2008 R2 support for NUMA design over 64 logical cores

- Java 7 support for a NUMA-aware memory allocator and garbage collector

- Linux kernel version 2.5 for fundamental NUMA support

- Linux kernel version 3.8 for developing more competent NUMA policies

- Linux kernel version 3.13 for putting a process near the memory and handling other important cases and

- FreeBSD for NUMA architecture support in version 9.0.

With these optimizations, the software can schedule processes or threads close to their in-memory data.

Usage

Systems that support Non Uniform Memory Access architecture can address the whole memory directly, which lets them use the 64-bit addressing scheme more efficiently.

It is used for most of the major server-side applications as well as in game development.

This is because high-performance software is comparatively easy to write for this type of memory architecture.

Features

The Non Uniform Memory Access architecture comes with some noteworthy features that help it to enhance the performance of the CPU.

It places memory close to each processor, which helps to compensate for the effects of memory latency.

It also helps in scaling the VM for huge amounts of memory. And, most importantly, the NUMA effects of the devices allow maximum I/O optimization.

Another notable feature of NUMA is that it provides a linear address space. This feature helps all the processors to address all memory directly.

This particular feature also helps in exploiting the 64-bit addressing especially in the more sophisticated scientific computers.

The distributed memory feature also provides other benefits such as:

- Easier programming

- Faster data movement and

- Less chances of data replication.

Also, the FMS feature resolves all issues related to the programming standard since it provides a lot of different useful tools that are handy across the entire Non Uniform Memory Access architectures.

How Many NUMA Nodes Can a CPU Have?

Although the NUMA topology, or how the nodes are connected, matters more than the number of NUMA nodes in your CPU, the question still deserves a detailed answer.

Sometimes, people think that the number of NUMA nodes in their system is equal to the number of CPUs or sockets.

Well, it is not always true. In order to understand this, you must understand a few other things first.

You may find some systems have an equal number of Non Uniform Memory Access nodes in the CPU as the number of sockets they have.

However, it may not always be that way. It primarily depends on the architecture of the CPU, especially the design of the memory bus.

If the system comes with two sockets or CPUs, each of them may have its individual memory that it can access.

Each can also access the memory of the other, although this takes more CPU cycles, or a longer time, than accessing its own local memory.

In essence, a NUMA node identifies the specific part of the system memory that is local to a particular CPU.

You can check the number of nodes in your system and the Non Uniform Memory Access status by using commands like numastat or numactl --hardware.

Apart from the number of NUMA nodes, the output also shows other things such as:

- The amount of memory in every NUMA node

- The amount of that memory which is used and free

- The NUMA topology which shows the distances between each node in terms of relative latencies of memory access.
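The same kind of information can also be read programmatically. The sketch below assumes the Linux sysfs layout under /sys/devices/system/node, where each nodeN directory holds a meminfo file and a distance file. The function name read_numa_topology and the shape of the returned dictionary are choices made for this example.

```python
import re
from pathlib import Path


def read_numa_topology(root="/sys/devices/system/node"):
    """Return {node_id: {"mem_total_kb": int or None, "distances": [int, ...]}}.

    An empty dict means no node directories were found under `root`.
    """
    root_path = Path(root)
    if not root_path.is_dir():
        return {}

    topology = {}
    for node_dir in root_path.glob("node[0-9]*"):
        node_id = int(node_dir.name[len("node"):])
        info = {"mem_total_kb": None, "distances": []}

        # meminfo lines look like "Node 0 MemTotal:  16327212 kB".
        meminfo = node_dir / "meminfo"
        if meminfo.exists():
            match = re.search(r"MemTotal:\s+(\d+)\s+kB", meminfo.read_text())
            if match:
                info["mem_total_kb"] = int(match.group(1))

        # distance holds one relative cost per node, e.g. "10 21".
        distance = node_dir / "distance"
        if distance.exists():
            info["distances"] = [int(v) for v in distance.read_text().split()]

        topology[node_id] = info
    return dict(sorted(topology.items()))
```

The number of keys in the result is the number of NUMA nodes the kernel exposes, and each distances list is that node's row of the topology table.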

So, the number of CPUs or sockets does not necessarily equal the number of NUMA nodes in your system. For example:

- The AMD Threadripper 1950X comes with one socket but with 2 NUMA nodes and

- A dual-socket Intel Xeon E5310 system comes with two sockets but 1 NUMA node.

However, if you consider a single processor chip, irrespective of the number of cores it has, it will represent one or more SMP CPUs.

These can access the same memory directly.

The Non Uniform Memory Access architecture comes in only when there are several processor chips in a single computer system and they do not all share the physical memory of the system equally.

In such a configuration, every processor chip can be considered to be one single NUMA node.

This is because each of the processor chips is connected physically only to a portion of the memory of the system through a high speed link.

Memory that belongs to another processor chip is reached over a slower interconnect.

Does NUMA Enhance Performance?

Non Uniform Memory Access memory architecture used in multiprocessing computers surely enhances the performance of the system.

This is because in such systems the CPU is linked directly to its own local RAM and this particular architecture allows much faster access to the memory by the processor.

Generally, access to remote memory is slower but possible.

However, in NUMA implementations the cache coherence logic and the memory controllers are integrated into the processors, and normally all cores of a physical processor belong to one single NUMA node.

Moreover, the implementation of the Cluster on Die or COD technology by Intel in a few Haswell processors enables the CPUs to divide the cores into several Non Uniform Memory Access domains.

The operating system can make the best use of them accordingly.

As for the UMA or Uniform Memory Access systems, there is normally a single CPU that can access the memory of the system at a time.

This typically results in a significant drop in the level of performance especially in the SMP systems.

This degradation in performance is even more severe when there is a larger number of processors in the system.

Typically, the most significant factor of NUMA that enhances the performance of a system over UMA is that the CPUs in this memory architecture typically are assigned with their own local memory.

They can access this memory separately from other processors in the system.

How to Check NUMA?

You can check whether or not the Non Uniform Memory Access feature is enabled in your system from the kernel log shown by dmesg.

Typically, the NUMA feature will not be enabled if there are no records in the dmesg for its initialization during the boot up process.

However, it is possible that sometimes it may not show even if the Non Uniform Memory Access feature is enabled in your system.

This may be the result of the overwriting of the NUMA related messages that are contained in the kernel ring buffer.

In such situations, you can check whether NUMA is enabled or not in your system by following a few simple steps.

If NUMA is disabled in the BIOS, you can verify it by using commands such as:

- numactl --show and

- numactl --hardware.

In that case, the output will not list multiple nodes.

You can then tell from the dmesg output whether NUMA is disabled in the BIOS or the server does not support it.

In such cases it will show a specific message saying ‘No NUMA configuration found’ and the kernel may even fake a node.
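The dmesg check can be automated with a small text scan. The sketch below is only a heuristic: the function name and the three labels it returns are invented for this example, and the only kernel message it relies on is the ‘No NUMA configuration found’ text quoted above.

```python
def numa_status_from_dmesg(log_text):
    """Classify kernel log text by what it says about NUMA.

    Returns one of three labels invented for this sketch:
      "not_found_by_kernel"   - the 'No NUMA configuration found' message is present
      "numa_messages_present" - other lines mention NUMA
      "no_numa_messages"      - nothing mentions NUMA, for example because the
                                messages were overwritten in the kernel ring buffer
    """
    numa_lines = [line for line in log_text.splitlines() if "NUMA" in line]
    if any("No NUMA configuration found" in line for line in numa_lines):
        return "not_found_by_kernel"
    if numa_lines:
        return "numa_messages_present"
    return "no_numa_messages"
```

On a Linux host the text to pass in could be the output of the dmesg command, which may require elevated privileges to run.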

Sometimes, disabling the ACPI or the Advanced Configuration and Power Interface will also disable the Non Uniform Memory Access feature at the same time automatically.

Therefore, check that ACPI is not disabled by a kernel parameter in grub.conf.

How Can We Identify if NUMA is Enabled?

Normally, Non Uniform Memory Access has to be enabled in the Basic Input Output System or BIOS.

However, at times you may not know whether or not it is enabled.

If NUMA is enabled in the BIOS, then you can run the command ‘numactl --hardware’.

This will list the inventory of all those nodes that are available on the system.

How to Enable NUMA?

You will need to enable Non Uniform Memory Access before you enable the DPDK or the Data Plane Development Kit in your deployment.

The steps to follow to enable NUMA are:

- Verifying the Non Uniform Memory Access nodes on the host operating system

- Including the class to cluster.<NAME>.openstack.compute

- Setting the parameters in cluster.<name>.openstack.init on every compute node and

- Proceeding with additional environment configurations if you are making an initial deployment or re-running the salt configuration on the Salt Master node if you are making changes in your current environment.

In the end, you will need to reboot for the changes to take effect.

However, remember that if you want to set different values for every compute node you will need to define them in cluster.<name>.infra.config.

Should NUMA be Disabled?

You may wonder while using an application which is not NUMA aware whether or not you should disable Non Uniform Memory Access.

Well, for that you will first need to consider the possibilities. In that context, your application may be either of the following:

- It is simply not multithreaded or

- It is multithreaded but is not data parallel which means it does not use the threads to work on the same problem at the same time.

In such situations, you can attach the app to one of the Non Uniform Memory Access nodes.

You can use several tools or menu commands for this such as the “Set Affinity…” context menu command in the Windows Task Manager.
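On Linux, a similar effect can be sketched in a few lines of Python. The example assumes that the CPUs of a node are listed in /sys/devices/system/node/nodeN/cpulist in the kernel's usual range format; parse_cpulist and pin_to_node are hypothetical helper names.

```python
import os
from pathlib import Path


def parse_cpulist(text):
    """Expand a kernel CPU list such as '0-3,8-11' into a set of CPU ids."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return cpus


def pin_to_node(node, pid=0, root="/sys/devices/system/node"):
    """Bind a process to the CPUs of one NUMA node (Linux only)."""
    cpus = parse_cpulist((Path(root) / f"node{node}" / "cpulist").read_text())
    os.sched_setaffinity(pid, cpus)
    return cpus
```

Note that this only restricts where the process runs. It does not set an explicit memory policy, so it relies on the operating system allocating memory near the CPUs the process is running on.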

However, if the application or program is data parallel, you can attach it to one NUMA node and still use half of the processor cores available.

In systems, expensive or inexpensive, where remote memory access has higher latency than local access but similar bandwidth, the impact will be minimal if you do a lot of processing on every memory value.

On the other hand, when you disable Non Uniform Memory Access in these systems, the memory of both NUMA domains is interleaved at a fine granularity.

This will mean that the application will perform poorly since half of the total memory accesses will be remote and the other half will be local.
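The effect of a half local, half remote access pattern can be estimated with simple arithmetic. The latency figures in this sketch are hypothetical and chosen only to make the calculation easy to follow.

```python
def average_latency_ns(local_ns, remote_ns, local_fraction):
    """Expected latency when `local_fraction` of accesses hit local memory."""
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


# Hypothetical figures: 80 ns for a local access, 140 ns for a remote one.
interleaved = average_latency_ns(80, 140, local_fraction=0.5)  # two domains, interleaved
all_local = average_latency_ns(80, 140, local_fraction=1.0)    # every access is local
print(interleaved, all_local)  # 110.0 80.0
```

With these assumed numbers, interleaving raises the average access latency from 80 ns to 110 ns compared with keeping all accesses local.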

Ideally, enabling Non Uniform Memory Access will not harm the performance at all, even if it does not do any good.

This is mainly because it is the operating system that will manage the accesses of an application that is not NUMA aware.

Therefore, it may be on the same node or across the Non Uniform Memory Access nodes. It will mainly depend on a few specific factors such as:

- The current work pressure on the operating system

- The CPU you are using and

- The amount of memory you are using.

The operating system, no matter what, will try to keep data access fast.

However, if you disable Non Uniform Memory Access the operating system will not know whether or not it should even try to keep the execution CPU of the application close to the memory.

So, it is better not to disable Non Uniform Memory Access if you want the operating system to perform the way it should.

Automatic NUMA Balancing

Non Uniform Memory Access is an architecture designed to address the physical limitations of the hardware when a system needs a lot of memory and many CPUs.

The most significant limitation of all is the limited communication bandwidth between the CPUs and the memory.

There are several nodes in this configuration and each of them holds a subset of the CPUs and memory.

The speed of accessing the memory is decided by its location with respect to the CPU.

The performance of a workload therefore depends on whether the application threads access data that is local to the CPU on which each thread is executing.

Automatic NUMA Balancing moves data on demand to memory nodes that are local to the CPU that accesses that data.

Depending on the workload, this can boost performance dramatically on Non Uniform Memory Access hardware.

Implementation of the automatic NUMA balancing typically happens in three basic steps. These are:

- A task scanner periodically scans a portion of the address space of a task and marks the memory to force a page fault the next time the data is accessed. This fault is called the Non Uniform Memory Access Hinting Fault.

- Based on this fault, the data can be moved to a memory node associated with the task that is accessing the memory.

- The scheduler groups the tasks that share data in order to keep the task, the memory it accesses and the CPU it uses together.
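The first two steps can be pictured with a deliberately simplified toy model. This is not how the kernel is implemented; the Page class, the scan and access functions, and the returned labels are all inventions for illustration, and the third step (scheduler grouping) is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Page:
    node: int             # NUMA node that currently holds the page
    hinted: bool = False  # True once the scanner has marked the page


def scan(pages, scan_size):
    """Step 1: mark the first `scan_size` pages so the next access faults."""
    for page in pages[:scan_size]:
        page.hinted = True


def access(page, cpu_node):
    """Step 2: handle an access from a task running on `cpu_node`."""
    if not page.hinted:
        return "no fault"
    page.hinted = False
    if page.node == cpu_node:
        return "local hinting fault"
    page.node = cpu_node  # migrate the page next to the accessing CPU
    return "migrated"
```

In this model, a marked page that already sits on the accessing node only records a local hinting fault, while a marked page on another node is moved to the node of the CPU that touched it.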

Unmapping data and handling page faults incurs overhead, but it is typically offset by threads accessing data that is local to their CPU.

As for the configuration, static configuration is the established way to tune workloads on Non Uniform Memory Access hardware.

For this, memory policies can be set with numactl, taskset or cpusets.

Also, special APIs are used by the NUMA-aware applications.

However, automatic NUMA balancing should be disabled in situations where the static policies are already created because the data access in such situations will be local.

The command numactl --hardware will indicate whether or not the system supports Non Uniform Memory Access and, if so, it will display the memory configuration of the system.

Automatic NUMA balancing can be enabled or disabled for the current session simply by writing 1 or 0 to /proc/sys/kernel/numa_balancing.

Writing 1 will enable the feature and writing 0 will disable it.
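A minimal sketch of reading and changing this setting from Python follows. The helper names are made up for this example, the path parameter exists so the functions can be tried against an ordinary file, and writing to the real file normally requires root privileges.

```python
from pathlib import Path

NUMA_BALANCING = "/proc/sys/kernel/numa_balancing"


def is_balancing_enabled(path=NUMA_BALANCING):
    """Return True if the file holds a non-zero value."""
    return Path(path).read_text().strip() != "0"


def set_balancing(enabled, path=NUMA_BALANCING):
    """Write 1 or 0 to the file; on a real system this needs root."""
    Path(path).write_text("1\n" if enabled else "0\n")
```

A change made this way lasts only until the next reboot, which is why the kernel command line option described next is used for a permanent setting.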

The kernel command line option to enable or disable the feature permanently is: numa_balancing=[enable|disable].

When you enable automatic NUMA balancing, you can configure the behavior of the task scanner as well.

The task scanner balances the overhead of automatic NUMA balancing against the amount of time it takes to identify the best placement of the data.

The following tunables control this behavior, each with different implications:

- numa_balancing_scan_delay_ms – This is the amount of CPU time a thread must consume before its data is scanned. It avoids creating overhead for short-lived processes.

- numa_balancing_scan_period_min_ms and numa_balancing_scan_period_max_ms – These control how frequently the data of a task is scanned. The scan rate increases or decreases depending on the locality of the faults, and these settings set its minimum and maximum.

- numa_balancing_scan_size_mb – This determines how much address space is scanned each time the task scanner is active.

Finally, monitoring is also an important step: assign metrics to your workload and measure its performance with automatic NUMA balancing enabled and disabled to see the impact.

Profiling tools are also used for monitoring local as well as remote memory accesses, provided the CPU allows such monitoring.

Automatic NUMA balancing activity can be monitored with these counters in /proc/vmstat:

- numa_pte_updates – This indicates the number of base pages marked for Non Uniform Memory Access hinting faults.

- numa_huge_pte_updates – This indicates the number of transparent huge pages marked for Non Uniform Memory Access hinting faults. Combined with numa_pte_updates, it gives the total address space that was marked.

- numa_hint_faults – This records the number of Non Uniform Memory Access hinting faults that were trapped.

- numa_hint_faults_local – This indicates the number of hinting faults that were to local nodes. Combined with numa_hint_faults, it gives the percentage of local versus remote hinting faults. A high percentage of local hinting faults indicates that the workload is close to converging.

- numa_pages_migrated – This records the number of pages that were migrated because they were misplaced.
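The local hinting fault percentage mentioned above can be computed from these counters as follows. The sketch assumes the usual "name value" line format of /proc/vmstat; the function names are illustrative.

```python
def parse_vmstat(text):
    """Parse '/proc/vmstat'-style text into a {name: int} dictionary."""
    counters = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[1].isdigit():
            counters[fields[0]] = int(fields[1])
    return counters


def local_hint_fault_percent(counters):
    """Share of NUMA hinting faults that were local, or None if there were none."""
    total = counters.get("numa_hint_faults", 0)
    if total == 0:
        return None
    return 100.0 * counters.get("numa_hint_faults_local", 0) / total
```

Reading the file with open("/proc/vmstat").read() and passing the text to parse_vmstat gives the dictionary that local_hint_fault_percent expects. Sampling the value periodically shows whether the workload is moving toward mostly local accesses.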

The impact of automatic NUMA balancing on each workload may vary, but it simplifies tuning the workloads for high performance on NUMA machines.

With all that said, Non Uniform Memory Access is a memory architecture that engineers are highly likely to look for when they design multiprocessor systems.

The primary reason is its ability to enhance both the speed and performance of the processor.

This architecture helps them to incorporate more and more processors in a single computing system, since the tech market today looks for greater and quicker performance.

There is no doubt that this particular memory architecture will make the modern system faster and faster.

Conclusion

Though the subject is a bit complex, this article should have given you a working knowledge of Non Uniform Memory Access.

You should now know what the acronym NUMA means and how it affects the workload and the performance of the system as a whole.