
What is Shared GPU Memory?

Shared GPU memory refers to a graphics design in which the graphics chip has no dedicated video memory of its own and instead uses part of the system RAM.

The term also refers to the amount of system memory a graphics card can use as virtual memory when it runs out of its dedicated memory. Typically, this is up to half of the system RAM.

KEY TAKEAWAYS

- Most integrated graphics chips use shared memory because it reduces cost and weight and simplifies motherboard design.

- The amount of system memory the graphics chip reserves for itself is typically set in the BIOS or with a jumper.

- Shared GPU memory is useful because it allows a game or an app to keep running even when the video RAM (VRAM) is full.

Understanding Shared GPU Memory

Integrated graphics chips that share the main system memory usually come with a mechanism for this.

That mechanism, typically a BIOS option or a jumper setting, determines how much system memory the graphics chip may use.

This means the graphics system can be configured to use only as much RAM as it needs, leaving the rest for other components and applications.

However, this arrangement has a notable side effect.

When the graphics chip reserves a portion of the system memory for its own needs, that RAM becomes unavailable to every other part of the system.

In simple terms, if your computer has 512 MB of RAM and the integrated graphics chip reserves 64 MB for its own operations, that 64 MB is taken out of the total.

In that case, the operating system and applications effectively have only 448 MB of RAM available.
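The arithmetic above can be expressed as a small Python function. It is a minimal sketch that assumes a fixed reservation of the kind set in the BIOS or by a jumper; `ram_left_for_os` is a hypothetical helper, not part of any real tool.

```python
def ram_left_for_os(total_ram_mb: int, igpu_reserved_mb: int) -> int:
    """Return the RAM (in MB) still available to the OS and applications
    after the integrated GPU takes its fixed reservation.

    Assumes a static carve-out set in the BIOS or by a jumper; dynamic
    (on-demand) sharing is not modelled here.
    """
    if not 0 <= igpu_reserved_mb <= total_ram_mb:
        raise ValueError("reservation must be between 0 and the total RAM")
    return total_ram_mb - igpu_reserved_mb


# The example from the text: 512 MB of RAM, 64 MB reserved for graphics.
print(ram_left_for_os(512, 64))  # 448
```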

Sharing memory this way can make the whole system noticeably slower.

One reason is that main system RAM is typically much slower than the dedicated memory on a graphics card.

Another is that the graphics chip has to share the memory bus with the rest of the system, which adds contention.

These drawbacks arise when memory sharing is left entirely to the defaults. However, there is a way to offset them and improve performance.

That is to give the graphics a faster shared memory pool, as Silicon Graphics, Inc. (SGI) did in some of its computers, notably the O2/O2+ models.

Although the system memory is shared between the graphics hardware and the other components of the computer, it is allocated to the graphics only on demand.

The graphics subsystem and the main system can also communicate by redirecting pointers into that shared memory rather than copying data back and forth.

This type of arrangement is typically called UMA, or Unified Memory Architecture.

Many earlier personal computers used a shared memory arrangement between the graphics hardware and the CPU.

These computers used a single bank of DRAM for both programs and the display.

In fact, whether your system has a dedicated or an integrated graphics processing unit (GPU), the operating system will typically allow up to half of the main system memory to be used as shared GPU memory.

This is the case even though far more storage is available on solid-state drives (SSDs) and hard disk drives (HDDs).

The reason is simple: RAM is much faster than an HDD or an SSD.
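The half-of-RAM rule can be sketched the same way. This assumes the OS caps shared GPU memory at exactly half of system RAM, as described above; real limits depend on the operating system and driver, and both function names are hypothetical.

```python
def shared_gpu_memory_cap_gb(system_ram_gb: float) -> float:
    """Upper limit on shared GPU memory, assuming the OS allows up to half
    of system RAM (the rule of thumb described above)."""
    return system_ram_gb / 2


def total_gpu_memory_gb(dedicated_vram_gb: float, system_ram_gb: float) -> float:
    """Dedicated VRAM plus the shared-memory cap: the most memory the GPU
    can address under this simple model."""
    return dedicated_vram_gb + shared_gpu_memory_cap_gb(system_ram_gb)


# A PC with 16 GB of RAM and an 8 GB graphics card.
print(shared_gpu_memory_cap_gb(16))  # 8.0
print(total_gpu_memory_gb(8, 16))    # 16.0

# An integrated GPU, modelled here with no dedicated VRAM at all.
print(total_gpu_memory_gb(0, 16))    # 8.0
```

Treating an integrated GPU as having no dedicated VRAM is a simplification; many iGPUs also get a small reservation set in the BIOS.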

This may seem strange at first, but it is true, and there is a logical explanation for it.

To understand it properly, it helps to break the topic into four parts.

First – What is shared GPU memory, actually?

Fundamentally, it is a kind of virtual memory used by integrated graphics, and by a dedicated graphics chip when it runs out of its own memory.

Shared GPU memory therefore serves as VRAM (video random access memory), but it is distinctly different from dedicated GPU memory.

Second – Difference between dedicated and shared GPU memory.

Dedicated GPU memory is physical memory located on the graphics card itself.

Dedicated GPU memory sits close to the GPU's core chip, so it can be accessed much more quickly: the GPU does not have to go over the PCIe bus and through the motherboard to reach the main system RAM.

It is usually high-speed GDDR or HBM memory and is used for rendering, applications, and games, among many other things.

Shared GPU memory, on the other hand, is borrowed from the main system memory.

It is virtual memory: a reserved area, or allocation, of the main system RAM.

A dedicated GPU, if your system has one, uses it as VRAM only when its own memory is full, whereas an integrated GPU (iGPU) uses it all the time.

Shared GPU memory is, however, much slower than dedicated GPU memory because it is ordinary system RAM. Whenever the GPU has to use it, performance is bound to take a hit.
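The fallback from dedicated VRAM to shared GPU memory can be illustrated with a toy allocator. This is a simplified, hypothetical model rather than how any real driver works: each allocation goes entirely into one pool, and nothing is ever evicted or moved back into VRAM.

```python
class GpuMemoryModel:
    """Toy model (hypothetical, not a real driver API) of allocations
    spilling from dedicated VRAM into shared system memory."""

    def __init__(self, vram_mb: int, shared_mb: int) -> None:
        self.vram_free = vram_mb
        self.shared_free = shared_mb

    def allocate(self, size_mb: int) -> str:
        # Prefer the fast, on-card memory first.
        if size_mb <= self.vram_free:
            self.vram_free -= size_mb
            return "vram"
        # Fall back to the slower shared portion of system RAM.
        if size_mb <= self.shared_free:
            self.shared_free -= size_mb
            return "shared"
        raise MemoryError("out of GPU memory: VRAM and shared memory are both full")


gpu = GpuMemoryModel(vram_mb=4096, shared_mb=8192)
print(gpu.allocate(3000))  # vram
print(gpu.allocate(2000))  # shared (only 1096 MB of VRAM was left)
```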

Third – GPU memory hierarchy.

At this point, it helps to know a little about the GPU memory hierarchy, which is as follows:

- Streaming Multiprocessor (SM), the GPU's processing block; the registers and L1/SMEM below are built into each SM

- Registers, the fastest memory in a GPU, which are private to each thread and not shared

- L1/SMEM, the L1 cache and shared memory (SMEM) inside each SM; shared memory is visible to all threads of a thread block running on that SM

- L2 cache, which is shared across all streaming multiprocessors

- VRAM, the dedicated memory on the graphics card, and

- System memory (RAM), the DRAM that is used as shared GPU memory when the GPU's VRAM buffer is full.
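One way to read the list is by who can see each tier. The sketch below encodes the memory tiers in the order given above with a simplified visibility scope; the `Scope` levels and `tiers_visible_at` are illustrative assumptions (for example, the model ignores that SMEM is scoped to a single thread block and that the CPU can map some VRAM).

```python
from __future__ import annotations

from enum import IntEnum


class Scope(IntEnum):
    """Simplified visibility levels, narrowest first."""
    THREAD = 0  # private to one thread
    SM = 1      # shared by threads running on one SM
    GPU = 2     # shared by every SM on the GPU
    SYSTEM = 3  # shared by the GPU and the CPU


# (tier, widest scope that can see it), fastest tier first.
HIERARCHY = [
    ("registers", Scope.THREAD),
    ("L1 / SMEM", Scope.SM),
    ("L2 cache", Scope.GPU),
    ("VRAM", Scope.GPU),
    ("system RAM (shared GPU memory)", Scope.SYSTEM),
]


def tiers_visible_at(scope: Scope) -> list[str]:
    """Return the tiers every party at `scope` can see, fastest first."""
    return [name for name, widest in HIERARCHY if widest >= scope]


# Threads on different SMs can only share data through L2 or slower tiers.
print(tiers_visible_at(Scope.GPU))
# ['L2 cache', 'VRAM', 'system RAM (shared GPU memory)']
```

Only the last tier is visible to both the GPU and the CPU, which is why it is the one that doubles as shared GPU memory.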

Fourth – Should you increase or decrease the shared GPU memory?

If you are wondering whether you should tweak the setting to increase or decrease the shared GPU memory, the answer really depends on your setup.

If your system has a dedicated GPU, it is best to leave the setting as it is.

Depending on the graphics card and its VRAM capacity, the system may rarely or never need shared GPU memory.

There will not be any added performance issues, apart from frame drops or stutters when the VRAM buffer fills up and the operating system has to fall back on shared memory.

If your system has an integrated graphics chip, you can adjust the amount up or down, but again, it is best to avoid doing so.

This is because modern operating systems are designed to manage and allocate memory efficiently.

You are therefore better off letting the operating system handle it.

Moreover, as mentioned earlier, the iGPU can already draw on up to half of the main system RAM, so most workloads will hardly need more shared memory than that.

In practice, allocating additional shared GPU memory is likely to make a noticeable difference only for an application that relies heavily on the GPU rather than the CPU.

How to Use Shared GPU Memory?

The next question is whether you should use shared GPU memory and, if so, how.

In most cases, integrated graphics will do just fine. It can handle casual games and Adobe programs easily.

With a fairly powerful modern processor, it can handle 4K video as well.

So unless you have a specific need for dedicated graphics memory, the shared memory of integrated graphics should serve you well, with benefits such as longer battery life.

As you may already know, shared GPU memory is borrowed from the total system RAM.

On a system with a dedicated GPU, it is used only when the dedicated GPU memory runs out.

The operating system handles this automatically, turning to the main system RAM because it is faster than an SSD or HDD.

If you do want to change the shared GPU memory value on a Windows system, be aware that the process is not as simple as you might expect.

You have to open the BIOS (Basic Input/Output System) settings and make the necessary changes there to adjust or disable the shared GPU memory.

Remember that such changes are not recommended, because they will not improve system performance much in most workloads.

However, if you really need it, or your fingers are itching, specific programs such as Process Lasso can raise a program's I/O priority, and frameworks such as TensorFlow offer their own GPU memory settings.

In an OpenCL kernel, the `__local` qualifier lets a group of GPU threads (a work-group) share memory. Note that this is the GPU's fast on-chip shared memory, not the system RAM that is reported as shared GPU memory.

In a CUDA (Compute Unified Device Architecture) kernel, the equivalent qualifier is `__shared__`, and the memory is shared by the threads of a thread block.

You can also influence its size from the host code by calling `cudaFuncSetCacheConfig` with the `cudaFuncCachePreferShared` option.

This asks the GPU to configure the split between L1 cache and shared memory for that kernel before it is launched.

On GPUs that support a configurable split, this shrinks the L1 cache to make the maximum amount of `__shared__` memory available to each thread block.

Before you start making these tweaks, here is the warning once again: the main side effect is often reduced performance.

Shrinking the L1 cache leaves less room for caching local and global memory, which can hurt performance depending on the type of data.

Also, giving each thread block more shared memory means fewer blocks can be in flight on each SM at once. That leaves less work available to hide memory latency, which lowers performance.
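The trade-off in the last paragraph can be made concrete with a back-of-the-envelope occupancy calculation. The figures are assumptions chosen for illustration, loosely resembling a Fermi-class GPU where the "prefer shared" setting gives 48 KB of shared memory and 16 KB of L1 per SM; real limits depend on the GPU, and registers and threads per SM also cap occupancy. `resident_blocks` is a hypothetical helper, not a CUDA API.

```python
# Illustrative assumptions, loosely modelled on a Fermi-class GPU with the
# "prefer shared" split: 48 KB of shared memory per SM (leaving 16 KB for L1)
# and at most 8 resident thread blocks per SM.
SHARED_PER_SM_KB = 48
MAX_BLOCKS_PER_SM = 8


def resident_blocks(shared_per_block_kb: int) -> int:
    """Thread blocks that fit on one SM when shared memory is the only limit.

    Registers and threads per SM also cap occupancy; they are ignored here.
    """
    if shared_per_block_kb <= 0:
        return MAX_BLOCKS_PER_SM
    return min(MAX_BLOCKS_PER_SM, SHARED_PER_SM_KB // shared_per_block_kb)


for per_block_kb in (4, 8, 16, 24, 48):
    print(f"{per_block_kb:>2} KB per block -> {resident_blocks(per_block_kb)} blocks in flight")
# Prints 8, 6, 3, 2 and 1 blocks respectively.
```

Doubling the shared memory per block from 8 KB to 16 KB halves the blocks in flight from 6 to 3, which is the loss of latency hiding described above.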

Does Shared GPU Memory Increase Performance?

Typically, it depends on how much memory your system has and how fast it is.

The more memory you have and the faster it is, the better the system will perform while the GPU is sharing it.

In terms of gaming, however, playing games on integrated graphics is not recommended in the first place, because the results are usually poor.

You may add more RAM to your system for better gaming performance, but the gain will also depend on other vital factors, such as the graphics card and the processor.

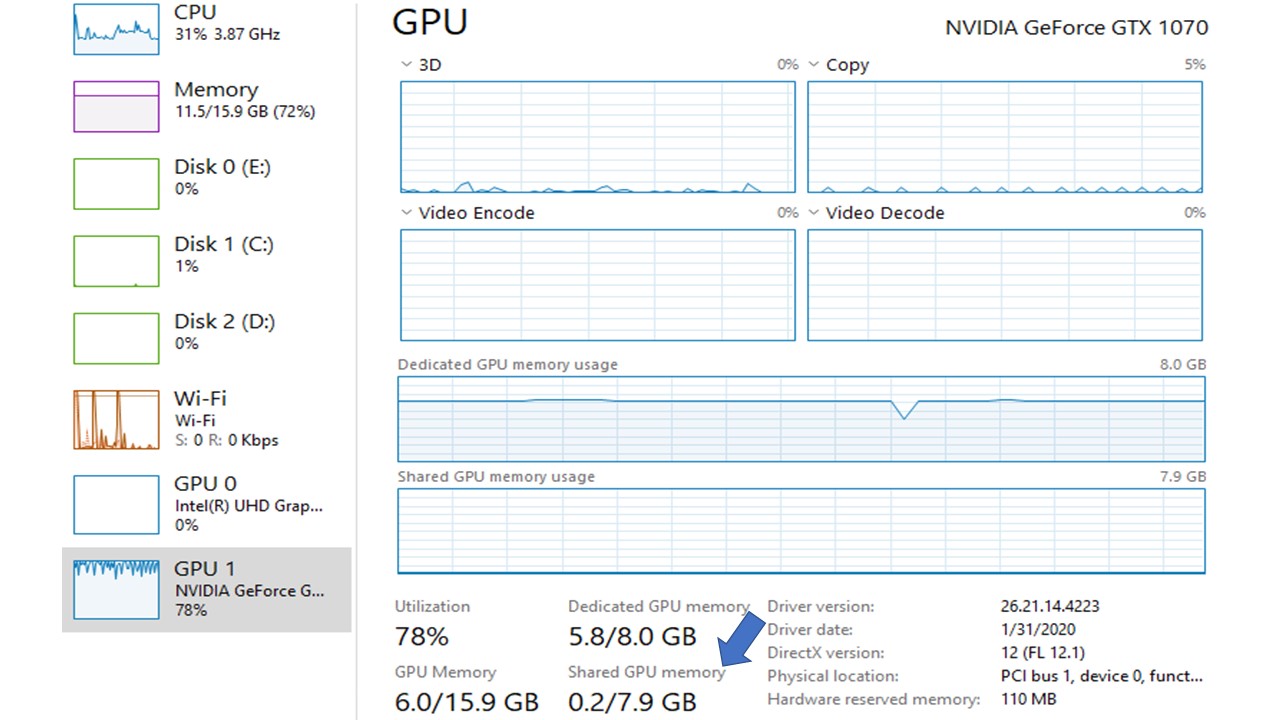

To find out whether you really need more RAM to improve gaming performance, run your most graphics-intensive game and check Task Manager while it is running.

If you find that 80% or more of the RAM is in use, you should add more RAM to your system.
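The Task Manager rule of thumb can be written as a one-line check. The 80% threshold comes from the paragraph above; `should_add_ram` is a hypothetical helper, and the used and total figures are assumed to be read off Task Manager while the game is running.

```python
def should_add_ram(used_gb: float, total_gb: float, threshold: float = 0.80) -> bool:
    """Rule of thumb: if a demanding game pushes RAM use to the threshold
    (80% by default) or beyond, consider adding more RAM."""
    if total_gb <= 0:
        raise ValueError("total_gb must be positive")
    return used_gb / total_gb >= threshold


# Figures read off Task Manager while the game is running.
print(should_add_ram(13.5, 16))  # True  (about 84% in use)
print(should_add_ram(9.0, 16))   # False (about 56% in use)
```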

While doing so, make sure you keep a sensible balance between the graphics card, the processor, and the RAM.

For example, it is usually not a smart decision to add more than 8 GB of RAM to a system powered by an Intel Core i3 processor.

Technically, shared GPU memory may or may not increase the performance of your system, but overall, it usually will not.

Even if you add more RAM, the extra memory is still system RAM: it only increases the amount available, and the GPU will still turn to it only after its own VRAM.

Moreover, GPU performance depends largely on the GPU's own resources rather than on system memory, which also takes longer to access. It also varies with how fast the GPU's cores are clocked.

Can You Use Shared GPU Memory for Gaming?

Yes, you can, and it helps especially when a game starts to lag after you have been playing it for some time.

This usually happens when too little memory is available to the GPU.

For a smooth gaming experience, you typically need both dedicated video memory and shared system memory.

If there is enough VRAM, the shared GPU memory will not be used at all.

However, without shared GPU memory, the game may not be able to keep running once the VRAM is full.

Shared memory lets the graphics processor fall back on system RAM readily whenever it is needed.

Is Shared GPU Memory Good?

Yes. One of the most significant benefits of shared GPU memory is that it allows an app or a game to keep running even when the GPU's VRAM buffer is full.

The data that no longer fits in the VRAM buffer is placed in the shared portion of system RAM instead.

Without shared GPU memory, the game, app, or system could crash as soon as the VRAM buffer fills up.

As you may already know, most graphics tasks are handled by your system's graphics card.

Shared GPU memory therefore acts as a sort of pass-through buffer that holds data for the tasks being performed.

It is also a shared area that the central processing unit (CPU) uses for tasks such as analytics and physics during rendering.

So make sure that your system offers at least shared GPU memory.

For better, uninterrupted performance without image stutters or lag, however, it is better to have dedicated GPU memory as well.

Conclusion

Shared GPU memory is the portion of main system RAM that the GPU uses as virtual memory, and modern operating systems are optimized to manage it.

As this article points out, you really do not need to do much to make use of it and get good performance from your system.