
What is a Byte?

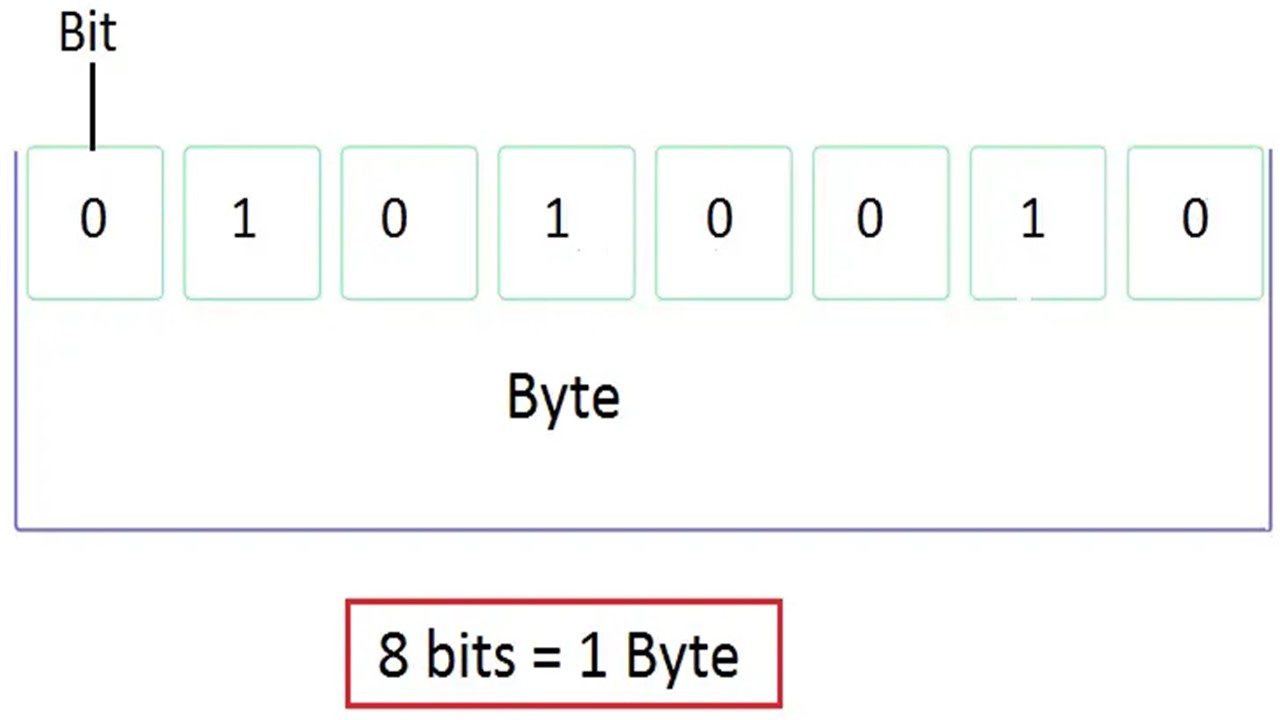

A byte is a unit of measurement for digital information. It is used both to measure data storage and to describe the data a computer system processes.

Historically, a byte was the number of bits a computer used to encode a single character of text. In simple terms, it is a basic unit of memory.

Technically, in data transmission systems, a byte is a contiguous sequence of bits within a sequential data stream.

It is usually 8 bits, but in some contexts it has been 7 bits, depending on whether a parity bit is needed for error checking.

KEY TAKEAWAYS

- A standard byte contains eight binary digits, called bits. Early on, the size of a byte varied, because many systems of that era used 6-bit character codes. Eventually, 8 bits became the standard byte size.

- When describing file sizes, the byte is the smallest unit that operating systems use. Larger multiples of the byte are defined in two major systems: one based on powers of 2 and one based on powers of 10.

- A byte is the unit of measurement for data that contains 8 bits, or eight 0s and 1s. One byte can therefore represent 2^8, or 256, different values.

- The word is spelled ‘byte’ rather than ‘bite’ to avoid accidental confusion with ‘bit’. A nibble, or four bits, is smaller than a byte, while a kilobit or kilobyte is larger. Larger sizes, and some transfer rates, are expressed in multiples of bytes.

- Some common programming languages, such as C and C++, also use byte as a data type, defining it as the addressable unit of data storage that can hold a single character.

Understanding Byte

The byte, a unit of measurement for data, was first named by Werner Buchholz in 1956. It later became a standard through the efforts of Bob Bemer and others.

Historically, a byte was the number of bits a computer system used to encode a single character of text.

For this reason, it is the smallest addressable unit of memory in most computer architectures.

Typically, a byte is a unit of data that is 8 bits (binary digits) long.

In most computers, a byte is used to represent a single character, which can be any of the following:

- A number

- A letter

- A typographic symbol
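As a minimal illustration, the Python sketch below shows the single byte that stores a number character, a letter, and a symbol. It assumes ASCII as the one-byte encoding; the function name `char_to_bits` is hypothetical.

```python
# Sketch: how one character maps to one byte, assuming ASCII encoding.
# char_to_bits is a hypothetical helper name.


def char_to_bits(ch: str) -> str:
    """Return the 8-bit binary pattern of a single ASCII character."""
    encoded = ch.encode("ascii")  # raises UnicodeEncodeError for non-ASCII input
    if len(encoded) != 1:
        raise ValueError("expected exactly one character")
    return format(encoded[0], "08b")


# A number, a letter, and a typographic symbol, each stored in one byte.
for ch in ["7", "A", "?"]:
    print(ch, ch.encode("ascii")[0], char_to_bits(ch))
# 7 55 00110111
# A 65 01000001
# ? 63 00111111
```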

Strings of bits made up of many bytes also form larger units of data for applications such as:

- A stream of bits forming a visual image for a program that displays images

- A string of bits forming the machine code of a computer program

In some computer systems, four bytes make up a word. Depending on their architecture and hardware, some systems can handle only single-byte instructions, while others can also handle two-byte instructions.
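The Python sketch below packs one 32-bit value into a four-byte word using the standard `struct` module. The four-byte word size and the little-endian byte order are assumptions for illustration; both vary by architecture.

```python
import struct

# Sketch: one 32-bit value stored as a four-byte word.
# The four-byte word and little-endian order ("<") are assumptions.
word = 0x12345678
packed = struct.pack("<I", word)  # "I" is a 4-byte unsigned int when "<" is used

print(len(packed))      # 4
print(packed.hex(" "))  # 78 56 34 12
```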

Similarly, writing systems with very large character sets, such as Chinese and Japanese, typically need two bytes to represent a character. Character sets that encode characters this way are called double-byte character sets.
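To see the difference, this Python sketch encodes a Latin letter with ASCII and a Japanese character with Shift_JIS, a double-byte character set. Both codecs ship with Python's standard library; the choice of these two encodings is an assumption for illustration.

```python
# Sketch: bytes per character in a single-byte encoding (ASCII) versus a
# double-byte character set (Shift_JIS). Both codecs are in the stdlib.
samples = [("A", "ascii"), ("日", "shift_jis")]

for ch, codec in samples:
    encoded = ch.encode(codec)
    print(f"{ch} in {codec}: {len(encoded)} byte(s), hex {encoded.hex()}")
```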

A byte is usually 8 bits, but not always, which creates ambiguity.

To remove this ambiguity, the Internet Protocol specification (RFC 791) and several other network protocol documents call an 8-bit byte an octet, distinguishing it from bytes of other, arbitrary sizes.

Types of Bytes

Although the byte is the smallest addressable unit of memory, larger storage capacities are expressed in multiples of bytes.

Eight larger units are in common use after the byte. Together with the byte itself, they are:

- Byte

- Kilobyte

- Megabyte

- Gigabyte

- Terabyte

- Petabyte

- Exabyte

- Zettabyte

- Yottabyte

These byte multiples are defined in two systems:

- Powers of 2, also called base-2 or binary

- Powers of 10, also called base-10 or decimal

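In the base-2 system, each unit is a power of 2, and values are often loosely rounded when described. For example, 1 megabyte in this system is 2^20, or 1,048,576, bytes, yet it is commonly described as about 1 million bytes. The IEC binary prefixes (kibi-, mebi-, gibi-, and so on) name these base-2 units unambiguously, so this value is 1 mebibyte (MiB).

In the base-10 system, by contrast, each unit is an exact power of 10, so 1 megabyte is exactly 10^6, or 1,000,000, bytes.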

For small units, the difference between the two systems is minor (about 2.4% for a kilobyte versus a kibibyte), but it widens with each larger prefix (about 10% for a terabyte versus a tebibyte).

Today, the base-10 system is the one most commonly used, particularly by storage manufacturers when labeling drive capacities.
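The widening gap can be checked with a short calculation. This Python sketch, with a hypothetical helper `binary_excess_percent`, computes how much larger each base-2 unit (1024^n bytes) is than its base-10 counterpart (1000^n bytes).

```python
# Sketch: percent by which each base-2 unit exceeds its base-10 counterpart.
# binary_excess_percent is a hypothetical helper name.
PREFIXES = ["kilo/kibi", "mega/mebi", "giga/gibi", "tera/tebi",
            "peta/pebi", "exa/exbi", "zetta/zebi", "yotta/yobi"]


def binary_excess_percent(power: int) -> float:
    """Percent by which 1024**power bytes exceeds 1000**power bytes."""
    return (1024 ** power / 1000 ** power - 1) * 100


for power, name in enumerate(PREFIXES, start=1):
    print(f"{name:<11} {binary_excess_percent(power):5.1f}%")
```

The gap grows from about 2.4% at the kilo/kibi level to about 20.9% at the yotta/yobi level.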

The following conversions show how many bytes each unit of data measurement contains, to help you better understand byte values and related units. Units smaller than one byte are shown as decimals:

- Bytes in a Bit (b) – 0.125

- Bytes in a Nibble (N) – 0.5

- Bytes in a Byte (B) – 1

- Bytes in a Kilobit (Kb) – 125

- Bytes in a Kilobyte (KB) – 1,000

- Bytes in a Kibibit (Kib) – 128

- Bytes in a Kibibyte (KiB) – 1,024

- Bytes in a Megabit (Mb) – 125,000

- Bytes in a Megabyte (MB) – 1,000,000

- Bytes in a Mebibit (Mib) – 131,072

- Bytes in a Mebibyte (MiB) – 1,048,576

- Bytes in a Gigabit (Gb) – 125,000,000

- Bytes in a Gigabyte (GB) – 1,000,000,000

- Bytes in a Gibibit (Gib) – 134,217,728

- Bytes in a Gibibyte (GiB) – 1,073,741,824

- Bytes in a Terabit (Tb) – 125,000,000,000

- Bytes in a Terabyte (TB) – 1,000,000,000,000

- Bytes in a Tebibit (Tib) – 137,438,953,472

- Bytes in a Tebibyte (TiB) – 1,099,511,627,776

- Bytes in a Petabit (Pb) – 125,000,000,000,000

- Bytes in a Petabyte (PB) – 1,000,000,000,000,000

- Bytes in a Pebibit (Pib) – 140,737,488,355,328

- Bytes in a Pebibyte (PiB) – 1,125,899,906,842,624

- Bytes in an Exabit (Eb) – 125,000,000,000,000,000

- Bytes in an Exabyte (EB) – 1,000,000,000,000,000,000

- Bytes in an Exbibit (Eib) – 144,115,188,075,855,872

- Bytes in an Exbibyte (EiB) – 1,152,921,504,606,846,976

- Bytes in a Zettabyte (ZB) – 1,000,000,000,000,000,000,000

- Bytes in a Yottabyte (YB) – 1,000,000,000,000,000,000,000,000
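Values like these follow directly from the prefix definitions, so they can be generated rather than memorized. This Python sketch, with a hypothetical function `bytes_in`, assumes 8 bits per byte, powers of 1000 for SI prefixes, and powers of 1024 for IEC prefixes, and covers prefixes up to exa/exbi.

```python
# Sketch: bytes contained in one unit, for SI (decimal) and IEC (binary)
# prefixes. Assumes 8 bits per byte; bytes_in is a hypothetical helper.
BITS_PER_BYTE = 8
DECIMAL = {"kilo": 1, "mega": 2, "giga": 3, "tera": 4, "peta": 5, "exa": 6}
BINARY = {"kibi": 1, "mebi": 2, "gibi": 3, "tebi": 4, "pebi": 5, "exbi": 6}


def bytes_in(prefix: str, unit: str) -> int:
    """Return the number of bytes in one <prefix><unit>, e.g. one megabit."""
    if prefix in DECIMAL:
        multiplier = 1000 ** DECIMAL[prefix]
    elif prefix in BINARY:
        multiplier = 1024 ** BINARY[prefix]
    else:
        raise ValueError(f"unknown prefix: {prefix}")
    if unit == "byte":
        return multiplier
    if unit == "bit":
        # 1000 and 1024 are both multiples of 8, so this division is exact.
        return multiplier // BITS_PER_BYTE
    raise ValueError(f"unknown unit: {unit}")


for prefix in list(DECIMAL) + list(BINARY):
    print(f"{prefix}bit: {bytes_in(prefix, 'bit'):,}  "
          f"{prefix}byte: {bytes_in(prefix, 'byte'):,}")
```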

Byte Examples

Bytes can be illustrated in different ways. For example, a single letter stored in a one-byte encoding such as ASCII takes 8 bits, so a four-letter word such as ‘hope’ takes 4 bytes, or 4 × 8 = 32 bits.
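The ‘hope’ arithmetic can be reproduced in Python, assuming ASCII so that each character occupies exactly one byte.

```python
# Sketch: bytes and bits in an ASCII-encoded word (1 byte per character).
word = "hope"
encoded = word.encode("ascii")
num_bytes = len(encoded)
num_bits = num_bytes * 8

print(num_bytes, num_bits)  # 4 32
print(" ".join(format(b, "08b") for b in encoded))
```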

There are also other practical and common examples of bytes, depending on what they are used to measure.

For example:

- A basic Latin character takes about a byte.

- The text of a short poem such as “Jabberwocky” takes about a kilobyte.

- The text of Harry Potter and the Goblet of Fire takes about a megabyte.

- A half-hour video takes about a gigabyte.

- The largest consumer hard drive available in 2007 held a terabyte.

- All MP3-encoded music made in 00 years would measure a petabyte.

- Global internet traffic in 2004 was about one exabyte per month.

- Global internet traffic in 2016 was about one zettabyte per year.

Several common programming languages also define a byte data type:

- C and C++ define a byte as the addressable unit of data storage large enough to hold a single character.

- Java's primitive byte type is defined as eight bits, or one byte, and stores signed values from −128 to 127.

- C# and other .NET languages provide byte as an unsigned data type, storing values from 0 to 255, and sbyte as a signed data type, storing values from −128 to 127.
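The difference between signed and unsigned bytes lies only in how the same 8 bits are interpreted. This Python sketch uses the standard `struct` module, where format `B` reads an unsigned byte and `b` reads a signed two's-complement byte, mirroring the ranges listed above.

```python
import struct

# Sketch: the same 8-bit patterns read as unsigned bytes (like C#'s byte)
# and as signed two's-complement bytes (like Java's byte or C#'s sbyte).
raw = bytes([0b11111111, 0b10000000, 0b01111111])

unsigned = struct.unpack("3B", raw)  # "B" = unsigned char, range 0..255
signed = struct.unpack("3b", raw)    # "b" = signed char, range -128..127

print(unsigned)  # (255, 128, 127)
print(signed)    # (-1, -128, 127)
```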

In data transmission systems, a byte is also a contiguous sequence of bits in a sequential data stream, representing the smallest distinguished unit of data.
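As a sketch of how a receiver might group a serial bit stream into bytes, the Python function below (a hypothetical `bits_to_bytes`) cuts a string of 0s and 1s into 8-bit chunks. It assumes the most significant bit arrives first; real links may send the least significant bit first.

```python
# Sketch: group a serial bit stream into 8-bit bytes.
# Assumes most-significant-bit-first order; bits_to_bytes is hypothetical.


def bits_to_bytes(bitstream: str) -> bytes:
    """Convert a string of '0'/'1' characters into bytes, 8 bits at a time."""
    if len(bitstream) % 8 != 0:
        raise ValueError("bit stream length must be a multiple of 8")
    return bytes(int(bitstream[i:i + 8], 2) for i in range(0, len(bitstream), 8))


print(bits_to_bytes("0100100001101001"))  # b'Hi'
```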

What is the Size of a Byte?

In practice, one byte is eight bits, or two nibbles. Historically, however, the size was not fixed: it depended on the computer's hardware and could vary from one system to another. The international standard IEC 80000-13 now defines the byte as eight bits.

Early on, a byte could be anything from 1 to 6 bits, because the equipment of that era commonly used 6-bit character codes. The 8-bit size became the standard later, with the introduction of the IBM System/360.

In some data transmission contexts, a byte can even be 7 bits, depending on whether parity or error correction is required.
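One common way 7-bit characters relate to parity is that a 7-bit ASCII code plus one parity bit fills 8 bits. This Python sketch adds an even-parity bit; the choice of even parity, and placing the parity bit in the most significant position, are assumptions for illustration, and `add_even_parity` is a hypothetical name.

```python
# Sketch: frame a 7-bit ASCII code with an even-parity bit so it fills 8 bits.
# Even parity and an MSB parity position are assumptions for this example.


def add_even_parity(code: int) -> int:
    """Return an 8-bit value whose top bit makes the total count of 1s even."""
    if not 0 <= code < 128:
        raise ValueError("expected a 7-bit value (0-127)")
    parity = bin(code).count("1") % 2  # 1 when the 7 data bits have an odd count of 1s
    return (parity << 7) | code


for ch in "AC":
    framed = add_even_parity(ord(ch))
    print(ch, format(ord(ch), "07b"), "->", format(framed, "08b"))
# A 1000001 -> 01000001
# C 1000011 -> 11000011
```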

Byte vs Bits

- In terms of size, a bit is the smallest unit of data a computer can represent, whereas a byte contains eight bits, or a set of eight 0s and 1s.

- In terms of value representation, a byte can represent 256 different values, whereas a bit can represent only 2.

- Bytes typically express the amount of data in a file or storage system, whereas bits typically express the speed of an internet connection or other data and signal transfer.

- Bit is short for binary digit. Byte is not an abbreviation, although it is sometimes expanded as Binary Element String.

- The symbol for byte is ‘B’ (uppercase), whereas the symbol for bit is ‘b’ (lowercase).
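The value counts and the bits-versus-bytes convention for speeds can be checked with simple arithmetic. This Python sketch, with a hypothetical helper `mbps_to_mb_per_s`, converts a link speed in megabits per second to megabytes per second by dividing by 8, ignoring protocol overhead.

```python
# Sketch: bits vs bytes. Assumes 8 bits per byte and decimal prefixes
# (1 megabit = 10**6 bits, 1 megabyte = 10**6 bytes).
BITS_PER_BYTE = 8


def mbps_to_mb_per_s(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (no overhead)."""
    return mbps / BITS_PER_BYTE


print("values per bit:", 2 ** 1)    # 2
print("values per byte:", 2 ** 8)   # 256
print("100 Mbps =", mbps_to_mb_per_s(100), "MB/s")  # 12.5
```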

What is the Symbol for 1 Byte?

The symbol for the byte, as defined in IEC 80000-13, IEEE 1541, and the Metric Interchange Format, is the uppercase letter B.

This means that 1 byte is written with its standard symbol as 1 B.

Conclusion

A byte, a set of eight bits (0s and 1s), is an important unit in the world of computers.

It measures the amount of data a file contains rather than the file itself.

It was originally designed for storing character data, but over the years it has become the basic unit of measurement for data storage.