
What is Cache Memory?

Cache memory is a small, very fast memory that sits between the CPU and the main memory of the computer system. It is the fastest memory in the computer after the CPU registers and, in modern systems, is built into the processor itself, although older systems sometimes placed part of it on the motherboard.

Cache memory is volatile and temporarily stores the data and instructions that are accessed frequently by the CPU for quicker processing.

KEY TAKEAWAYS

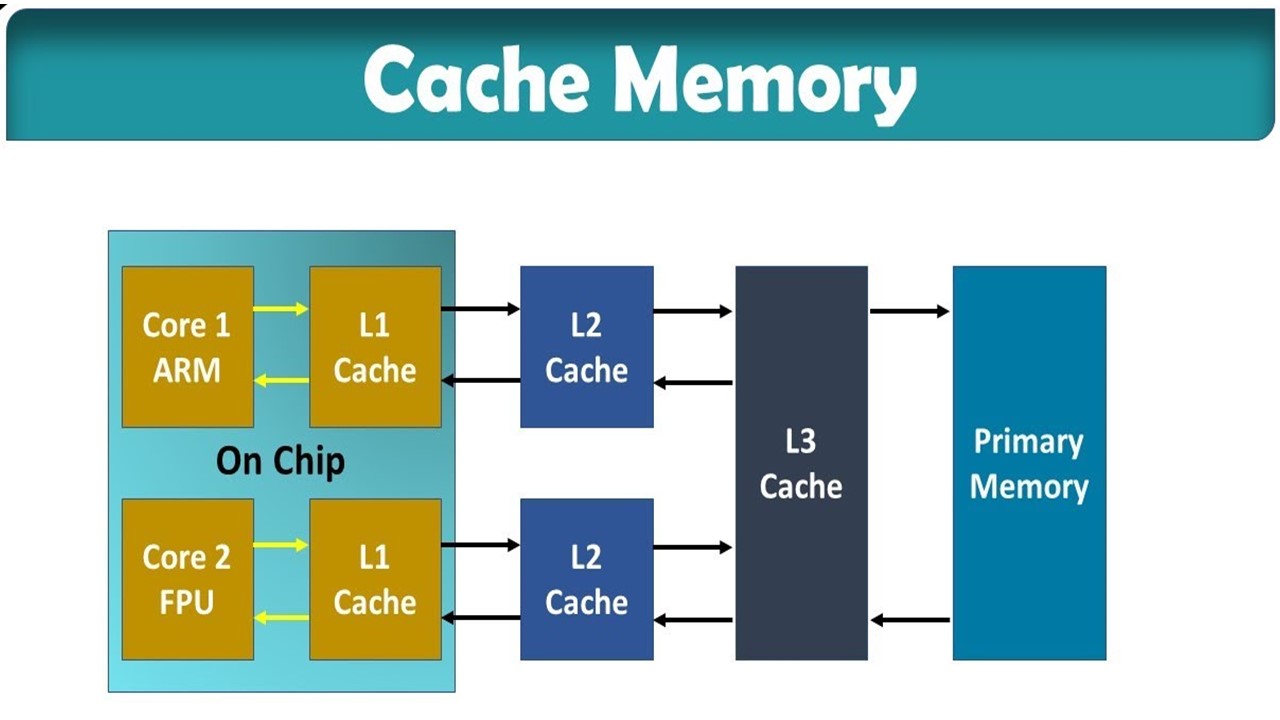

- There are three main types of cache memory, namely L1 (Level 1), L2 and L3 cache, each with different features, location and purpose.

- Cache memory enhances the performance, speed and overall responsiveness of the computer, reduces bottlenecks of the CPU, and ensures faster data access by the CPU to maximize its efficiency.

- Cache memory is mapped in three basic ways: direct mapping, associative mapping and set-associative mapping.

- Cache memory is expensive, cannot be increased unless the CPU is upgraded and offers very little storage space.

Understanding Cache Memory

The cache memory in your PC is built from SRAM, and the CPU uses it to store the data and instructions it has just used or uses frequently.

Thus, the processor can work quickly since it doesn’t have to fetch the same data from the main memory over and over.

Cache memory is thus also a type of RAM, but much faster than the DRAM used as main memory. In general, the more cache memory a CPU has, the faster it will be.

The difference is more noticeable in multi-core processors, whose cores constantly request data, and in that case, you will need a bigger cache to supply each of the cores with relevant instructions.

While cache memory is amazingly speedy, it is not as fast as the CPU registers.

But you shouldn’t confuse cache memory with cache. The latter is a different concept altogether, which points to the temporary holding of data anywhere in your system, while cache memory means the specific hardware component, i.e. the SRAM, that gives the CPU the fast memory it needs to function.

Typically, there are two major types of cache memory, which can be further divided into subgroups. These two are:

- Primary Cache – This is small and located on the CPU chip, and

- Secondary Cache – This is located between the primary cache and the main memory.

Since cache memory is much smaller than the main memory, what it holds at any moment is chosen according to two types of locality of reference, namely:

- Spatial locality of reference, which means that data stored close to a recently accessed address is likely to be needed soon, so a whole block of neighboring data is loaded at once, and

- Temporal locality of reference, which means that data accessed recently is likely to be accessed again soon, so recently used data is kept and the least recently used data is replaced first.
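The effect of the two kinds of locality can be illustrated with a toy simulation. The sketch below is not a model of any real CPU: the `simulate` helper, the four-line cache and the 8-byte block size are assumptions chosen only for illustration. It measures the hit rate of a tiny cache with least-recently-used replacement under three access patterns.

```python
from collections import OrderedDict

def simulate(addresses, num_lines=4, block_size=8):
    """Return the hit rate of a tiny LRU cache for a sequence of byte addresses.

    num_lines and block_size are illustrative values, not real hardware sizes.
    """
    cache = OrderedDict()  # block number -> True, ordered from oldest to newest use
    hits = 0
    for addr in addresses:
        block = addr // block_size         # neighboring addresses share a block
        if block in cache:
            hits += 1
            cache.move_to_end(block)       # mark as most recently used
        else:
            if len(cache) >= num_lines:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = True
    return hits / len(addresses)

sequential = list(range(64))             # spatial locality: neighbors in order
repeated = [0, 8, 16, 24] * 16           # temporal locality: same few blocks reused
scattered = [i * 64 for i in range(64)]  # no locality: every access is a new block

print(simulate(sequential))  # 0.875
print(simulate(repeated))    # 0.9375
print(simulate(scattered))   # 0.0
```

In this toy setup the sequential and repeated patterns hit most of the time, while the scattered pattern never hits, which is why caches pay off only when programs show locality.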

Cache memory typically acts as the buffer between the CPU and the RAM, and only the CPU can access it directly.

Per byte, cache memory is costlier than the main system memory, but it is quite economical as compared to the CPU registers.

Types of Cache Memory

The main kinds of cache memory have been listed below:

- 1. L1 Cache:

The primary or L1 cache is the fastest and the smallest. It is present inside the CPU itself and works at almost the same speed as the processor.

Every core has its own L1 cache, typically a few tens of KB and ranging up to about 256 KB. In terms of storage, it might be nothing, but regarding processing, it makes a massive difference.

In high-end processors, the L1 caches of all the cores combined can add up to 1 MB or so.

Most L1 caches are further divided into two parts:

- Data cache to store data.

- Instruction cache to store instructions.

- 2. L2 Cache:

The level 2 cache can be either inside or outside the processor, although modern CPUs place it on the chip. There might be a separate L2 cache for each core, or all of the cores might share a single L2 cache.

Its capacity typically ranges from 256 KB to 8 MB.

- 3. L3 Cache:

The third kind is usually shared by all the cores of a CPU and backs up the other two kinds of cache. It was once available in only the high-performance CPUs but is now common.

It has a higher capacity, roughly between 8 MB and 50 MB, but is slower than the other two.

Some top-notch multi-core processors even have an L4 cache, but this is uncommon. There is however another type of cache that you should know of.

- 4. Victim Cache:

It is usually a small, fully associative cache that stores the cache lines evicted from a particular level of cache.

For example, a victim cache placed between an L1 and an L2 cache stores data removed from the L1 cache.

In this case, the victim cache will be slower than the L1 cache, but faster than the L2 cache.

This ensures that the processor can still get fast access to data even after it has been evicted from the L1 cache.
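The behavior described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any particular processor implements it: the class name `L1WithVictim`, the direct-mapped L1 and the line counts are all hypothetical.

```python
from collections import OrderedDict

class L1WithVictim:
    """Direct-mapped L1 backed by a small fully associative victim cache."""

    def __init__(self, l1_lines=4, victim_lines=2):
        self.l1 = [None] * l1_lines   # each slot holds one block number
        self.victim = OrderedDict()   # evicted blocks, oldest first
        self.victim_lines = victim_lines

    def access(self, block):
        """Return where the block was found: 'l1', 'victim', or 'miss'."""
        slot = block % len(self.l1)
        if self.l1[slot] == block:
            return "l1"
        where = "victim" if block in self.victim else "miss"
        self.victim.pop(block, None)             # the block moves back into L1
        evicted = self.l1[slot]
        if evicted is not None:
            self.victim[evicted] = True          # keep the evicted line nearby
            if len(self.victim) > self.victim_lines:
                self.victim.popitem(last=False)  # drop the oldest victim
        self.l1[slot] = block
        return where

cache = L1WithVictim()
# Blocks 0 and 4 compete for the same L1 slot (0 % 4 == 4 % 4).
results = [cache.access(b) for b in [0, 4, 0, 4]]
print(results)  # ['miss', 'miss', 'victim', 'victim']
```

Without the victim cache, the last two accesses would have had to go all the way to the next cache level.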

Importance of Cache Memory

The presence of cache memory ensures that the data you access can be reached as fast as possible.

The CPU takes its data from the RAM rather than from the hard drive, since the RAM is faster.

Data fetched from the RAM is in turn copied into cache memory, which is extremely fast, so that it can be accessed even faster the next time.

When the CPU doesn’t find data in any of the cache levels or in the RAM, the data has to be fetched from the hard drive or SSD, and in that situation, you face a delay.

The speed of an SSD or hard drive is nothing compared to the speed of the CPU, and this is where the RAM comes in.

But the cache memory is even faster than the RAM itself.

Suppose you turn on your computer and then open up Paint or some such application.

Your PC takes some time to open the app after you have clicked the relevant buttons, depending on how fast your system is.

While the app runs, the cache keeps the instructions and data it uses most often close to the CPU, so that repeated operations do not have to wait for the RAM each time.

So, the cache memory is necessary for the following main reasons:

- To reduce bottlenecks of the CPU, and to ensure that the CPU can get data whenever it requires it without entering a wait state.

- To maximize CPU efficiency.

- To increase the overall responsiveness of the system.

How Does It Work?

Modern CPUs can run at clock speeds of up to roughly 5.0 GHz, which means that such a CPU goes through billions of clock cycles in a single second.

The cache memory aims to keep the data the CPU needs close at hand, because if the CPU had to request data from the RAM every time, you would have to wait around even for the simplest tasks to take place.
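A quick calculation shows why this matters. The sketch below uses the 5.0 GHz clock mentioned above together with an assumed RAM latency of 80 ns, which is an illustrative round number rather than a measured value. The `stall_cycles` helper is a name made up for this example.

```python
def stall_cycles(clock_ghz, latency_ns):
    """Clock cycles that pass while the CPU waits latency_ns for data.

    A clock of N GHz completes N cycles per nanosecond.
    """
    return latency_ns * clock_ghz

# 5.0 GHz comes from the text; 80 ns is an assumed RAM latency.
print(stall_cycles(5.0, 80.0))  # 400.0
```

Under these assumptions, every trip to the RAM costs the CPU about 400 cycles in which it could otherwise have been doing useful work.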

This data is moved from the RAM in fixed-size units called blocks and stored in cache memory in the form of an entry, which contains both the actual data and a tag recording the RAM address that the data came from.
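As a rough picture of what such an entry looks like, the sketch below copies one block out of a toy "RAM" and stores it with its address information. The names `CacheEntry` and `load_block` and the 64-byte block size are assumptions made for the example. Real hardware stores only part of the address as the tag.

```python
from dataclasses import dataclass

BLOCK_SIZE = 64  # bytes per block; an assumed size for this example

@dataclass
class CacheEntry:
    tag: int      # identifies which RAM block the data came from
    data: bytes   # copy of the block's contents

def load_block(ram: bytes, address: int) -> CacheEntry:
    """Copy the whole block containing `address` out of `ram`."""
    block_number = address // BLOCK_SIZE
    start = block_number * BLOCK_SIZE
    return CacheEntry(tag=block_number, data=ram[start:start + BLOCK_SIZE])

ram = bytes(range(256))       # toy 256-byte "RAM" where each byte equals its address
entry = load_block(ram, 130)  # address 130 lies in block 2 (bytes 128 to 191)
print(entry.tag, len(entry.data), entry.data[130 % BLOCK_SIZE])  # 2 64 130
```

Note that asking for a single address brings in the whole 64-byte block around it, so nearby addresses are already in the cache when they are needed.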

The way for running a particular task is that the processor looks for data in the L1 cache at first.

It is the fastest, and when the relevant data or instructions are found in it, it is called a cache hit.

When not found in the L1 cache, the same is searched for in the next levels of cache, the L2 and then the L3.

A failed lookup at any level is known as a cache miss, and when none of the cache levels has what the CPU is looking for, the request has to go to the RAM.

The access time is the lowest when the data is found in the L1. Modern CPUs have an L1 hit rate of about 95% on typical workloads, meaning that data can be found in the L1 cache roughly 95 out of 100 times it is searched.

In case of a miss in every cache level, the CPU then looks for the data in the RAM, and if it is not found there either, in the storage devices.

In that case, data has to first travel from the storage devices to the RAM, from the RAM to the L3 cache, and then to the L2 and L1 caches, and this takes up a considerable amount of time.
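The lookup order above can be turned into a rough estimate of the average time per access. In the sketch below, only the 95% L1 hit rate comes from the text. The other hit rates and all of the latencies are assumed round numbers, and `average_access_time` is a helper written for this example.

```python
def average_access_time(levels, memory_latency_ns):
    """Estimate the average access time in nanoseconds.

    levels is a list of (hit_rate, latency_ns) pairs ordered from L1 outward.
    """
    total = 0.0
    reach = 1.0  # fraction of accesses that get as far as the current level
    for hit_rate, latency_ns in levels:
        total += reach * latency_ns   # every access reaching a level pays its latency
        reach *= (1.0 - hit_rate)     # only the misses continue to the next level
    return total + reach * memory_latency_ns

# (hit rate, latency in ns) for L1, L2, L3; all but the 95% are assumptions.
levels = [(0.95, 1.0), (0.80, 4.0), (0.50, 20.0)]
print(average_access_time(levels, 80.0))  # roughly 1.8 ns
print(average_access_time([], 80.0))      # 80.0 ns with no cache at all
```

With these assumed figures, the average access takes roughly 1.8 ns instead of 80 ns, even though only a small fraction of the data fits in the cache.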

Blocks from the RAM are usually mapped to locations in the cache in one of the following three ways:

1) Associative Mapping: In this method, any block of information from the primary memory (RAM) can be placed in any of the cache lines. The hit rate is high because blocks rarely push each other out, but every line has to be checked to find a block, which needs more comparison hardware.

2) Direct Mapping: In direct mapping, each block from the RAM can go to exactly one cache line, usually chosen by the block number modulo the number of lines. This makes the search fast and the hardware simple, but two blocks that map to the same line keep evicting each other, and each cache line can hold only one block at a time.

3) Set-Associative Mapping: This third method is a bridge between the above two. The lines in cache memory are divided into equal-sized sets, and a block of data can be placed in any cache line of one particular set. This gives most of the hit rate of associative mapping at a lower cost, though it is more expensive to build than direct mapping.
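The three schemes differ only in how many cache lines a given block is allowed to occupy. The sketch below makes that concrete for a cache of 8 lines. The `candidate_lines` helper and the layout in which each set occupies consecutive lines are assumptions made for illustration.

```python
def candidate_lines(block, num_lines, ways):
    """Return the cache lines where `block` may be placed.

    ways == 1          -> direct mapping (exactly one line)
    ways == num_lines  -> associative mapping (any line)
    otherwise          -> set-associative mapping (any line within one set)
    """
    num_sets = num_lines // ways
    set_index = block % num_sets   # which set the block belongs to
    first = set_index * ways       # assumed layout: sets occupy consecutive lines
    return list(range(first, first + ways))

NUM_LINES = 8
print(candidate_lines(13, NUM_LINES, ways=1))  # [5]
print(candidate_lines(13, NUM_LINES, ways=2))  # [2, 3]
print(candidate_lines(13, NUM_LINES, ways=8))  # [0, 1, 2, 3, 4, 5, 6, 7]
```

The fewer candidate lines there are, the cheaper the lookup, but the more likely it is that two frequently used blocks end up fighting over the same line.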

The Pros

By now you must have realized how necessary cache memory is. Take a glance at the advantages it provides to you:

1. Better Speed

It doesn’t need to be mentioned that the RAM allows the processor to access files faster than a storage drive does.

Data copied from the RAM into the cache memory can then be reached by the CPU in a few nanoseconds.

This is because the cache memory is several times faster than the RAM.

Applications can thus run with greater speed because of the cache memory.

2. Reduces Bottleneck

A bottleneck is a condition where the rest of your system cannot work at the best speed because of a single component, and hence the system processes are either slowed down or stopped for a brief time altogether.

Cache memory reduces this problem since the data that the CPU needs is given to it almost immediately, and hence the CPU spends less time waiting.

While bottlenecks can never be fully removed, the more you can reduce them, the better it is for your PC’s performance. This is done by adding more RAM and, of course, by choosing a CPU with a bigger cache.

3. Allows maximum utility of the CPU

Even the most expensive RAM module can never match the speed of a decent processor, and this is where cache memory is most advantageous.

The L1 cache works at almost the same speed as the CPU itself, and hence you can make the most of the processor’s potential.

The Cons

1. Small Size

The high speed of the cache memory is possible partly because of its small size. No matter how expensive or top-class your processor may be, you will never find a lot of it.

2. Expensive

Cache memory is built from SRAM, which is far costlier per byte than the DRAM used for main memory.

The more of it you want to have, the more you will have to spend, because extra cache only comes with higher-priced processors.

3. Cannot be Increased

Another problem with cache memory is that it cannot be increased unless you upgrade your CPU.

Each CPU has a fixed amount of cache memory decided by its manufacturer that you cannot alter. Thus, more expenses.

Questions & Answers:

How much cache memory is good?

The more cache memory you can have in your system, the better it is. It all depends on how you use your PC and how costly a processor you can afford since high-end processors have up to 64 MB of total L3 cache memory, and even more. For the average user and the average daily usage, 4-6 MB of cache memory should be enough.

Where is the cache memory located?

The cache memory is present either in the processor, or physically very close to it.

Why is cache memory used in a computer?

The cache memory is used in your PC so that the processor has ready access to the data of a task, within a matter of nanoseconds. Had it needed to fetch the same data from the RAM or any of the storage drives every time, the process would have been far more time-consuming.

Can we delete cache memory?

No, as we said, cache memory refers to the storage component, and not the data itself. As it is part of the CPU, you cannot delete the cache memory. Being volatile, it loses its contents each time you shut down or restart your computer.

You can, however, delete the cached data that gets stored on your device as you use applications and browse around the internet. At some point, it becomes necessary to do so, or else your system slows down. But the two are different.

Can a computer run without cache memory?

In practice, no. Very early processors had no cache at all, but a modern CPU is designed around its cache and would be drastically slower without it.

Is cache memory important?

Yes, it is one of the fundamental aspects of the CPU. Virtually every modern CPU, however cheap, has some cache memory. Without it, the CPU would need to take all its data from the RAM, which is slower than cache memory.

How often should you clear your cache?

The cache, or the temporary files, should be cleared at some regular interval as you deem fit. That may be a week or a month, whatever you choose, but if too much of it accumulates, your system may show signs of sluggishness. That would be the signal that you should remove cache junk right away.

How do you check your cache memory?

One way of checking cache memory is the same on both Windows 7 and 10, and it is relatively simple. Open the command prompt on your PC, type wmic cpu get L2CacheSize, L3CacheSize and then press the Enter key. The sizes are reported in kilobytes.

You may also check cache memory through the Task Manager. Open the Performance tab, choose the CPU there, and you will find your CPU's cache sizes on the screen.

Also, if you know the model of your processor, then Google it and you should find what you’re looking for online.

Is higher cache memory better?

The more cache memory your CPU has, the better equipped it will be to handle high-end tasks. But going for a lot of cache memory (by a lot we mean a few more MBs) will also have a significant effect on your budget as SRAM is very costly. So, it depends upon your usage pattern.

Which type of cache memory is the most efficient?

The L1 cache is the most efficient because it is inside each CPU core and works at almost the same speed, so you can imagine how fast that is. The CPU always looks here first, and only then in the other levels like L2, L3, etc.

Conclusion

We hope we were able to give you the relevant information you were looking for regarding cache memory. Now that you know how it works, go make the most of it!