
What is Concurrent Computing?

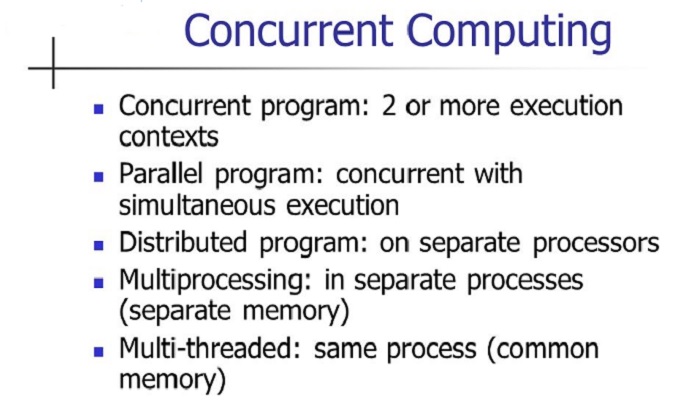

Concurrent computing, or concurrency, is a form of computing in which several computations are carried out during overlapping time periods instead of strictly one after another. On a single computing system, it describes how several threads or programs are managed so that all of them make progress.

Concurrency is based on multiple processes or threads, each of which can make progress on its part of a task without waiting for the others to complete.

In simple words, concurrent computing is a general approach to writing and running computer programs as several computations that can progress independently.

KEY TAKEAWAYS

- Concurrent computing refers to the process where a single computing system manages various programs or threads.

- On a single processor, concurrency uses alternating time slices, so operations appear to execute in parallel even though they actually do not.

- Concurrency offers a major alternative for computer systems or programs that need to emulate parallel processing without relying on multi-core designs.

- Concurrency is a property of a system, which may be a computer, a network of computers, or a program.

- Each process in this form of computing has its own execution point, or thread of control, so each computation can move ahead without waiting for the other processes to complete.

Understanding Concurrent Computing

Concurrent computing is the technique in which a single system or a set of systems carries out several calculations during overlapping periods of time.

The basic idea behind this type of computing is to run different instruction sequences or threads according to a specified schedule.

The origin of the concept is quite interesting.

It can be traced back to railroad operators, who had to let multiple trains share a single track while making sure that no accidents occurred.

It is this concept, applied as early as the 1800s, that concurrent computing builds on.

In concurrent computing, the programs run independently of the main, or parent, process.

The components or systems work together, but none of them needs to wait for the previous tasks to be completed.

Concurrent computing is distinct and should not be confused with synchronous (sequential) computing or with parallel computing.

Both of these differ from concurrent computing as follows:

- In sequential computing, computations are made one after the other, and each process waits for the earlier one to be completed, and

- In parallel computing, the computations are made on different processors at the same time.
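The contrast between sequential and concurrent execution can be sketched in Python with threads. This is a minimal illustration that assumes I/O-bound tasks, simulated here with `time.sleep`; the helper names `fake_io_task`, `run_sequentially`, and `run_concurrently` are hypothetical. In the sequential version each task waits for the previous one, while in the threaded version the waiting periods overlap. Even on a single core the waits can overlap, which is concurrency rather than parallelism.

```python
import threading
import time


def fake_io_task(duration: float) -> None:
    """Hypothetical stand-in for an I/O-bound task, such as a network call."""
    time.sleep(duration)


def run_sequentially(durations: list[float]) -> float:
    """Run tasks one after another; each waits for the previous one to finish."""
    start = time.perf_counter()
    for d in durations:
        fake_io_task(d)
    return time.perf_counter() - start


def run_concurrently(durations: list[float]) -> float:
    """Start one thread per task so that the waiting periods overlap in time."""
    start = time.perf_counter()
    threads = [threading.Thread(target=fake_io_task, args=(d,)) for d in durations]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every task has finished
    return time.perf_counter() - start


if __name__ == "__main__":
    tasks = [0.2, 0.2, 0.2]
    print(f"sequential: {run_sequentially(tasks):.2f} s")
    print(f"concurrent: {run_concurrently(tasks):.2f} s")
```

The sequential total is at least the sum of the delays, while the concurrent total should be close to the longest single delay.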

Concurrent computing can be considered a type of modular programming in which the entire computation is divided into several sub-computations or parts.

All these parts can be carried out independently and concurrently.

However, the exact timing of when a specific sub-computation in a concurrent system will be carried out depends entirely on the scheduling.

This means that the tasks are not necessarily carried out or completed at the same time.

For example, if there are two tasks T1 and T2, they can be handled as follows:

- T1 may be performed and completed before T2

- T2 may be performed and finished before T1

- T1 and T2 may be carried out alternately, interleaving their steps, and

- Both T1 and T2 may be performed simultaneously, at the same point in time, if more than one processor is available.

Which of these happens depends on a few specific factors, such as:

- The activities performed by the other processes

- The scheduling procedure of the operating system and

- The way in which the operating system deals with interrupts.
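How the scheduler's decisions show up in practice can be sketched with two threads in Python. In this minimal example (the names `worker` and `run_two_tasks` are hypothetical), T1 and T2 each append their steps to a shared log. Within each task the steps always stay in order, but how T1 and T2 interleave is decided by the scheduler and may differ from run to run, matching the orderings listed above.

```python
import threading


def worker(name: str, steps: int, log: list[str], lock: threading.Lock) -> None:
    """Record each step of a task; the scheduler decides how steps interleave."""
    for i in range(steps):
        with lock:  # protect the shared log from concurrent modification
            log.append(f"{name}-{i}")


def run_two_tasks(steps: int = 5) -> list[str]:
    log: list[str] = []
    lock = threading.Lock()
    t1 = threading.Thread(target=worker, args=("T1", steps, log, lock))
    t2 = threading.Thread(target=worker, args=("T2", steps, log, lock))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return log


if __name__ == "__main__":
    # T1 entirely first, T2 entirely first, or interleaved: the relative
    # order of the two tasks' entries is not guaranteed between runs.
    print(run_two_tasks())
```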

The different tasks in concurrent computing can be handled by separate computers, by particular applications, or across networks.

There are several Concurrency Oriented Programming Languages (COPLs), but the most commonly used languages with specific constructs for concurrency are Java and C#, both of which mainly use a shared-memory concurrency model.

Among languages that use a message-passing concurrency model, Erlang is probably the most widely used in industry; Limbo and Occam are other examples.

Concurrent Computing in Cloud Computing

Concurrent computing is a very useful and productive technique in cloud computing, and it is used especially often in Big Data environments.

This is mainly because concurrency helps in handling large data sets, which is fundamental to cloud computing environments.

For concurrent computing to work effectively and efficiently, the different systems within a Big Data architecture need to coordinate their tasks carefully, including:

- Exchange of data

- Scheduling different tasks and

- Allocation of memory.

This coordination helps multi-core systems handle and deliver very high volumes of computations, such as transactions.
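The coordination steps above (splitting the data, scheduling the pieces, and exchanging partial results) can be sketched with Python's `concurrent.futures.ThreadPoolExecutor`. This is a small illustration, not a Big Data framework; `split_into_chunks`, `process_chunk`, and `concurrent_sum_of_squares` are hypothetical names, and summing squares stands in for real per-chunk work.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data: list[int], n_chunks: int) -> list[list[int]]:
    """Divide the data set so that each chunk can be processed independently."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_chunk(chunk: list[int]) -> int:
    """Hypothetical per-chunk work: here, the sum of the squared values."""
    return sum(x * x for x in chunk)


def concurrent_sum_of_squares(data: list[int], workers: int = 4) -> int:
    chunks = split_into_chunks(data, workers)
    # The executor schedules the chunks onto a fixed pool of worker threads
    # and hands the partial results back to the caller, in chunk order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_results = pool.map(process_chunk, chunks)
        return sum(partial_results)


if __name__ == "__main__":
    print(concurrent_sum_of_squares(list(range(1, 101))))  # 338350
```

In CPython, threads do not speed up CPU-bound work like this because of the global interpreter lock; `ProcessPoolExecutor` offers the same `map` interface and is the usual choice when the per-chunk work is CPU-heavy.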

However, despite its benefits, concurrent computing in the cloud has a major downside: the complexity involved in developing it. Concurrent software often contains bugs that are hard to detect and notoriously difficult to fix.

Other significant issues in concurrent computing include:

- Allotment of global resources

- Optimal sharing of resources

- Tracing programming errors

- Locking or barring a channel

- Protecting multiple threads or processes from interfering with each other's operations within the operating system

- Running too many applications at once, which degrades performance

- Switching among applications

- Handling non-atomic and interruptible processes

- Processes being blocked for a long time, either because resources are unavailable or because they are waiting for resources from the terminal

- Starvation, where a process is perpetually denied the resources it needs because of scheduling errors, resource leaks, or a flawed mutual exclusion algorithm, and

- Deadlock, a state in which each member of a group waits for another member to take an action, such as releasing a lock or sending a message.
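Deadlock requires a circular wait, and one common way to prevent it is to acquire locks in a fixed global order. The following Python sketch assumes a hypothetical `Account` class and `transfer` function; it illustrates lock ordering only and is not the sole deadlock-avoidance technique.

```python
import threading


class Account:
    """Hypothetical account whose balance is protected by its own lock."""

    def __init__(self, account_id: int, balance: int) -> None:
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    """Move `amount` from src to dst, taking both locks in a fixed order."""
    if src is dst:
        return  # nothing to move; also avoids acquiring the same Lock twice
    # Without an ordering rule, thread A could hold src.lock while waiting for
    # dst.lock, and thread B could hold dst.lock while waiting for src.lock,
    # so each would wait for the other forever. Sorting by account_id makes
    # every thread acquire the locks in the same order, so no cycle can form.
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount


def repeat_transfers(src: Account, dst: Account, rounds: int) -> None:
    for _ in range(rounds):
        transfer(src, dst, 1)


def run_opposing_transfers(rounds: int = 1000) -> tuple[int, int]:
    """Two threads move money in opposite directions at the same time."""
    a, b = Account(1, 500), Account(2, 500)
    t1 = threading.Thread(target=repeat_transfers, args=(a, b, rounds))
    t2 = threading.Thread(target=repeat_transfers, args=(b, a, rounds))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return a.balance, b.balance


if __name__ == "__main__":
    print(run_opposing_transfers())  # (500, 500): the opposing transfers cancel out
```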

Moreover, multiprocessor programs and concurrent programming languages need a consistency model, also known as a memory model.

This model defines the rules for how operations on computer memory may occur and which results they are allowed to produce.

What Are the Advantages of Concurrent Computing?

One of the most significant advantages of concurrent computing is that it increases the throughput of the program, because a larger number of programs and tasks can be completed within a given time period.

According to Gustafson's law, when the workload grows with the number of processors, the achievable speedup grows linearly with the number of processors.
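Gustafson's law, mentioned above, is commonly written in the following standard form, where N is the number of processors and s is the fraction of execution time that the parallel system spends on the serial part of the workload (so 1 - s is spent on the parallelizable part):

```latex
S(N) = s + (1 - s)\,N = N - s\,(N - 1)
```

For example, with s = 0.1 and N = 8, the scaled speedup is 8 - 0.1 × 7 = 7.3.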

A few other benefits of concurrency are:

- It lets input and output operations overlap with calculations, making the best use of the available power of the Central Processing Unit (CPU)

- It improves the responsiveness of programs to user commands through the use of several threads

- It saves time, since tasks do not have to wait for earlier operations to be completed before delivering a result

- It allows better use of time, because the time saved from waiting can be used to complete other processes

- It offers a more natural and suitable program structure for certain problems and problem domains

- It makes managing threads and exchanging data between them easier than communicating between separate processes through Inter-Process Communication (IPC)

- It is more affordable, since it carries less overhead when all communication during the process takes place within a single processor

- It allows running several applications at the same time

- It allows better utilization of system resources and

- It enhances the performance of the operating system.

Is Multithreading Concurrent or Parallel Computing?

Multithreading often involves several processor cores and, in that case, is in fact parallel: the cores work together to accomplish the end result more efficiently and effectively. Concurrent tasks, on the other hand, only need to be in progress during the same period; they do not have to run at the same instant.

However, there is more to understand in this regard.

In a multithreaded process running on a multiprocessor with shared memory, each thread may run on a different processor at the same time. This results in parallel execution.

On the other hand, if the multithreaded process runs on a single processor, the processor switches its execution resources between the threads.

This results in concurrent execution: more than one thread makes progress during the same period, but the threads do not actually run at the same instant.

When a multithreaded program is used, the entire work is usually divided into several parts, and these parts can overlap in time.

These parts can run at the same time if several processors are available to assign them to.

Otherwise, in the absence of multiple processors, a single processor can interleave these parts in time.

Therefore, multithreading can be seen as a way of expressing concurrent computation in a program, which also allows the program to run in parallel when multiple processors are available.

It is certainly not about picking one or the other: concurrency relates to the independence of tasks, while parallelism refers to a specific mode of execution.

What Are the Concurrency Types?

There are three main types of concurrent computing: threading, asynchrony, and preemptive multitasking.

Threading:

In this type, the operating system or a program runs several threads at the same time. This reduces waiting time for both the system and the user and makes the best use of CPU power.

Asynchrony:

In this type, processes or programs work independently, for example to gather and analyze data from different databases.

These operations may take some time, so the program can carry on with other required steps to finish the task while it waits for the results of the data gathering and analysis.

This reduces the total time the system and the user need to complete the task, because the program works on different operations at the same time.
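Asynchrony of this kind can be sketched with Python's `asyncio`. In this minimal example, two simulated database queries and an extra preparation step run concurrently on a single thread; `query_database`, `prepare_report_layout`, `gather_demo`, the database names, and the delays are all hypothetical.

```python
import asyncio


async def query_database(name: str, delay: float) -> str:
    """Hypothetical stand-in for a slow database query."""
    await asyncio.sleep(delay)  # while this waits, other coroutines can run
    return f"rows from {name}"


async def prepare_report_layout() -> str:
    """Other required work that can proceed while the queries are pending."""
    await asyncio.sleep(0.05)
    return "layout ready"


async def gather_demo() -> list[str]:
    # All three coroutines run concurrently on one thread; gather() returns
    # their results in the order in which the coroutines were passed in.
    return await asyncio.gather(
        query_database("sales_db", 0.2),
        query_database("inventory_db", 0.1),
        prepare_report_layout(),
    )


if __name__ == "__main__":
    print(asyncio.run(gather_demo()))
    # ['rows from sales_db', 'rows from inventory_db', 'layout ready']
```

Because the waits overlap, the whole run should take roughly as long as the slowest query (about 0.2 s) rather than the sum of all three delays.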

Preemptive multitasking:

In this type, the operating system of the computer can interrupt a running program and switch to another one.

This prevents any single program from taking total control of the computer's processor.

This means that the other programs can keep running even if one program hangs.

Each of these types has different attributes, and special precautions must be taken to avoid race conditions, in which different processes or threads access the same shared data in memory in an inappropriate order.

These precautions ensure that no process is affected by an uncontrolled sequence of events caused by a different process.
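A race condition, and the usual precaution against it, can be sketched with a shared counter in Python. The `Counter` class and the `count_with_threads` helper are hypothetical. The unsafe version splits the update into a separate read and write, so concurrent increments may overwrite each other; the safe version wraps the update in a `threading.Lock`. Whether the unsafe version actually loses updates on a given run depends on the interpreter and on timing.

```python
import threading


class Counter:
    """Shared counter used by several threads."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self) -> None:
        # Read and write are separate steps; another thread may update
        # self.value in between, and that update is then overwritten.
        current = self.value
        self.value = current + 1

    def increment_safe(self) -> None:
        # The lock turns the read-modify-write into a critical section:
        # only one thread at a time can execute it.
        with self._lock:
            self.value += 1


def count_with_threads(use_lock: bool, threads: int = 4, per_thread: int = 10_000) -> int:
    counter = Counter()
    increment = counter.increment_safe if use_lock else counter.increment_unsafe

    def work() -> None:
        for _ in range(per_thread):
            increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return counter.value


if __name__ == "__main__":
    print("with lock:   ", count_with_threads(use_lock=True))   # always 40000
    print("without lock:", count_with_threads(use_lock=False))  # may be less than 40000
```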

Why is Concurrency Used?

Concurrency helps make the best possible use of CPU resources. It also lets the operating system run many different applications at the same time.

Without it, CPU resources would be wasted whenever they were used by only a single application.

Moreover, every application would have to be completed before the next one could start.

This would result in waiting time and poorer performance.

Operating systems in particular can use different concurrency methods to run concurrent programs.

Concurrent components can communicate explicitly in two main ways:

- Shared memory communication, where the concurrent components communicate by altering the contents of memory locations they share, which usually requires some form of locking (such as mutexes, semaphores, or monitors) to coordinate the threads, and

- Message passing communication, where the concurrent components communicate by exchanging messages, either asynchronously or in a synchronous "rendezvous" style in which the sender blocks until the message is received.
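Message passing can be sketched in Python with a thread-safe `queue.Queue` acting as the channel between a producer thread and a consumer thread. In this minimal example, the function names and the `None` end-of-stream marker are assumptions for illustration. The threads coordinate only by sending messages, and because the queue is unbounded, `put` does not block, which corresponds to the asynchronous style.

```python
import queue
import threading

SENTINEL = None  # hypothetical end-of-stream marker


def producer(channel: queue.Queue, items: list[str]) -> None:
    for item in items:
        channel.put(item)  # send a message through the channel
    channel.put(SENTINEL)  # tell the consumer that no more messages follow


def consumer(channel: queue.Queue, received: list[str]) -> None:
    while True:
        message = channel.get()  # blocks until a message arrives
        if message is SENTINEL:
            break
        received.append(message.upper())


def run_pipeline(items: list[str]) -> list[str]:
    channel: queue.Queue = queue.Queue()  # unbounded, so put() never blocks
    received: list[str] = []  # read by the caller only after both threads finish
    threads = [
        threading.Thread(target=producer, args=(channel, items)),
        threading.Thread(target=consumer, args=(channel, received)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return received


if __name__ == "__main__":
    print(run_pipeline(["a", "b", "c"]))  # ['A', 'B', 'C']
```

Python's standard library has no built-in rendezvous channel; Go's unbuffered channels, where a send blocks until a receiver is ready, are an example of the synchronous style.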

The two approaches have different performance characteristics.

However, message passing concurrency is often preferred over shared memory communication for the following reasons:

- It is easier to reason about

- It is generally considered a more robust form of concurrent programming

- A wide variety of mathematical theories and process calculi are available to analyze and understand such systems

- It can be implemented efficiently via symmetric multiprocessing and

- Per-process memory overhead and task-switching overhead are typically low.

In fact, concurrent computing is so pervasive that it is used almost everywhere: in low-level hardware on a single chip, at the programming language level through constructs such as channels, coroutines, and futures and promises, and at the networked system level, where systems are usually concurrent by nature.
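Futures and promises, mentioned above, can be sketched with Python's `asyncio`. Here an `asyncio.Future` serves both roles: one coroutine awaits it as a future, while another fulfils it with `set_result`, acting as the promise. The function names `fulfil_later` and `future_demo` and the value 42 are hypothetical, chosen only for illustration.

```python
import asyncio


async def fulfil_later(promise: asyncio.Future, value: int, delay: float) -> None:
    """Producer side: does some (simulated) work, then fulfils the promise."""
    await asyncio.sleep(delay)
    promise.set_result(value)


async def future_demo() -> int:
    loop = asyncio.get_running_loop()
    future = loop.create_future()  # placeholder for a value not computed yet
    producer = asyncio.create_task(fulfil_later(future, 42, 0.1))
    result = await future  # consumer side: suspends until set_result() is called
    await producer  # make sure the producer task has finished cleanly
    return result


if __name__ == "__main__":
    print(asyncio.run(future_demo()))  # 42
```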

Concurrent Computing Vs Parallel Computing

- Concurrent computing refers to a system or process in which parts of several processes are queued and make progress during overlapping periods, whereas traditional parallel computing refers to carrying out several programs at the same instant

- In concurrency on a single processor, processes take turns receiving the processor's attention, whereas parallel computing is usually performed using multiprocessor or multi-core designs

- Concurrent processing typically involves user-interactive programs and I/O operations, where it prevents wasted CPU cycles, while parallel computing involves large-scale distributed data processing on clusters of parallel processors

- Because the result of a concurrent program can depend on the timing of its execution, it may give different results from run to run, making it non-deterministic in nature; parallel computing, by comparison, typically uses a deterministic model that always gives the same result

- Concurrent computing is basically about interruptions, while parallel computing is about isolation

- Concurrency is commonly accomplished through threading, whereas parallel computing is achieved by running tasks on multiple processors at once

- Concurrent computing increases the amount of work handled at a given point in time, whereas parallel computing increases the speed of computation as well as the throughput of the system, and

- Debugging is quite hard in concurrent computing but comparatively easier in parallel computing.

Conclusion

Concurrent computing is very useful and pervasive in the world of computers because it lets users make the best use of the available resources and power of the Central Processing Unit.

It saves a lot of time, which can be used for other tasks, ultimately enhancing the overall performance of the system.