What is Context Switching in OS?

Context switching in an operating system is the saving of the state, or context, of a running process so that it can be reloaded later and resume execution exactly where it left off, while the saved state of another process is loaded in its place.

In technical terms, it is the mechanism behind multitasking: it lets several processes share a single CPU by switching between them.

As a result, a system does not need a separate processor for every process it runs.

KEY TAKEAWAYS

- Context switching is the mechanism that lets multiple processes use a single CPU, giving the user the impression that the system has multiple CPUs.

- During a context switch, the state of the current process is stored in its Process Control Block (PCB) so that it can be retrieved later, when the process resumes.

- The saved state includes the stack pointer, the value of the program counter, and the contents of the CPU registers.

- This state is saved before control of the CPU passes from the old process to the new one.

- Context switches happen during interrupt handling, during multitasking when the CPU is allotted a new process, and when switching from user mode to kernel mode.

Understanding Context Switching in Operating System

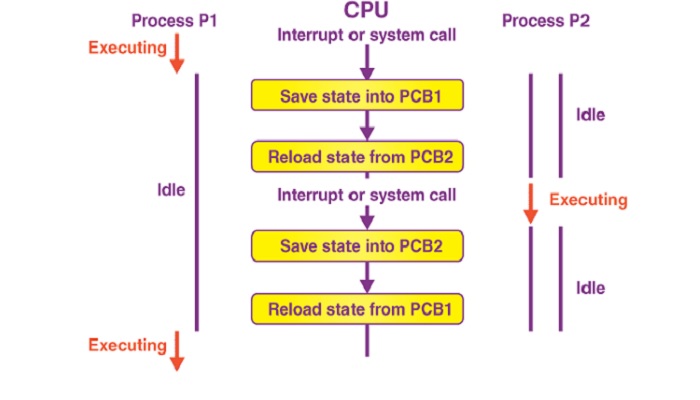

Context switching allows multiple processes to use a single CPU, which is what makes multitasking possible. Each switch involves:

- Saving the state of the old process, and

- Assigning the CPU a new process to execute.

The old process then waits in a queue while the new process runs, and it can resume executing when the running process stops.
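The two steps above can be sketched in Python. This is a toy model, not how any real kernel is written: the hypothetical `CPU` class holds only a program counter, a stack pointer and four general registers, and the hypothetical `PCB` class keeps a saved copy of them. Real kernels perform these steps in architecture-specific assembly.

```python
from dataclasses import dataclass, field


@dataclass
class CPU:
    """Toy CPU state (hypothetical): program counter, stack pointer, registers."""
    pc: int = 0
    sp: int = 0
    regs: list = field(default_factory=lambda: [0] * 4)


@dataclass
class PCB:
    """Toy process control block (hypothetical) holding a saved copy of the CPU state."""
    pid: int
    pc: int = 0
    sp: int = 0
    regs: list = field(default_factory=lambda: [0] * 4)


def save_context(cpu: CPU, pcb: PCB) -> None:
    # Copy the live CPU state into the PCB of the process being switched out.
    pcb.pc, pcb.sp, pcb.regs = cpu.pc, cpu.sp, list(cpu.regs)


def load_context(cpu: CPU, pcb: PCB) -> None:
    # Copy the saved state of the process being switched in back onto the CPU.
    cpu.pc, cpu.sp, cpu.regs = pcb.pc, pcb.sp, list(pcb.regs)


def context_switch(cpu: CPU, old: PCB, new: PCB) -> None:
    save_context(cpu, old)  # 1. store the state of the old process
    load_context(cpu, new)  # 2. give the CPU the new process's state


if __name__ == "__main__":
    cpu = CPU()
    p1, p2 = PCB(pid=1), PCB(pid=2, pc=100, sp=0x8000)
    cpu.pc, cpu.regs[0] = 42, 7   # P1 is running and has made some progress
    context_switch(cpu, p1, p2)   # P2 now owns the CPU
    cpu.pc += 5
    cpu.regs[0] = 99              # P2 overwrites a register while it runs
    context_switch(cpu, p2, p1)   # back to P1
    print(cpu.pc, cpu.regs[0])    # P1 resumes with pc=42 and regs[0]=7
```

The `list(...)` copies matter: without them the PCB and the CPU would share one list, and the second process would silently overwrite the first one's saved registers.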

Context switching plays a central role in keeping a computer system running efficiently.

As noted earlier, its primary purpose is to share a single CPU among several processes.

This sharing improves the overall performance of the system in several ways.

First, context switching saves the state of the current process before making a switch.

This prevents loss of data: the process can later continue from exactly where it left off.

That saves both time and system resources, since the process never has to start over from the beginning.

Similarly, when an interrupt occurs, the saved state allows the interrupted process to resume correctly once the interrupt has been handled.

Most importantly, context switching removes the need for multiple CPUs to handle multiple processes.

A single CPU can handle all of them.

Also, because the state of each paused process is preserved, the OS can keep a ready queue of processes waiting to be executed and pick each one in turn based on its priority.

Sharing I/O resources among many processes is difficult, but context switching helps here as well: a process waiting for I/O can be switched out so that another process uses the CPU in the meantime.

Here, too, priority matters: it not only increases the efficiency of the system but also helps the OS handle interrupts more promptly.

What Causes Context Switching?

A context switch is triggered in a few specific situations: a higher-priority thread becomes ready to run, the running thread's time slice has passed, or the running thread has to wait, for example for I/O.

Here are some major factors that may trigger context switching:

- Interrupts – When an interrupt occurs, for example when a disk signals that data requested by the processor has been read, a context switch may take place. The hardware automatically saves part of the context, which reduces the time needed to handle the interrupt.

- Multitasking – Context switches are routine in multitasking: the current process is moved off the CPU so that another process can run. On a preemptive system, the scheduler can force this switch even if the running process has not finished.

- User and kernel mode switching – The operating system may need to switch between user mode and kernel mode, for example when a process makes a system call. If that call blocks, the OS switches to another process.
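The three triggers named at the start of this section (the running thread has to wait, its time slice has passed, or a higher-priority thread has become ready) can be expressed as a small decision function. This is a hypothetical sketch: the name `switch_reason`, its parameters, the order of the checks, and the convention that a lower number means a higher priority are all assumptions, and real schedulers weigh many more factors.

```python
from typing import Optional


def switch_reason(
    running_blocked: bool,
    ticks_used: int,
    quantum: int,
    running_priority: int,
    best_ready_priority: Optional[int],
) -> Optional[str]:
    """Return why the running thread should be switched out, or None to keep it.

    Assumptions: a lower number means a higher priority, and
    best_ready_priority is None when no other thread is ready.
    """
    if running_blocked:
        return "running thread has to wait"
    if best_ready_priority is None:
        return None  # nothing else can run, so the current thread continues
    if best_ready_priority < running_priority:
        return "higher-priority thread is ready"
    if ticks_used >= quantum:
        return "time slice has passed"
    return None
```

Checking the blocked case first reflects that a thread which cannot continue must give up the CPU regardless of priorities or remaining time.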

What Are the Steps of Context Switching?

A context switch between two processes follows a fixed sequence of steps. For the process currently running on the CPU, the OS saves its context, updates the important fields of its process control block (PCB), such as its state, and moves the PCB to the ready queue (or to a waiting queue if the process is blocked). It then selects a new process to execute.

Selecting and starting the new process involves:

- Finding the highest-priority process in the ready queue

- Removing it from the queue

- Loading its context, and

- Executing it.

When the earlier process is later selected to run again, its context is restored onto the CPU.

This is done by loading the register values saved in its process control block.

Besides switching between processes, a context switch also updates memory-management data structures, such as the active page table, when necessary.
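The sequence of steps above can be simulated in a few lines of Python. This sketch illustrates the steps under stated assumptions rather than modelling a real scheduler: the hypothetical `ToyScheduler` keeps ready processes in a priority heap (`heapq`), treats a lower number as a higher priority, and reduces the saved context to a single program-counter value. A process that blocks is marked as waiting but, for brevity, no wait queue is modelled.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass
class PCB:
    pid: int
    priority: int        # assumption: a lower number means a higher priority
    state: str = "new"
    saved_pc: int = 0    # stands in for the full saved register context


class ToyScheduler:
    """Hypothetical single-CPU scheduler following the steps described above."""

    def __init__(self) -> None:
        self.ready: list = []              # heap of (priority, seq, pcb)
        self._seq = itertools.count()      # FIFO tie-breaker within one priority
        self.cpu_pc = 0                    # the toy CPU's only register
        self.running: Optional[PCB] = None

    def make_ready(self, pcb: PCB) -> None:
        pcb.state = "ready"
        heapq.heappush(self.ready, (pcb.priority, next(self._seq), pcb))

    def switch(self, blocked: bool = False) -> Optional[PCB]:
        old = self.running
        if old is not None:
            old.saved_pc = self.cpu_pc     # save the context into the PCB
            if blocked:
                old.state = "waiting"      # update the PCB; wait queues are not modelled
            else:
                self.make_ready(old)       # update the PCB and move it to the ready queue
        if not self.ready:
            self.running = None
            return None
        _, _, new = heapq.heappop(self.ready)  # highest-priority process, removed from the queue
        self.cpu_pc = new.saved_pc         # load its context
        new.state = "running"              # execute it
        self.running = new
        return new
```

The tie-breaking counter keeps processes of equal priority in first-come order and also prevents `heapq` from ever comparing two `PCB` objects directly.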

What Are the Different Types of Context Switching?

There are two basic types of context switch: synchronous and asynchronous.

Synchronous context switch:

This type of context switch happens when a task explicitly suspends itself by calling an OS interface that may cause it to block, such as a request to wait for I/O.

Asynchronous context switch:

This type of context switch is triggered by an interrupt that the system must handle, such as a timer interrupt that ends a task's time slice.

The state of the interrupted task is saved when the interrupt handler is entered, and when the handler exits, the state of a different task is restored.
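The difference between the two types can be shown with a toy round-robin simulation. Everything here is hypothetical: each `Task` is a list of operations, where `"work"` consumes one clock tick and `"block"` stands for a blocking OS call; the saved context is just the index of the next operation; and the timer interrupt is simulated by counting ticks against a `quantum`. To keep it short, a blocked task is treated as ready again immediately.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    program: list   # hypothetical ops: "work" uses one tick, "block" is a blocking OS call
    pc: int = 0     # the saved context: index of the next op to run


def run(tasks, quantum):
    """Round-robin over tasks; return the kind of each switch away from a task."""
    ready = deque(tasks)
    switches = []
    while ready:
        task = ready.popleft()
        ticks = 0
        while task.pc < len(task.program):
            op = task.program[task.pc]
            task.pc += 1
            if op == "block":
                # Synchronous: the task suspends itself through an OS call.
                switches.append(("synchronous", task.name))
                break
            ticks += 1
            if ticks == quantum and task.pc < len(task.program):
                # Asynchronous: a (simulated) timer interrupt ends the time slice.
                switches.append(("asynchronous", task.name))
                break
        if task.pc < len(task.program):
            ready.append(task)  # simplification: a blocked task is ready again at once
    return switches
```

With `quantum=2`, task A with program `["work", "block", "work", "work"]` blocks itself and task B with program `["work", "work", "work"]` is preempted by the simulated timer, so `run` returns `[("synchronous", "A"), ("asynchronous", "B")]`.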

Context Switching Examples

A common example: while one process is executing on the CPU, another process arrives in the ready queue.

If the new process has a higher priority and the scheduler is preemptive, the OS makes a context switch so that the new process can run.

The state of the current process, including its register contents, is saved in its Process Control Block. When the OS later switches back, it retrieves this state and reloads it onto the CPU.

What is Context Switch Time in OS?

Context switch time is the interval between one process stopping and the next one starting: the time it takes to move the current process off the CPU and bring a waiting process in for execution. It is incurred every time the OS switches between tasks during multitasking.

Context switch time typically covers:

- Saving and restoring register context

- Switching context of the memory manager and

- Restoring and saving kernel context.

One way to measure it is to record the timestamp at which one process executes its last instruction before the switch and the timestamp at which the next process executes its first instruction.

Over a longer run, you can also work from the total elapsed time T. On a single CPU, T is made up of time spent executing processes, time the CPU sits idle because every process is waiting, and time spent switching. So the total context switch time is:

Context switch time = T – (Sum of the execution times of all processes + CPU idle time).
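As a worked example of this bookkeeping, the sketch below assumes a single CPU whose elapsed time consists only of execution time, idle time and switching time, and splits the leftover time evenly across a known number of switches. The function names and sample numbers are hypothetical.

```python
def total_switch_time(T, execution_times, idle_time=0):
    """Time on a single CPU not spent executing processes or idling."""
    return T - (sum(execution_times) + idle_time)


def average_switch_time(T, execution_times, idle_time, num_switches):
    """Average cost of one switch, assuming every switch costs about the same."""
    if num_switches <= 0:
        raise ValueError("num_switches must be positive")
    return total_switch_time(T, execution_times, idle_time) / num_switches


if __name__ == "__main__":
    # Hypothetical run: three processes ran back to back for 40, 42 and 15 us,
    # the CPU never idled, and the whole run took 100 us with two switches.
    print(total_switch_time(100, [40, 42, 15]))          # 3
    print(average_switch_time(100, [40, 42, 15], 0, 2))  # 1.5
```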

For a single switch, take two processes, P1 and P2, and assume that:

- P1 is executing and P2 is waiting in the ready queue, and

- The switch happens after the n-th instruction of P1, where t(x, k) denotes the timestamp, in microseconds, of the k-th instruction of process x.

The context switch time is then: t(2, 1) – t(1, n).

Because a single switch is very short, it is commonly measured with two processes passing a token back and forth:

- P1 marks its start time

- P2 blocks, waiting for data from P1

- P1 sends a token to P2

- P1 tries to read a response token from P2 and blocks, which induces a context switch

- P2 is scheduled and receives the token

- P2 sends a response token to P1

- P2 tries to read the next token from P1 and blocks, which induces a context switch

- P1 is scheduled and receives the response token, and

- P1 marks the end time.

Let Td and Tr be the times to deliver and to receive a data token, and Tc the time for one context switch. P1 records the time at which it sends the token and the time at which it receives the response.

The elapsed time T between those two timestamps is:

T = 2 * (Td + Tc + Tr)

This formula is based on the following events:

- P1 sends the data token

- CPU switches the context

- P2 receives it and sends the response token

- CPU switches context and, finally

- P1 receives it.
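The token-passing measurement above can be sketched with two POSIX pipes and `os.fork`, with the parent playing P1 and the child playing P2. This is a rough, Unix-only sketch and all function names are hypothetical. Python's interpreter overhead is included in every measurement, and Td and Tr are not measured separately, so T / 2 is only an upper bound on Tc. On a multi-core machine the two processes may also run on different CPUs; the helper `pin_to_one_cpu` restricts the calling process to one CPU where `os.sched_setaffinity` is available (Linux), and it changes that process's affinity permanently.

```python
import os
import time


def pin_to_one_cpu() -> None:
    """Linux only: keep this process (and children forked later) on one CPU,
    so P1 and P2 must take turns and every hand-off forces a context switch."""
    if hasattr(os, "sched_setaffinity"):
        os.sched_setaffinity(0, {min(os.sched_getaffinity(0))})


def round_trip_time(rounds: int = 2000) -> float:
    """Average seconds for one P1 -> P2 -> P1 token round trip (POSIX only)."""
    to_p2_r, to_p2_w = os.pipe()
    to_p1_r, to_p1_w = os.pipe()
    pid = os.fork()
    if pid == 0:                          # child process plays P2
        os.close(to_p2_w)
        os.close(to_p1_r)
        for _ in range(rounds):
            os.read(to_p2_r, 1)           # block until P1's token arrives
            os.write(to_p1_w, b"r")       # send the response token
        os._exit(0)
    os.close(to_p2_r)                     # parent process plays P1
    os.close(to_p1_w)
    start = time.perf_counter()           # P1 marks the start time
    for _ in range(rounds):
        os.write(to_p2_w, b"t")           # send the data token
        os.read(to_p1_r, 1)               # block until P2 responds
    elapsed = time.perf_counter() - start  # P1 marks the end time
    os.waitpid(pid, 0)
    os.close(to_p2_w)
    os.close(to_p1_r)
    return elapsed / rounds


def switch_time_from_round_trip(T: float, Td: float, Tr: float) -> float:
    """Solve T = 2 * (Td + Tc + Tr) for Tc."""
    return T / 2 - Td - Tr
```

Typical usage is `pin_to_one_cpu()` followed by `T = round_trip_time()`; given separate estimates of Td and Tr, `switch_time_from_round_trip(T, Td, Tr)` solves the formula for Tc.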

Context switch time can be reduced in several ways, such as:

- Using hardware support from the MMU (Memory Management Unit) to store context state

- Using hardware support to switch between register files, and

- Reducing the amount of context that has to be saved and restored for each process.

How Many Context Switches Are Needed?

The number depends on the workload and the scheduling algorithm. Under shortest-remaining-time-first (SRTF) scheduling, for example, the following workload needs only two context switches.

Consider these conditions:

- There are three CPU-bound processes, P0, P1 and P2

- Their CPU burst times are 10, 20 and 30 time units, respectively, and

- Their arrival times are 0, 2 and 6, respectively.

The schedule unfolds like this:

- P0 is the only process available at time 0, so it runs

- When P1 arrives at time 2, P0 keeps running because its remaining time (8) is shorter than P1's (20), and

- When P2 arrives at time 6, P0 still keeps running, since its remaining time (4) is the shortest.

P0 finishes at time 10, and P1 is scheduled because it now has the shortest remaining time. P1 finishes at time 30, and P2 is scheduled.

So only two context switches are needed, one from P0 to P1 and one from P1 to P2, not counting the initial dispatch of P0 at time 0 or the exit at the end.
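The SRTF example can be checked with a small unit-time simulation. The names and simplifications in this sketch are hypothetical: it advances the clock one time unit at a time, breaks ties by earlier arrival and then by name, and counts a context switch whenever the process on the CPU changes, which excludes the initial dispatch and the final exit just as the count above does.

```python
def srtf_timeline(procs):
    """procs: list of (name, arrival, burst). Returns who ran in each time unit."""
    remaining = {name: burst for name, _, burst in procs}
    arrival = {name: arr for name, arr, _ in procs}
    time, timeline = 0, []
    while any(remaining.values()):
        ready = [n for n in remaining if remaining[n] > 0 and arrival[n] <= time]
        if not ready:
            timeline.append(None)  # CPU idle: nothing has arrived yet
        else:
            # Shortest remaining time first; ties go to the earlier arrival, then name.
            current = min(ready, key=lambda n: (remaining[n], arrival[n], n))
            timeline.append(current)
            remaining[current] -= 1
        time += 1
    return timeline


def count_context_switches(timeline):
    """Number of times the running process changes (idle units are skipped)."""
    ran = [n for n in timeline if n is not None]
    return sum(1 for a, b in zip(ran, ran[1:]) if a != b)


if __name__ == "__main__":
    t = srtf_timeline([("P0", 0, 10), ("P1", 2, 20), ("P2", 6, 30)])
    print(count_context_switches(t))  # 2
```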

How Fast is Context Switching?

On average, the kernel takes roughly 5 μs to process a context switch. Cache misses after the switch can add further execution time, which is very hard to quantify.

No single figure captures the speed of context switching, but it clearly matters: the more frequently switches happen, the more CPU utilization degrades.

As a rough guide, a context switch takes anywhere from a few hundred nanoseconds to a few microseconds.

The actual time depends on the CPU architecture and on how much context must be saved and restored.
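Two quick calculations make the frequency point concrete. On Unix systems, Python's standard `resource` module exposes `getrusage`, whose `ru_nvcsw` field counts voluntary context switches, so a process can observe how often it gives up the CPU. Multiplying a switch rate by a per-switch cost then gives the fraction of one CPU spent on the direct cost of switching. The 10,000 switches per second used below is a hypothetical rate, the 5 μs cost is the average quoted above, and cache effects are not included; the helper names are also hypothetical.

```python
import resource
import time


def voluntary_switches_during(fn) -> int:
    """Voluntary context switches this process made while fn() ran (Unix only)."""
    before = resource.getrusage(resource.RUSAGE_SELF).ru_nvcsw
    fn()
    after = resource.getrusage(resource.RUSAGE_SELF).ru_nvcsw
    return after - before


def switch_overhead_fraction(switches_per_second: float, cost_seconds: float) -> float:
    """Share of one CPU consumed by the direct cost of switching (ignores cache misses)."""
    return switches_per_second * cost_seconds


def nap_repeatedly(times: int = 20) -> None:
    for _ in range(times):
        time.sleep(0.001)  # each sleep blocks, so the kernel switches this process out


if __name__ == "__main__":
    print("voluntary switches:", voluntary_switches_during(nap_repeatedly))
    # Hypothetical rate: 10,000 switches per second at 5 microseconds each.
    print(f"overhead: {switch_overhead_fraction(10_000, 5e-6):.1%}")  # 5.0%
```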

What is the Impact of Context Switching?

Context switching puts extra work on the operating system, which slows processes down and lowers overall throughput. This overhead is inherent in how context switching operates.

Most of the burden falls on the kernel, which must track the resources used by each process, including:

- I/O actions

- Memory allocations

- Open files

- Buffer ownership and more.

Switching also lengthens the time a process takes to complete. Cached data belonging to the old process may need to be flushed, and the cache then has to be refilled with the new process's data, which takes more time.

Together, these effects reduce throughput and slow down the newly scheduled process.

Beyond its effect on resources, time and throughput, context switching adds overhead through activities such as:

- Flushing the TLB (Translation Lookaside Buffer)

- Sharing the cache among several tasks

- Running the task scheduler, and more.

Also, no useful process work is done while the CPU is switching context, which is a significant overhead.

Advantages

- The system can share one CPU among multiple processes

- Users get the impression that the system has multiple CPUs, which helps them multitask

- Switching is too fast for users to notice that processes are being switched, and

- Through time sharing, it supports multiprogramming.

Conclusion

Context switching is an important operating system feature that allows users to multitask.

Several processes can run on a single CPU, apparently at the same time, without interfering or conflicting with one another.

This mechanism, in fact, makes the computer much more user-friendly.